1 Introduction

In 1936, Alan Turing published a seminal paper [Tur36] which is rightly considered foundational for modern computer science. The main subject of his paper, as seen from the title, was the concept of a computable real number. Informally, such numbers can be approximated to an arbitrary desired precision using some algorithm. Turing formalized the latter as a Turing machine (TM), which is now the commonly accepted theoretical model of computation. Appearing before actual computers, TMs can be somewhat cumbersome to describe, but their computational power is equivalent to that of programs in a modern programming language such as Python. Let

$$ \begin{align*} {\mathbb {D}}=\left\{\frac{m}{2^{k}} : m\in {\mathbb {Z}},\ k\in {\mathbb {N}}\right\} \end{align*} $$

denote the set of dyadic rationals. A number $x\in {\mathbb {R}}$ is computable if there exists a TM $M$ which has a single input $n\in {\mathbb {N}}$ and which outputs $d_n\in {\mathbb {D}}$ such that

$$ \begin{align*} |x-d_n|<2^{-n}. \end{align*} $$

The dyadic notation here is purely to support the intuition that modern computers operate in binary; replacing $2^{-n}$ with any other constructive bound, such as, for instance, $n^{-1}$, would result in an equivalent definition.
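To make the definition concrete, here is a minimal Python sketch of such a machine for $x=\sqrt{2}$. The function name `sqrt2_dyadic` is ours and purely illustrative; the sketch relies only on exact integer arithmetic, so the bound $|x-d_n|<2^{-n}$ holds without rounding issues.

```python
from fractions import Fraction
from math import isqrt


def sqrt2_dyadic(n):
    """Return a dyadic rational d_n with |sqrt(2) - d_n| < 2**-n."""
    # isqrt(2 * 4**n) equals floor(sqrt(2) * 2**n), computed exactly on integers,
    # so d_n = floor(sqrt(2) * 2**n) / 2**n lies within 2**-n below sqrt(2).
    return Fraction(isqrt(2 * 4**n), 2**n)
```

For instance, `sqrt2_dyadic(3)` is the dyadic rational $11/8$, and indeed $(11/8)^2<2<(12/8)^2$.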

Since there are only countably many programs in Python, there are only countably many computable numbers. Yet, it is surprisingly nontrivial to present an example of a non-computable one. To this end, Turing introduced an algorithmically unsolvable problem, now known as the Halting Problem: determine algorithmically whether a given TM halts or runs forever. Turing showed that there does not exist a program $M$ whose input is another program $M_1$ and whose output is $1$ if $M_1$ halts, and $0$ if $M_1$ does not halt.

From this, a non-computable real is constructed as follows. Let us enumerate all programs $M_1, M_2, M_3, \ldots$ in some explicit way. For instance, all possible finite combinations of symbols in the Python alphabet can be listed in order of length, with strings of equal length ordered lexicographically. Some of these programs will not run at all (and thus halt by definition) but some will run without ever halting. Let the halting predicate $p(n)$ be equal to $1$ if $M_n$ halts and $0$ otherwise.
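The explicit enumeration mentioned above can be sketched in a few lines of Python. We order strings first by length and then lexicographically, since plain lexicographic order over an alphabet with more than one symbol would never leave the strings that begin with the first symbol. The name `enumerate_strings` and the two-letter alphabet in the usage note are illustrative assumptions only.

```python
from itertools import count, product


def enumerate_strings(alphabet):
    """Yield every non-empty finite string over `alphabet`, shortest first."""
    for length in count(1):
        # product() lists tuples of a fixed length in lexicographic order.
        for symbols in product(alphabet, repeat=length):
            yield "".join(symbols)
```

Over the alphabet `"ab"`, the listing begins `a, b, aa, ab, ba, bb`; every finite string appears at some finite position, which is all that the construction of the halting predicate requires.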

Now, set

This number is clearly non-computable – an algorithm computing it could be used to determine the value of the halting predicate for every given n, and thus cannot exist.

It is worth noting a further property of $\alpha$. Let us say that $x\in {\mathbb {R}}$ is left-computable if there exists a TM $M$ which outputs an increasing sequence

$$ \begin{align*} a_n\nearrow x. \end{align*} $$

There are, again, only countably many left-computable numbers, and it is trivial to see that computable numbers form their subset (a proper subset, as seen below). Right computability is defined in the same way with decreasing sequences, and it is a nice exercise to show that being simultaneously left- and right-computable is equivalent to being computable.
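The exercise mentioned above has a short algorithmic core, which we sketch here under the assumption that the two approximating sequences are given as Python callables `lower` and `upper` returning exact rationals (both names, and the function `approximate`, are hypothetical). The idea is to wait until the two sequences are within $2^{-(n+1)}$ of each other and then round to a nearby dyadic rational.

```python
from fractions import Fraction


def approximate(lower, upper, n):
    """Return a dyadic d with |x - d| < 2**-n, where lower(k) increases to x
    and upper(k) decreases to x."""
    gap = Fraction(1, 2 ** (n + 1))
    k = 0
    # The loop terminates because upper(k) - lower(k) tends to 0.
    while upper(k) - lower(k) >= gap:
        k += 1
    # Rounding lower(k) to the grid of step 2**-(n+1) moves it by at most
    # 2**-(n+2), so the total error stays below 2**-(n+1) + 2**-(n+2) < 2**-n.
    scale = 2 ** (n + 1)
    return Fraction(round(lower(k) * scale), scale)
```

Note that neither sequence alone would suffice: without the matching bound from the other side, the program has no way to know how close the current term is to $x$.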

The non-computable number $\alpha$ is left-computable, as it is the limit of

which can be generated by a program which emulates $M_1,\ldots ,M_k$ for $k$ steps to produce $\alpha _k$.
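The emulation strategy can be illustrated with a toy model in Python. Everything here is an assumption made for illustration: programs are modelled as generator functions (one `yield` per computation step), the helper names are ours, and we weight the $n$-th program by $2^{-n}$, which is one natural choice but not necessarily the normalization used in the text.

```python
from fractions import Fraction


def halts_within(program, steps):
    """Emulate a toy program (a generator function) for at most `steps` steps."""
    run = program()
    for _ in range(steps):
        try:
            next(run)
        except StopIteration:
            return True  # the program halted within the step budget
    return False


def alpha_k(programs, k):
    """k-th approximation: count only programs among the first k seen to halt."""
    return sum(
        (Fraction(1, 2**n)
         for n, program in enumerate(programs[:k], start=1)
         if halts_within(program, k)),
        Fraction(0),
    )


def halts_after(m):
    """Toy program that performs m steps and then halts."""
    def program():
        for _ in range(m):
            yield
    return program


def loops_forever():
    """Toy program that never halts."""
    while True:
        yield
```

With `programs = [halts_after(0), loops_forever, halts_after(5)]`, the values `alpha_k(programs, k)` never decrease as $k$ grows: a program that has been seen to halt stays counted, while a program that has not halted yet may still be added later. This one-sidedness is exactly why the limit is left-computable without being computable.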

Since Turing, examples of non-computable reals were typically constructed along similar lines. In the 2000s, working on problems of computability in dynamics, M. Braverman and the second author discovered that non-computability may be produced via an analytic expression [BY09]. This expression came in the form of the Brjuno function ${{\mathcal B}}(\theta )$, which was introduced by Yoccoz [Yoc96] to study linearization problems of irrationally indifferent dynamics. We will discuss ${{\mathcal B}}(\theta )$ and its various cousins in detail below, but let us give an explicit formula here. For $\theta \in (0,1)\setminus {\mathbb {Q}}$, we have

$$ \begin{align*} {{\mathcal B}}(\theta )=\sum_{i=0}^{\infty }\theta _{-1}\theta _{0}\theta _{1}\cdots \theta _{i-1}\log \frac{1}{\theta _{i}}. \end{align*} $$

Here, $\theta _{-1}=1$, $\theta _0\equiv \theta $, and $\theta _{i+1}$ is obtained from $\theta _i$ by applying the Gauss map $x\mapsto \{1/x\}$. Of course, for rational values of $\theta $, the summand will eventually turn infinite. The sum may also diverge for an irrational $\theta $, and yet can be shown to converge to a finite value for almost all values in $(0,1)$.
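As a numerical illustration, the partial sums of this series are easy to generate. The sketch below works in floating point, so it is only a heuristic aid and not a certified computation; the function name `brjuno_partial_sums` is ours. Since every summand is positive, the partial sums increase, which is the source of the left-computability discussed next.

```python
import math


def brjuno_partial_sums(theta, terms):
    """Return the first `terms` partial sums of the Brjuno series at theta."""
    sums = []
    total = 0.0
    weight = 1.0  # theta_{-1} * theta_0 * ... * theta_{i-1}
    for _ in range(terms):
        if theta == 0.0:
            # A rational input eventually reaches 0, where log(1/theta) is infinite.
            sums.append(math.inf)
            break
        total += weight * math.log(1.0 / theta)
        sums.append(total)
        weight *= theta
        theta = (1.0 / theta) % 1.0  # Gauss map x -> {1/x}
    return sums
```

For the golden mean $\theta =(\sqrt{5}-1)/2$, which is fixed by the Gauss map, the series is geometric and sums to $\log (1/\theta )/(1-\theta )$; thirty terms of the sketch agree with this closed form to well within $10^{-5}$.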

It is evident that if $\theta $ is computable, then ${{\mathcal B}}(\theta )$ is left-computable. Very surprisingly, this can be reversed in the following way. Setting

for each left-computable $y\in [y_*,\infty )$, there exists a computable $\theta \in (0,1)$ with ${{\mathcal B}}(\theta )=y$. The Brjuno function can be seen as a “machine” mapping computable values in $(0,1)$ surjectively onto left-computable values in $[y_*,\infty )$.

Moreover, there exists an explicit algorithm $\hat M$ which, given a sequence $a_n\nearrow y$, computes $\theta $ such that ${{\mathcal B}}(\theta )=y$. Taking Turing’s non-computable $\alpha $, as we saw above, there is an explicit program to produce a sequence of rationals $\alpha _n\nearrow \alpha $. “Feeding” the sequence $\alpha _n$ to $\hat M$, we obtain an explicitly computable $\theta _*$ for which ${{\mathcal B}}(\theta _*)=\alpha $. This example demonstrates that ${{\mathcal B}}(\theta )$ is a natural analytic mechanism for producing non-computable reals.

The purpose of this article is as follows. We distill the proof of the above result from [BY09], where it is somewhat hidden in the considerations of complex dynamics and Julia sets. Moreover, we generalize the result to cover other Brjuno-type functions which have previously appeared in the mathematical literature, some of them 60 years before Yoccoz’s work and in a completely different context. Our generalization describes the phenomenon in the language of dynamical systems. As we will see, ergodic sampling of a particular type of dynamics with suitable weights leads to non-computability.

Finally, for an even broader natural class of functions, which have also previously been studied, and which do not quite fit the above framework, we prove a more general, albeit weaker, non-computability result.

2 Preliminaries

2.1 Computable functions

The “modern” definition of a computable function requires the concept of an oracle. Loosely speaking, an oracle for a real number $x$, for example, is a user who knows $x$ and, when queried by a TM, can input its value with any desired precision. In the world of TMs (as well as Python programs), an oracle may be conceived as an infinite tape on which an infinite string of dyadic rationals is written (encoding, for instance, a Cauchy sequence for $x\in {\mathbb {R}}$) and which the program is able to read at will. Of course, only a finite amount of information can be read off this tape each time. As well as a real number, an oracle can be used to encode anything else which could be written on an infinite tape, for instance, the magically obtained solution to the Halting Problem.

Formally, an oracle is a function $\phi :{\mathbb {N}}\to {\mathbb {D}}$. An oracle for $x\in {\mathbb {R}}$ satisfies

$$ \begin{align*} |\phi (n)-x|<2^{-n}\quad \text{for all } n\in {\mathbb {N}}. \end{align*} $$

We say a TM $M^{\phi }$ is an oracle Turing Machine if at any step of the computation, $M^{\phi }$ can query the value $\phi (n)$ for any $n$. We treat an oracle TM as a function of the oracle; that is, we think of $\phi $ in $M^{\phi }$ as a placeholder for any oracle, and the TM performs its computational steps depending on the particular oracle it is given. We will talk about “querying the oracle,” “being given access to an oracle for $x$,” or just “given $x$.”

We need oracle TMs to define computable functions on the reals. For $S\subset {\mathbb {R}}$, we say that a function $f:S\to {\mathbb {R}}$ is computable if there exists an oracle TM $M^\phi $ with a single input $n$ such that for any $x\in S$, the following is true. If $\phi $ is an oracle for $x$, then upon input $n$, the machine $M^\phi $ outputs $d_n\in {\mathbb {D}}$ such that

$$ \begin{align*} |d_n-f(x)|<2^{-n}. \end{align*} $$

In other words, there is an algorithm which can output the value of $f(x)$ with any desired precision if it is allowed to query the value of $x$ with an arbitrary finite precision.
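A minimal Python sketch may help fix ideas. We model an oracle as a callable returning an exact dyadic rational within $2^{-n}$ of $x$, and an oracle machine as a function that receives the oracle together with the input $n$. The example function $f(x)=3x$, as well as the names `oracle_for_one_third` and `triple`, are illustrative assumptions.

```python
from fractions import Fraction


def oracle_for_one_third(n):
    """An oracle for x = 1/3: a dyadic rational within 2**-n of x."""
    return Fraction(2**n // 3, 2**n)


def triple(oracle, n):
    """Oracle machine for f(x) = 3x: output d_n with |d_n - 3x| < 2**-n."""
    # Querying with precision 2**-(n+2) gives error 3 * 2**-(n+2) < 2**-n.
    return 3 * oracle(n + 2)
```

The same function `triple` works with any oracle for any real $x$; this independence of the particular input is what the definition demands. The machine decides how much precision to request from the oracle, here two extra binary digits.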

The domain of the real-valued function plays an important role in the above definition. The definition states that there is a single algorithm which, given $x$, works for every $x \in S$. We will abbreviate this by saying that $f$ is uniformly computable on $S$.

In the case when $S$ is a singleton, $S=\{x_0\}$, we will say that the function $f(x)$ is computable at the point $x_0$. Evidently, the weakest computability result and the strongest non-computability result one can obtain with regard to real-valued functions is when the domain is restricted to a single point.

It is worth making note of the following easy fact, whose proof we leave as an exercise.

Proposition 2.1 If $f$ is uniformly computable on $S$, then $f$ is continuous on $S$.

Uniform left- or right-computability of functions is defined in a completely analogous way.

2.2 Brjuno function and friends

Every irrational number $\theta $ in the unit interval admits a unique (simple) continued fraction expansion:

$$ \begin{align*} [a_{1}, a_{2}, a_{3}, \ldots] \equiv \cfrac{1}{a_{1}+\cfrac{1}{a_{2}+\cfrac{1}{a_{3}+\dots}}} \in (0,1)\setminus\mathbb{Q}, \end{align*} $$

where $a_{i} \in {\mathbb {N}}$. An important related concept is that of the Gauss map $G:(0,1]\to [0,1]$ given by

$$ \begin{align*} G(x)=\left\{\frac{1}{x}\right\}=\frac{1}{x}-\left\lfloor \frac{1}{x}\right\rfloor ; \end{align*} $$

it has the property

$$ \begin{align*} G([a_{1}, a_{2}, a_{3}, \ldots ])=[a_{2}, a_{3}, a_{4}, \ldots ]. \end{align*} $$

In what follows, for a function $F$, we denote by $F^{n}$ its $n$-th iterate. For ease of notation, for each $j\in {\mathbb {N}}$, let us define the function $\eta _{j}: (0, 1) \setminus \mathbb {Q} \to (0, 1) \setminus \mathbb {Q}$ as $\eta _{j}(x) = G^{j-1}(x)$, so that

$$ \begin{align*} \eta _{j}([a_{1}, a_{2}, a_{3}, \ldots ])=[a_{j}, a_{j+1}, a_{j+2}, \ldots ]. \end{align*} $$
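The shift property of the Gauss map is easy to observe in exact arithmetic. The sketch below uses rational inputs, for which the expansion terminates, purely as an illustration; the irrational case considered in the text behaves the same way digit by digit. The function names `gauss` and `digits` are ours.

```python
import math
from fractions import Fraction


def gauss(x):
    """Gauss map x -> {1/x} in exact rational arithmetic (x != 0)."""
    inverse = 1 / x
    return inverse - math.floor(inverse)


def digits(x):
    """Continued fraction digits [a_1, a_2, ...] of a rational x in (0, 1)."""
    result = []
    while x != 0:
        result.append(math.floor(1 / x))  # a_j is the integer part of 1/x
        x = gauss(x)                      # and the Gauss map shifts to the tail
    return result
```

For example, `digits(Fraction(7, 16))` returns `[2, 3, 2]`, in agreement with $7/16=1/(2+1/(3+1/2))$, while `digits(gauss(Fraction(7, 16)))` returns the shifted list `[3, 2]`.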

We define Yoccoz’s Brjuno function, or for brevity, just the Brjuno function [Yoc96] by

$$ \begin{align} {{\mathcal B}}(x)=\sum_{i=1}^{\infty }\eta _{0}(x)\eta _{1}(x)\cdots \eta _{i-1}(x)\log \frac{1}{\eta _{i}(x)}, \tag{2.1} \end{align} $$

where we set $\eta _{0}(x) = 1$ for all $x$. Irrationals in $(0,1)$ for which ${{\mathcal B}}(x)<+\infty $ are known as Brjuno numbers; they form a full measure subset of $(0,1)$. The original work of Brjuno [Brj71] characterized Brjuno numbers using a different infinite series, whose convergence is equivalent to that of (2.1).

The Brjuno condition was introduced in the study of the linearization of neutral fixed points. The function ${{\mathcal B}}$ has an important geometric meaning in this context as an estimate on the size of the domain of definition of a linearizing coordinate. It has a number of remarkable properties and has been studied extensively (see, for instance, [MMY97]).

Intuitively, the condition ${{\mathcal B}}(x)<+\infty $ is a Diophantine-type condition; if $x$ is a Diophantine number, then it can be shown that the series (2.1) is majorized by a geometric series. As we have learned from a talk by Marmi [Mar22], similar expressions have appeared much earlier in the theory of Diophantine approximation. Notably, in 1933, Wilton [Wil33] defined the sums

which we will call the first and second Wilton functions, respectively.

To illustrate how different the application of Wilton’s functions is from that of the Brjuno function, let us quote Wilton’s results. If $d(n)$ denotes the number of divisors of a positive integer $n$, Wilton showed that

$$ \begin{align*} \sum_{n=1}^{\infty} \dfrac{d(n)}{n} \cos 2\pi nx < \infty &\text{ if and only if } {{\mathcal W}}_{1}(x) < \infty, \text{ and} \\ \sum_{n=1}^{\infty} \dfrac{d(n)}{n} \sin 2\pi nx < \infty &\text{ if and only if } {{\mathcal W}}_{2}(x) < \infty. \end{align*} $$

One important generalization of the simple continued fraction expansion of an irrational number is the $\alpha $-continued fraction expansion for $\alpha \in [1/2, 1]$. Let $A_{\alpha } : [0, \alpha ] \to [0, \alpha ]$ be the map

By iterating this mapping, we define the infinite $\alpha $-continued fraction expansion for any $x \in (0, \alpha ) - \mathbb {Q}$ as follows. For $n \geq 0$, we let

$$ \begin{align*} & x_{0} = |x - \lfloor x - \alpha + 1 \rfloor|, a_{0} = \lfloor x - \alpha + 1 \rfloor, \varepsilon_{0} = 1,\\ & x_{n+1} = A_{\alpha}(x_{n}) = A_{\alpha}^{n+1}(x), a_{n+1} = \left\lfloor \dfrac{1}{x_{n}} - \alpha + 1 \right\rfloor \geq 1, \varepsilon_{n+1} = \text{sgn}(x_{n}). \end{align*} $$

Then, we can write

$$ \begin{align*} x = [(a_{1}, \varepsilon_{1}), (a_{2}, \varepsilon_{2}), \ldots, (a_{n}, \varepsilon_{n}), \ldots] := \dfrac{\varepsilon_{0}}{a_{1}+\dfrac{\varepsilon_{1}}{a_{2}+\dfrac{\varepsilon_{2}}{a_{3}+\dots}}} \in (0,\alpha)-\mathbb{Q}. \end{align*} $$

Note that when $\alpha =1$, we recover the standard continued fraction expansion.

Generalizations of the Brjuno function based on the above expansions have been studied, for example, in [LMNN10]. There, the authors considered the properties of the function

where $\eta _{\alpha ,0}(x) = 1$, $\eta _{\alpha , j}(x) = A_{\alpha }^{j-1}(x)$ for $\alpha \in [1/2, 1]$ and $j \geq 1$ is a generalization of $\eta _{j}$ to $\alpha $-continued fraction maps, and $u:(0, 1) \to \mathbb {R}^{+}$ is a $\mathcal {C}^{1}$ function such that

A further generalized class $\{{{\mathcal B}}_{\alpha , u, \nu }\}$ of Brjuno functions is discussed in [BCM24], where the last two conditions above are dropped and the term $\eta _{\alpha ,1}(x) \eta _{\alpha ,2}(x) \dots \eta _{\alpha ,i-1}(x)$ is raised to some power $\nu \in \mathbb {Z}^{+}$:

As shown in [BCM24]:

Proposition 2.2 For all $\alpha \in \mathbb {Q} \cap [1/2, 1]$, such functions ${{\mathcal B}}_{\alpha , u, \nu }$ are lower semi-continuous and thus attain their global minima.

Note that if we take $\alpha =1$, $\nu =1$, and $u = -\log $, we recover Yoccoz’s Brjuno function discussed above; similarly, taking $\alpha =1$, $\nu =1$, and $u = \log ^{2}$ recovers the first Wilton function ${{\mathcal W}}_{1}$. Note, however, that we cannot obtain ${{\mathcal W}}_{2}$ as a special case of ${{\mathcal B}}_{\alpha , u, \nu }$.

3 Statements of the results

3.1 A general framework

We refer the readers to the survey [MMY06] which discusses a cohomological interpretation of the Brjuno function and lays the groundwork for its generalizations. Our discussion will be much less technical, yet essentially equivalent in the cases we consider. It will yield a generalization which (a) is broad enough to include the relevant examples we have quoted and (b) captures the essence of the non-computability phenomenon discovered in [BY09]. This is achieved via the following framework. Suppose $G(x)$ is a piecewise-defined expanding mapping whose domain is an infinite collection of subintervals of $(0,1)$, each of which is mapped surjectively over all of $(0,1)$. Let $\psi :(0,1)\to {\mathbb {R}}$ (in our case, $\psi (x)=x^\nu $), and consider the skew product dynamics given by

$$ \begin{align} F:\left(\begin{array}{c}x\\y\end{array}\right)\mapsto \left(\begin{array}{c}G(x)\\\psi(x) y\end{array}\right). \end{align} $$

The class of functions ${{\mathcal F}}$ for which non-computability arises is produced by additive (ergodic) sampling of orbits $(x_n,y_n)$ of $F$ using a suitable weight function $u(x,y)\equiv u(x)$ with positive values:

It is worth noting that such a function is a formal solution of the twisted cohomological equation

As noted above, in our case, we set

We will now need to make somewhat technical but straightforward general assumptions on G and u.

Let $G: (0, 1) \to (0, 1)$ be a function which is $\mathcal {C}^{1}$ on a set $S \subseteq (0, 1)$, with $s_{0} := \inf S$, $s_{1} := \sup S$. Suppose that for a countable collection of disjoint open intervals $J_{i} = (\ell _{i}, r_{i})$ with $\ell _{1}> \ell _{2} > \cdots $, we have $S = J_{1} \sqcup J_{2} \sqcup J_{3} \sqcup \cdots $. Below, we will denote $G_{i} := G|_{J_{i}}$, so that $G_{i}^{-1}: (s_{0}, s_{1}) \to J_{i}$ is the unique branch of $G^{-1}$ mapping into $J_{i}$. We assume that $G$ satisfies the following criteria:

-

(i)

$G(J_{i}) = (s_{0}, s_{1})$

for each i.

$G(J_{i}) = (s_{0}, s_{1})$

for each i. -

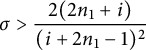

(ii)

$|G'|>1$

, and additionally there exist

$|G'|>1$

, and additionally there exist

$\tau>1, \sigma >1$

, and

$\tau>1, \sigma >1$

, and

$\kappa \in \mathbb {Z}$

such that if we let then we have both

$\kappa \in \mathbb {Z}$

such that if we let then we have both $$ \begin{align*} \tau_{i, 1} := \inf_{x \in J_{i}} \left| G'(x) \right|, \tau_{i, \kappa} := \inf_{x \in J_{i}} \left| (G^{\kappa})'(x) \right|, \end{align*} $$

$$ \begin{align*} \tau_{i, 1} := \inf_{x \in J_{i}} \left| G'(x) \right|, \tau_{i, \kappa} := \inf_{x \in J_{i}} \left| (G^{\kappa})'(x) \right|, \end{align*} $$

$\tau _{i, 1}^{-1} < \ell _{i} \cdot \sigma $

and

$\tau _{i, 1}^{-1} < \ell _{i} \cdot \sigma $

and

$\tau _{i, \kappa }^{-1} < \ell _{i} \cdot \tau ^{-1}$

for all i. In particular, since

$\tau _{i, \kappa }^{-1} < \ell _{i} \cdot \tau ^{-1}$

for all i. In particular, since

$\ell _{i} \leq s_{1} < 1$

, this means that

$\ell _{i} \leq s_{1} < 1$

, this means that

$|(G^{\kappa })'(x)|> \tau $

for all

$|(G^{\kappa })'(x)|> \tau $

for all

$x \in (s_{0}, s_{1})$

.

$x \in (s_{0}, s_{1})$

.

-

(iii)

$G_{1}$

is decreasing on

$G_{1}$

is decreasing on

$J_{1}$

.

$J_{1}$

. -

(iv) Let

$\varphi $

be the unique fixed point of

$\varphi $

be the unique fixed point of

$G_{1}$

, and let

$G_{1}$

, and let

$\delta _{G}(N) := G_{N}^{-1}(\varphi )- G_{N+1}^{-1}(\varphi )>0$

. Then, there is some constant

$\delta _{G}(N) := G_{N}^{-1}(\varphi )- G_{N+1}^{-1}(\varphi )>0$

. Then, there is some constant

$D>0$

such that for all

$D>0$

such that for all

$N \in \mathbb {Z}^{+}$

,

$N \in \mathbb {Z}^{+}$

,  $$ \begin{align*} \dfrac{r_{N+1}}{\ell_{N+1}} \cdot \dfrac{r_{N}-\ell_{N+1}}{\delta_{G}(N)} < D. \end{align*} $$

$$ \begin{align*} \dfrac{r_{N+1}}{\ell_{N+1}} \cdot \dfrac{r_{N}-\ell_{N+1}}{\delta_{G}(N)} < D. \end{align*} $$

-

(v) We have

$\dfrac {r_{i}-\ell _{i}}{\ell _{i}^{2}} < D$

for some constant

$\dfrac {r_{i}-\ell _{i}}{\ell _{i}^{2}} < D$

for some constant

$D>0$

independent of i.

$D>0$

independent of i. -

(vi) G is computable on its domain.

-

(vii)

$g(i) := \dfrac {r_{i}}{\ell _{i+1}} - 1 \to 0$

as

$g(i) := \dfrac {r_{i}}{\ell _{i+1}} - 1 \to 0$

as

$i \to \infty $

. Note that since g is positive and bounded from above, there is some constant

$i \to \infty $

. Note that since g is positive and bounded from above, there is some constant

$m_{g}>0$

for which

$m_{g}>0$

for which

$0 < g(i) \leq m_{g}$

.

$0 < g(i) \leq m_{g}$

.
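As a quick sanity check of two of these criteria, consider the Gauss map, whose branches are defined on $J_{i}=(1/(i+1), 1/i)$. The following sketch evaluates the quantities in (v) and (vii) in exact rational arithmetic; the helper names are ours, and the check covers only these two conditions, not the full list.

```python
from fractions import Fraction


def gauss_branch(i):
    """Endpoints (l_i, r_i) of the i-th branch interval of the Gauss map."""
    return Fraction(1, i + 1), Fraction(1, i)


def ratio_v(i):
    """The quantity (r_i - l_i) / l_i**2 from criterion (v)."""
    left, right = gauss_branch(i)
    return (right - left) / left**2


def g(i):
    """The quantity r_i / l_{i+1} - 1 from criterion (vii)."""
    _, right = gauss_branch(i)
    next_left, _ = gauss_branch(i + 1)
    return right / next_left - 1
```

A direct computation gives `ratio_v(i) == (i + 1) / i`, which never exceeds $2$, and `g(i) == 2 / i`, which is positive, bounded by $2$, and tends to $0$, so both criteria hold for the Gauss map with, say, $D=3$ and $m_{g}=2$.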

It is easy to show that properties (i) and (ii) imply that $G$ restricted to the set $\Lambda = \bigcap _{j=0}^{\infty }G^{-j}((s_{0}, s_{1}))$ is topologically conjugate to the full shift over the alphabet of positive integers $\mathbb {Z}^{+}$. As such, we can write any $x \in \Lambda $ as its symbolic representation $x = [a_{1}, a_{2}, \ldots ]$, where $G^{j-1}(x) \in J_{a_{j}}$. In this notation, $\varphi $ above can be written as $[1, 1, 1, \ldots ]$. By (iii), we have $\varphi < s_{1}$, from which it is straightforward to check that we indeed have $\delta _{G}(N)>0$ in (iv). For convenience, for $j \in \mathbb {Z}^{+}$, we will denote $\eta _{j}: \Lambda \to \Lambda $, $\eta _{j} = G^{j-1}$, so that

$$ \begin{align*} \eta _{j}([a_{1}, a_{2}, a_{3}, \ldots ])=[a_{j}, a_{j+1}, a_{j+2}, \ldots ]. \end{align*} $$

Henceforth, we will assume that $G$ is restricted to $\Lambda $.

Now, let $u: (s_{0}, s_{1}) \to \mathbb {R}^{+}$ be a $\mathcal {C}^{1}$ function satisfying the following:

-

(i)

$\lim _{x \to s_{0}^{+}}u(x) = \infty $

.

$\lim _{x \to s_{0}^{+}}u(x) = \infty $

. -

(ii) If

$s_{1}=1$

, then

$s_{1}=1$

, then

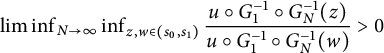

$\liminf _{N \to \infty } \inf _{z,w \in (s_{0},s_{1})} \dfrac {u \circ G_{1}^{-1} \circ G_{N}^{-1}(z)}{u \circ G_{1}^{-1} \circ G_{N}^{-1}(w)}> 0$

.

$\liminf _{N \to \infty } \inf _{z,w \in (s_{0},s_{1})} \dfrac {u \circ G_{1}^{-1} \circ G_{N}^{-1}(z)}{u \circ G_{1}^{-1} \circ G_{N}^{-1}(w)}> 0$

. -

(iii) There is some

$C>0$

such that

$C>0$

such that

$|u'(x)| < \dfrac {C}{(x-s_{0})^{2}}$

for all

$|u'(x)| < \dfrac {C}{(x-s_{0})^{2}}$

for all

$x \in (s_{0}, s_{1})$

.

$x \in (s_{0}, s_{1})$

. -

(iv) u is computable on numbers

$[a_{1}, a_{2}, \ldots ]$

such that

$[a_{1}, a_{2}, \ldots ]$

such that

$a_{l}=1$

for all

$a_{l}=1$

for all

$l>l_{0}$

for some integer

$l>l_{0}$

for some integer

$l_{0}$

.

$l_{0}$

. -

(v) u is left-computable on all of

$\Lambda $

.

$\Lambda $

.

In what follows, for any

![]() $\nu>0,$

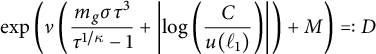

we define the generalized Brjuno function to be

$\nu>0,$

we define the generalized Brjuno function to be

where $x = [a_{1}, a_{2}, \ldots ] \in \Lambda $.
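The skew product viewpoint suggests a simple way to experiment with such functions numerically. The sketch below is written under an explicit assumption: we take the sampled sum to be $\sum _{n\geq 0} y_{n} u(x_{n})$ along the orbit $(x_{n}, y_{n})$ of $F$ started at $(x, 1)$ with $\psi (x)=x^{\nu }$, a form chosen because for the Gauss map, $\nu =1$, and $u(x)=\log (1/x)$ it reproduces the Brjuno series from the Introduction. The function name and signature are ours, and floating point makes this a heuristic aid only.

```python
import math


def sampled_sum(x, G, u, nu, terms):
    """Partial sum of y_n * u(x_n) along the orbit of F(x, y) = (G(x), x**nu * y)."""
    total = 0.0
    y = 1.0  # assumed initial fibre coordinate y_0 = 1
    for _ in range(terms):
        total += y * u(x)
        # Simultaneous update: both components use the current value of x.
        x, y = G(x), (x ** nu) * y
    return total


def gauss_float(x):
    """Floating-point Gauss map x -> {1/x}."""
    return (1.0 / x) % 1.0


def log_inverse(x):
    """Weight function u(x) = log(1/x)."""
    return math.log(1.0 / x)
```

At the golden mean $\theta =(\sqrt{5}-1)/2$, a fixed point of the Gauss map, the sum with $\nu =2$ is geometric with ratio $\theta ^{2}=1-\theta $ and equals $\log (1/\theta )/\theta $; the call `sampled_sum(theta, gauss_float, log_inverse, 2, 30)` matches this value closely.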

We note:

Theorem 3.1 The following functions restricted to their corresponding sets $\Lambda $ fall under the definition of a generalized Brjuno function given above:

- Yoccoz’s Brjuno function ${{\mathcal B}}$ (2.1).

- The first Wilton function ${{\mathcal W}}_{1}$ (2.2).

- The functions ${{\mathcal B}}_{\alpha , u, \nu }$ (2.5) under the additional assumptions of (ii)–(v) on $u$. For example, taking $G = A_{\alpha }$ for $\alpha \in [1/2,1]$ and $u(x)$ to be any of $\log ^{n}(1/x)$ for $n \in \mathbb {Z}$ or $x^{-1}$ yields a generalized Brjuno function.

Let us postpone the proof to Section A.1 in the Appendix, and proceed to formulating the results.

3.2 Main results

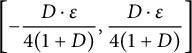

Theorem 3.2 Let $x_*$ be a computable real number in $\Lambda $ with the property $y_*=\Phi (x_*)<+\infty .$ There exists an oracle TM $M^\phi $ with a single input $n\in {\mathbb {N}}$ such that the following holds. Suppose $y\in [y_*,+\infty )$ and

Then, $M^\phi $ outputs $d_n$ such that

As a corollary:

Corollary 3.3 If $y\in [y_*,+\infty )$ is left-computable, then there exists a computable $x\in \Lambda $ with

In fact, it is clear from the proof of Theorem 3.2 that countably many such $x \in \Lambda $ exist.

As was shown in [BM12], the Brjuno function ${{\mathcal B}}$ attains its global minimum at

Therefore:

Corollary 3.4 If $y\in [{{\mathcal B}}(w_*),+\infty )$ is left-computable, then there exists a computable $x\in (0,1)$ with

4 Proof of Theorem 3.2

4.1 Three main lemmas

It will be helpful to first outline the general strategy for the proof of Theorem 3.2. We are given a computable sequence $\{y_{n}\}$ converging upward to some left-computable y, and we must find a computable $x \in (s_{0}, s_{1})$ for which $y = \Phi (x)$. This is done by starting with some $\gamma _{0} \in (s_{0}, s_{1})$ and iteratively modifying the symbolic representation of $\gamma _{k}$ to “squeeze” $\Phi (\gamma _{k})$ into the interval $(\Phi (\gamma _{s+k})-2^{-k}\varepsilon , \Phi (\gamma _{s+k})+2^{-k}\varepsilon )$ for some positive integer s. Passing to the limit, we obtain $x = \gamma _{\infty }$ for which $\Phi (x) = y$ as needed.

To ensure this strategy works, we need ways of carefully controlling the value of $\Phi (\gamma )$ from the symbolic representation of $\gamma $. This role is played by Lemmas 4.1–4.3 below, which are analogous to Lemmas 5.18–5.20, respectively, in [BY09] and whose proofs are given in Section A.2 of the Appendix.

Lemma 4.1 For any initial segment $I = [a_{1}, a_{2}, \ldots , a_{n}]$, write $\omega = [a_{1}, a_{2}, \ldots , a_{n}, 1, 1, 1, \ldots ]$. Then, for any $\varepsilon> 0$, there is an $m> 0$ and an integer N such that if we write $\beta ^{N} = [a_{1}, a_{2}, \ldots , a_{n}, 1, 1, \ldots , 1, N, 1, 1, \ldots ]$, where the N is located in the $(n+m)$-th position, then

In the Appendix, this lemma will be proven under the slightly weaker assumptions used in Section 5. Also, it is clear from the proof that m can be taken arbitrarily large.

Lemma 4.2 With

![]() $\omega $

as above, for any

$\omega $

as above, for any

![]() $\varepsilon>0,$

there is an

$\varepsilon>0,$

there is an

![]() $m_{0}>0$

, which can be computed from

$m_{0}>0$

, which can be computed from

![]() $(a_{0}, a_{1}, \ldots , a_{n})$

and

$(a_{0}, a_{1}, \ldots , a_{n})$

and

![]() $\varepsilon $

, such that for any

$\varepsilon $

, such that for any

![]() $m \geq m_{0}$

and any tail

$m \geq m_{0}$

and any tail

![]() $I = [a_{n+m}, a_{n+m+1}, \ldots ],$

we have

$I = [a_{n+m}, a_{n+m+1}, \ldots ],$

we have

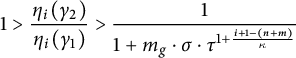

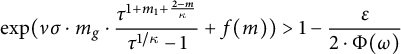

where

Lemma 4.3 Let $\omega = [a_{1}, a_{2}, \ldots ]$ be such that $\Phi (\omega ) < \infty $. Write $\omega _{k} = [a_{1}, a_{2}, \ldots , a_{k}, 1, 1, \ldots ]$. Then, for every $\varepsilon>0,$ there is an m such that for all $k \geq m$,

We proceed with proving Theorem 3.2.

4.2 The proof

We first require a few preliminary results.

Proposition 4.4 Let $m_{0}>0$ be an integer. Then, there exists an oracle TM which, given access to a number $x = [a_{1}, a_{2}, \ldots ] \in \Lambda $ such that $a_{m} = 1$ for $m>m_{0}$, computes $\Phi (x)$.

Proof Let $\varphi = [1, 1, 1, \ldots ]$. For any such $x,$ we have

$$ \begin{align*} \Phi(x) &= \sum_{i=1}^{m_{0}} \left( \eta_{0}(x) \dots \eta_{i-1}(x) \right)^{\nu} \cdot u(\eta_{i}(x)) + \sum_{i=m_{0}+1}^{\infty} \left( \varphi^{\nu} \right)^{i-1} \cdot u(\varphi) \\ &= \sum_{i=1}^{m_{0}} \left( \eta_{0}(x) \dots \eta_{i-1}(x) \right)^{\nu} \cdot u(\eta_{i}(x)) + \frac{\varphi^{\nu \cdot m_{0}}}{1-\varphi^{\nu}} \cdot u(\varphi). \end{align*} $$

Since u is computable on each number $\eta _{i}(x)$ whose symbolic representation ends in all ones by assumption (iv) on u, and each $\eta _{j}$ is computable on all of $\Lambda $ by assumption (vi) on G, there is an oracle TM which, given access to x, computes the sum on the left to an arbitrary precision. The construction of this oracle TM is independent of x and depends only on $m_{0}$. Additionally, since $\varphi $ also ends in all ones, there is a TM which computes the value of the term on the right to an arbitrary precision, also depending only on $m_{0}$. Combining these TMs in the obvious way, we obtain an oracle TM which, given access to x, computes $\Phi (x)$ to an arbitrary precision.
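As a concrete illustration of the splitting used in this proof, the following sketch evaluates Yoccoz's Brjuno function on a number whose continued fraction expansion is eventually all ones. It is only a floating-point sketch under stated assumptions, not a certified-precision algorithm: it assumes G is the Gauss map, $u(x) = \log (1/x)$ and $\nu = 1$, it uses the convention ${{\mathcal B}}(x) = \sum _{n \geq 0} x_{0} \cdots x_{n-1} \log (1/x_{n})$ with $x_{0} = x$ and $x_{n+1} = G(x_{n})$, and the helper names `tails` and `brjuno_eventually_ones` are hypothetical. Once the orbit reaches $\varphi $, the remaining terms form a geometric series, which is summed in closed form.

```python
import math

# PHI = [1, 1, 1, ...] is the fixed point of the Gauss map: 1 / (1 + PHI) == PHI.
PHI = (math.sqrt(5) - 1) / 2


def tails(digits):
    """Return [x_0, ..., x_m], where x_j = [a_{j+1}, ..., a_m, 1, 1, ...]."""
    xs = [PHI]
    for a in reversed(digits):
        xs.append(1.0 / (a + xs[-1]))
    xs.reverse()
    return xs


def brjuno_eventually_ones(digits):
    """Brjuno sum for x = [a_1, ..., a_m, 1, 1, ...] (assumed Gauss-map setting)."""
    xs = tails(digits)
    total, prod = 0.0, 1.0
    # Finite part: the terms with n < m, computed one by one.
    for x in xs[:-1]:
        total += prod * math.log(1.0 / x)
        prod *= x
    # Tail: x_n = PHI for all n >= m, so the terms form a geometric series.
    total += prod * math.log(1.0 / PHI) / (1.0 - PHI)
    return total


value = brjuno_eventually_ones([3, 2])
```

Padding the digit list with extra ones leaves the value unchanged up to rounding, which is a quick consistency check of the closed-form tail.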

Lemma 4.5 Given an initial segment

![]() $I = [a_{0}, a_{1}, \ldots , a_{n}]$

and

$I = [a_{0}, a_{1}, \ldots , a_{n}]$

and

![]() $m_{0}>0$

, write

$m_{0}>0$

, write

![]() $\omega = [a_{0}, a_{1}, \ldots , a_{n}, 1, 1, \ldots ]$

. Then, for all

$\omega = [a_{0}, a_{1}, \ldots , a_{n}, 1, 1, \ldots ]$

. Then, for all

![]() $\varepsilon>0$

, we can uniformly compute

$\varepsilon>0$

, we can uniformly compute

![]() $m>m_{0}$

and

$m>m_{0}$

and

![]() $t, N \in \mathbb {Z}^{+}$

such that if we write

$t, N \in \mathbb {Z}^{+}$

such that if we write

![]() $\beta = [a_{0}, a_{1}, \ldots , a_{n}, 1, 1, \ldots , 1, N , 1, 1, \ldots ]$

, where N is in the

$\beta = [a_{0}, a_{1}, \ldots , a_{n}, 1, 1, \ldots , 1, N , 1, 1, \ldots ]$

, where N is in the

![]() $(n+m)$

-th position, we have

$(n+m)$

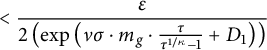

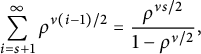

-th position, we have

and for any

![]() $\gamma = [a_{0}, a_{1}, \ldots , a_{n}, 1, 1, \ldots , 1, N , 1, \ldots , 1, c_{n+m+t+1}, c_{n+m+t+2}, \ldots ]$

, we have

$\gamma = [a_{0}, a_{1}, \ldots , a_{n}, 1, 1, \ldots , 1, N , 1, \ldots , 1, c_{n+m+t+1}, c_{n+m+t+2}, \ldots ]$

, we have

Proof We will first show that such $m, N$ exist, then give an algorithm to compute them. Let $\varepsilon>0$ be given. By Lemma 4.1, there exist m (which we can take to satisfy $m>m_{0}$) and $N \in \mathbb {Z}^{+}$ such that

Taking $I'=[a_{0}, a_{1}, \ldots , a_{n}, 1, 1, \ldots , 1, N]$ and $\omega '=\beta $, applying Lemma 4.2 with $\varepsilon '=2^{-n}$, we get $t_{0}>0,$ which can be computed from $(a_{0}, a_{1}, \ldots , a_{n}, 1, 1, \ldots , 1, N)$, such that for all $t \geq t_{0}$ and any tail $I = [c_{n+m+t+1}, c_{n+m+t+2}, \ldots ]$, we have

as needed.

Since $\omega , \beta $ have symbolic representations ending in all ones, for any specific $m,N,$ we can compute $\Phi (\omega )$ and $\Phi (\beta )$ by Proposition 4.4. So, we can find the required $m,N$ by enumerating all pairs $(m,N)$ and exhaustively checking equations (4.1) and (4.2) for each of them. We know that we will eventually find a pair for which these equations hold. Once we have m and N, we can use Lemma 4.2 to compute t.
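The search in the second half of this proof is an unbounded enumeration over pairs $(m,N)$. A minimal sketch of that search pattern is given below. The predicate `holds` is a hypothetical stand-in for the check of (4.1) and (4.2), which the sketch does not implement; it is also assumed here to be a decidable test, whereas checking strict inequalities between computable reals in general requires interleaving the enumeration with increasing precision.

```python
from itertools import count, islice


def pairs():
    """Enumerate all pairs (m, N) of positive integers, one anti-diagonal at a time."""
    for total in count(2):
        for m in range(1, total):
            yield m, total - m


def search(holds):
    """Return the first enumerated pair satisfying `holds`.

    Terminates only if some pair satisfies the predicate, mirroring the
    existence guarantee that the proof takes from Lemma 4.1.
    """
    for m, n in pairs():
        if holds(m, n):
            return m, n


# Toy predicate, used only to exercise the search.
found = search(lambda m, n: m * n == 12 and m > n)
```

Enumerating along anti-diagonals guarantees that every pair is reached after finitely many steps, which is all the argument needs.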

Lemma 4.6 The infimum $\Phi (x_{*})$ of $\Phi (x)$ over all $x \in \Lambda $ is equal to the infimum over the numbers whose symbolic representations have only finitely many terms that are not $1$:

Proof Let $\varepsilon>0$ be given. By definition of infimum, there exists $x=[a_{1}, a_{2}, \ldots ]$ such that

Write $x_{k}=[a_{1}, a_{2}, \ldots , a_{k}, 1, 1, \ldots ]$. By Lemma 4.3, there exists m such that for $k \geq m$,

Thus, $\Phi (x_{k})< \Phi (x_{*}) + \varepsilon $, so we can make $\Phi (x_{k})$ as close to $\Phi (x_{*})$ as we need.

Now, we are given

The case of $y = y_{*}$ is trivial, so we suppose $y> y_{*}$. Then, there is an s and an $\varepsilon>0$ such that

By Lemma 4.6, there exists $\gamma _{0}=[a_{1}, a_{2}, \ldots , a_{n}, 1, 1, \ldots ]$ such that

We will now give an algorithm for computing a number $x \in \Lambda $ for which $\Phi (x) = \lim \nearrow y_{n} = y$, which would complete the proof of Theorem 3.2. The algorithm works as follows. At stage $k,$ it produces a finite initial segment $I_{k}=[a_{0}, \ldots , a_{k}]$ such that the following properties hold:

- (1) $I_{0} = [a_{1}, a_{2}, \ldots , a_{n}]$.
- (2) $I_{k}$ has at least k terms, i.e., its length $m_{k}$ satisfies $m_{k} \geq k$.
- (3) For each k, $I_{k+1}$ is an extension of $I_{k}$.
- (4) For each k, define $\gamma _{k}=[I_{k}, 1, 1, \ldots ]$. Then,

$$ \begin{align*} y_{s+k} - 2^{-k} \varepsilon < \Phi(\gamma_{k}) < y_{s+k} - 2^{-(k+1)} \varepsilon. \end{align*} $$

- (5) For each k, $\Phi (\gamma _{k}) < \Phi (\gamma _{k+1})$.
- (6) For each k and any extension $\beta = [I_{k}, b_{m_{k}+1}, b_{m_{k}+2}, \ldots ]$, we have

$$ \begin{align*} \Phi(\beta)> \Phi(\gamma_{k})-2^{-k}. \end{align*} $$

The first three properties are easy to verify. The last three are checked using Lemma 4.5. By this lemma, we can increase $\Phi (\gamma _{k-1})$ by any given amount, possibly in more than one step, by extending $I_{k-1}$ to $I_{k}$. Thus, if we have

by virtue of $\{ y_{s+k} \}_{k=1}^{\infty }$ being non-decreasing, we have both

So, we can increase $\Phi (\gamma _{k-1})$ by such a fine amount that

satisfying the fourth and fifth properties. In performing this fine increase, we have used the fact that the $y_{s+k}$’s are computable. The last property is satisfied by Lemma 4.5 (4.2).

Denote

The symbolic representation of x is the limit of the initial segments $I_{k}$. This algorithm gives us at least one term of the symbolic representation of x per iteration, and hence we would need at most $O(n)$ iterations to compute x with precision $2^{-n}$. The initial segment of $\gamma _{0}$ can also be computed as in the proof of Lemma 4.5. It remains to show that x is the number we are after.

Lemma 4.7 We have $\Phi (x) = y$.

Proof Taking limits on all sides of (4), we get

It remains to show $\lim _{k\to \infty } \Phi (\gamma _{k}) = \Phi (x)$. As in Proposition 4.4, denote $x = [a_{1}, a_{2}, \ldots ]$ and let $\alpha _{k}=[a_{k}, a_{k+1}, \ldots ], \alpha _{0}=1$. Let $\varphi = [1, 1, 1, \ldots ]$, and additionally for any number $\xi = [b_{1}, b_{2}, \ldots ] \in \Lambda $ denote $(\xi )_{k} = [b_{k}, b_{k+1}, \ldots ]$. We have

$$ \begin{align*} \Phi(x) &= \lim_{k\to\infty} \left( \sum_{n=1}^{m_{k}} \alpha_{0} \alpha_{1} \dots \alpha_{n-1} \cdot u(\alpha_{n}) \right) \\ &\leq \lim_{k\to\infty} \left( \sum_{n=1}^{m_{k}} \alpha_{0} \alpha_{1} \dots \alpha_{n-1} \cdot u(\alpha_{n}) + \sum_{n=m_{k}+1}^{\infty} \varphi^{n-1} \cdot u(\varphi) \right) \\ &= \lim_{k\to\infty} \Phi(\gamma_{k}). \end{align*} $$

Additionally, taking limits on both sides of (6) with $\beta = x$ yields

therefore $\lim _{k\to \infty } \Phi (\gamma _{k}) = \Phi (x)$.

This concludes the proof of Theorem 3.2.

5 Generalized non-computability result

5.1 Modified assumptions

We will now prove a non-computability result about a slightly broader class of generalized Brjuno functions than the one considered in Section 3. For this result, we require all the assumptions on G except (v) and (vi), and on u we require only assumption (i) together with a slightly weaker variation of assumption (iii):

- (iii′) There is some $C>0$ such that $u'(x) < \dfrac {C}{(x-s_{0})^{2}}$ for all $x \in (s_{0}, s_{1})$.

We can additionally allow for more flexibility in the definition of the generalized Brjuno function by adding a “sign” term:

where $\nu>0$ as before and $s(i) \in \{-1, 1\}$.

Theorem 5.1 The following functions restricted to their corresponding sets $\Lambda $ fall under the definition of a generalized Brjuno function given above:

- Yoccoz’s Brjuno function ${{\mathcal B}}$ (2.1).
- The first Wilton function ${{\mathcal W}}_{1}$ (2.2) and the second Wilton function ${{\mathcal W}}_{2}$ (2.3).
- The functions ${{\mathcal B}}_{\alpha , u, \nu }$ (2.5) under the additional assumption of (iii′) on u. As before, taking $G = A_{\alpha }$ for $\alpha \in [1/2,1]$ and $u(x)$ to be any of $\log ^{n}(1/x)$ for $n \in \mathbb {Z}$ or $x^{-1}$ yields a generalized Brjuno function.

The proof is completely analogous to the proof of Theorem 3.1 and will be omitted.

5.2 Noncomputability result

We proceed with the main result of this section, which concerns the computability of the function $\Phi $ as opposed to the computability of real numbers.

Theorem 5.2 There exists a number $x \in \Lambda $ for which $\Phi (\cdot )$ as defined above is not computable at x.

Without loss of generality, we will assume in what follows that the sign term satisfies $s(i) = 1$ infinitely often (otherwise, just replace $\Phi $ with $-\Phi $).

As before, it will be instructive to first go over the strategy of the proof. This outline is rough and is not fully logically sound; however, it captures the main idea of the argument. To prove a function is non-computable at a single point x, it suffices to enumerate all oracle TMs $M^{\phi }_{i}$, $i \in \mathbb {N}$ (recall that there are countably many oracle TMs), and show that if $\phi $ is any oracle for $x,$ then $M^{\phi }_{i}$ does not approximate $\Phi (x)$ arbitrarily well.

We start with $x_{0} = [1, 1, 1, \ldots ]$ and the first TM $M^{\phi }_{n_{1}}$ in our enumeration which computes $\Phi (x_{0})$. If any digit $a_{j}$ in the symbolic representation of $x_{0}$ is changed to some $N \in \mathbb {Z}^{+}$, then as $N \to \infty ,$ the series $\Phi (x)$ diverges. However, if we change some digit $a_{j}$ far enough in the representation of $x_{0}$, then for any $N,$ the value of $x_{0}$ changes by at most some fixed small amount $\varepsilon _{j}$ which goes to $0$ as $j \to \infty $. So, the idea is to define $x_{1}$ from $x_{0}$ by changing $a_{j_{1}}$ for large enough $j_{1}$ to some large enough $N_{1}$, such that if $M^{\phi }_{n_{1}}$ is given an oracle for $x_{1}$, then it does not properly compute $\Phi (x_{1})$, which in some sense “fools” the oracle TM $M^{\phi }_{n_{1}}$. To fool the machine $M^{\phi }_{n_{2}}$, we then change a digit in position $j_{2}>j_{1}$, sufficiently far in the symbolic representation of $x_{1}$, to a large $N_{2}$ to get $x_{2}$, in such a way that neither $M^{\phi }_{n_{2}}$ nor any other $M^{\phi }_{k}$ for $k < n_{2}$ properly computes $\Phi (x_{2})$. Continuing in this manner, we will arrive at a limiting number $x_{\infty } \in \Lambda $, with $\Phi (x_{\infty }) < \infty $ and such that none of the oracle TMs $M^{\phi }_{i}$ in our list properly computes $\Phi (x_{\infty })$.
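The two competing effects in this outline can be observed numerically in the classical case. The sketch below assumes Yoccoz's Brjuno function (G the Gauss map, $u(x) = \log (1/x)$, $\nu = 1$, no sign term), works in floating point only, and uses hypothetical helper names (`tails`, `brjuno`, `perturb`). It replaces the digit in position $j = 20$ of $[1, 1, 1, \ldots ]$ by a large N and reports how far the number moves and how the function value changes.

```python
import math

PHI = (math.sqrt(5) - 1) / 2  # [1, 1, 1, ...]


def tails(digits):
    """Return [x_0, ..., x_m], where x_k = [a_{k+1}, ..., a_m, 1, 1, ...]."""
    xs = [PHI]
    for a in reversed(digits):
        xs.append(1.0 / (a + xs[-1]))
    xs.reverse()
    return xs


def brjuno(digits):
    """Brjuno sum of [a_1, ..., a_m, 1, 1, ...] with a closed-form tail."""
    xs = tails(digits)
    total, prod = 0.0, 1.0
    for x in xs[:-1]:
        total += prod * math.log(1.0 / x)
        prod *= x
    return total + prod * math.log(1.0 / PHI) / (1.0 - PHI)


def perturb(j, N):
    """Digits of [1, ..., 1, N, 1, 1, ...] with N in position j."""
    return [1] * (j - 1) + [N]


j = 20
for N in (1, 10**12, 10**24):
    d = perturb(j, N)
    # Columns: N, distance of the perturbed number from PHI, Brjuno value.
    print(N, abs(tails(d)[0] - PHI), brjuno(d))
```

In this setting the distance from $\varphi $ stays small for every N because the first $j-1$ digits are shared, while the function value keeps growing with N. This is the mechanism that lets the construction change $\Phi $ noticeably without an oracle machine, which only reads finitely many bits of its input, being able to detect the change.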

As in the proof of Theorem 3.2, we will need to carefully control the value of $\Phi (x)$ from the symbolic expansion of x. For the proof of Theorem 5.2, we only need Lemma 4.1 from the previous section, which is proven in the Appendix under the weaker assumptions on $\Phi $ used in this section.

For the proofs below, we will say that $\Phi $ is computable at x if there exists an oracle TM $M^{\phi }$ such that if $\phi $ is an oracle for x, then on input n, $M^{\phi }$ outputs some $y'$ for which $|\Phi (x)-y'| \leq 2^{-n}$. This definition uses “$\leq $” instead of the “$<$” which is used in the definition given in Section 2, but it is easy to see that the two definitions are equivalent.
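To make the oracle model in this definition concrete, here is a small sketch with exact rational arithmetic. It assumes an oracle is modeled as a function that, on input n, returns a dyadic rational within $2^{-n}$ of x; the names `sqrt2_oracle` and `square_machine` are hypothetical, and the function $x \mapsto x^{2}$ is chosen only because its error analysis is elementary, not because it appears in the paper.

```python
from fractions import Fraction
from math import isqrt


def sqrt2_oracle(n):
    """Return a dyadic rational d with |d - sqrt(2)| < 2**-n."""
    scale = 2 ** (n + 1)
    # floor(sqrt(2) * scale) / scale lies within 1/scale = 2**-(n+1) of sqrt(2).
    return Fraction(isqrt(2 * scale * scale), scale)


def square_machine(oracle, n):
    """Approximate x**2 to within 2**-n using oracle access to x.

    Assumes |x| <= 2. One query at precision n + 3 suffices, because
    |d*d - x*x| = |d - x| * |d + x| < 2**-(n+3) * 5 < 2**-n.
    """
    d = oracle(n + 3)
    return d * d


approx = square_machine(sqrt2_oracle, 10)
```

The machine queries the oracle at a single finite precision that depends only on n. A machine that is computable at x in the sense above can likewise inspect only finitely many oracle answers before producing each output.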

Before starting the proof, we need the following elementary fact.

Lemma 5.3 Write any number in $\Lambda $ as $\omega = [a_{1}, a_{2}, \ldots ]$. For any $\varepsilon> 0$, there is an $L>0$ for which $n>L$ implies that for any sequence of natural numbers $(N_{0}, N_{1}, \ldots )$,

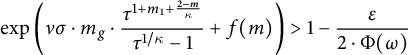

Proof Let

![]() $M>0$

be large enough that

$M>0$

be large enough that

![]() $\dfrac {s_{1}-s_{0}}{\tau ^{M}} < \varepsilon $

and let

$\dfrac {s_{1}-s_{0}}{\tau ^{M}} < \varepsilon $

and let

![]() $L = \kappa \cdot M$

, where

$L = \kappa \cdot M$

, where

![]() $\kappa , \tau $

are from assumption (ii) on G. Noting that

$\kappa , \tau $

are from assumption (ii) on G. Noting that

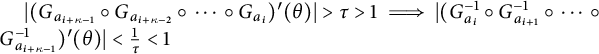

and

$|(G_{a_{i+\kappa -1}} \circ G_{a_{i+\kappa -2}} \circ \cdots \circ G_{a_{i}})'(\theta )|> \tau > 1 \implies |(G_{a_{i}}^{-1} \circ G_{a_{i+1}}^{-1} \circ \cdots \circ G_{a_{i+\kappa -1}}^{-1})'(\theta )| < \frac {1}{\tau } < 1$

, as well as

$|(G_{a_{i+\kappa -1}} \circ G_{a_{i+\kappa -2}} \circ \cdots \circ G_{a_{i}})'(\theta )|> \tau > 1 \implies |(G_{a_{i}}^{-1} \circ G_{a_{i+1}}^{-1} \circ \cdots \circ G_{a_{i+\kappa -1}}^{-1})'(\theta )| < \frac {1}{\tau } < 1$

, as well as

![]() $|(G_{a_{i}}^{1})'(\theta )| < 1$

from (ii), we have

$|(G_{a_{i}}^{1})'(\theta )| < 1$

from (ii), we have

$$ \begin{align*} &\text{length}((G_{a_{1}}^{-1} \circ \cdots \circ G_{a_{n-1}}^{-1})((s_{0}, s_{1}))) \\ &\quad \leq \left( \prod_{i=0}^{M-1} \sup_{\theta \in \Lambda} |(G_{a_{i\kappa+1}}^{-1} \circ \cdots \circ G_{a_{i\kappa+\kappa}}^{-1})'(\theta)| \right) \cdot \left( \prod_{j=L+1}^{n-1} \sup_{\theta \in \Lambda} |(G_{a_{j}}^{-1})'(\theta)| \right) \cdot (s_{1} - s_{0}) \\ &\quad < \dfrac{s_{1}-s_{0}}{\tau^{M}} < \varepsilon. \end{align*} $$

$$ \begin{align*} &\text{length}((G_{a_{1}}^{-1} \circ \cdots \circ G_{a_{n-1}}^{-1})((s_{0}, s_{1}))) \\ &\quad \leq \left( \prod_{i=0}^{M-1} \sup_{\theta \in \Lambda} |(G_{a_{i\kappa+1}}^{-1} \circ \cdots \circ G_{a_{i\kappa+\kappa}}^{-1})'(\theta)| \right) \cdot \left( \prod_{j=L+1}^{n-1} \sup_{\theta \in \Lambda} |(G_{a_{j}}^{-1})'(\theta)| \right) \cdot (s_{1} - s_{0}) \\ &\quad < \dfrac{s_{1}-s_{0}}{\tau^{M}} < \varepsilon. \end{align*} $$

In particular, we have the following.

Corollary 5.4 For $\omega = [a_{1}, a_{2}, \ldots ]$ as above, for any $\varepsilon> 0$, there is an $L>0$ for which $n>L$ implies that $\forall N \in \mathbb {N}$,

Before proceeding to the proof of the main result, we first define some notation. For any $x_{i} = [a_{1}^{i}, a_{2}^{i}, \ldots ],$ let $\eta _{k}^{i}=[a_{k}^{i}, a_{k+1}^{i}, \ldots ]$, noting that

where $\nu , \rho>0$ and $s(n) \in \{-1, 1\}$ with $s(n) = 1$ infinitely often. Let

$$ \begin{align*} f(i,k)=\sum_{n=1}^{k}s(n) \cdot \left( \eta_{0}^{i} \eta_{1}^{i} \dots \eta_{n-1}^{i} \right)^{\nu} \cdot u(\eta_{n}^{i}), \end{align*} $$

noting that $\lim _{k \to \infty }f(i, k) = \Phi (x_{i})$.

5.3 Proof of Theorem 5.2

We will first show inductively that there exist:

-

• nested initial segments

$I_{1} \subseteq I_{2} \subseteq \dots $

, where each

$I_{1} \subseteq I_{2} \subseteq \dots $

, where each

$I_{i}$

has length

$I_{i}$

has length

$p_{i}$

;

$p_{i}$

; -

• for each $i = 1, 2, \ldots $, positive integers $N^{i}$ and $m_{i}$;

• positive integers $k_{1} < k_{2} < \cdots $ and $l_{1} < l_{2} < \cdots $ and $\hat {a}_{1} < \hat {a}_{2} < \cdots $ and $n(0) < n(1) < n(2) < \cdots $, positive real numbers $\hat {\varepsilon }_{1}> \hat {\varepsilon }_{2} > \cdots $, and oracles $\phi _{0}, \phi _{1}, \ldots $;

such that if we let $x_{i} = [I_{i}, 1, \ldots , 1, N^{i}, 1, \ldots ]$ for $i \in \mathbb {Z}^{+}$, where $N^{i}$ is in the $(m_{i}+p_{i})$-th position, then we have the following:

(1) $\phi _{i}$ is an oracle for $x_{i}$ such that $|\phi _{i}(n) - x_{i}| < 2^{-(n+1)}$ for all n.

(2) $\phi _{i}$ agrees with the oracle $\phi _{j-1}$ on inputs $1, 2, \ldots , k_{j}$ for $j=1, 2, \ldots , i$.

(3) Running $M_{l_{i}}^{\phi _{i}}(1), M_{l_{i}}^{\phi _{i}}(2), \ldots , M_{l_{i}}^{\phi _{i}}(\hat {a}_{i})$ queries $\phi _{i}$ with parameters not exceeding $k_{i}$.

(4) Running $M_{l_{i}}^{\phi _{i}}(\hat {a}_{i})$ yields a number $A_{l_{i}}$ for which

$$ \begin{align*} A_{l_{i}} + 2^{-\hat{a}_{i}} \leq \Phi(x_{i-1}) + 2^{-\hat{a}_{i}+1} < \Phi(x_{i}) < \Phi(x_{i-1}) + 2 \cdot 2^{-\hat{a}_{i}+1}. \end{align*} $$

(5) Running $M_{l_{j}}^{\phi _{i}}(\hat {a}_{j})$ for $j = 1, 2, \ldots , i$ yields a number $B_{l_{j}}$ for which $B_{l_{j}} + 2^{-\hat {a}_{j}} < \Phi (x_{i})$.

(6) None of the TMs $M^{\phi _{i}}_{k}$ for $k \in \{1, \ldots , l_{i}\}$ properly computes $\Phi (x_{i})$; in particular, each of them computes $\Phi (x_{i})$ with an error of at least $\hat {\varepsilon }_{i}$.

(7) For $k\geq n(i)$, we have $|f(i,k)-\Phi (x_{i})| < 2^{-i}$.

(8) For $k = 1, 2, \ldots , n(i-1)$, we have $|f(i,k)-f(i-1,k)|<2^{-i}$.
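Condition (1) asks for an oracle whose $n$-th answer lies within $2^{-(n+1)}$ of the target. A minimal Python sketch of such an oracle for the constant continued fraction $[1, 1, 1, \ldots ]$ follows. It assumes the bracket denotes $1/(1 + 1/(1 + \cdots )) = (\sqrt {5}-1)/2$; the name `phi0` and the rounding scheme are illustrative only and are not the construction used in the proof.

```python
from fractions import Fraction

def phi0(n):
    """Return a dyadic rational d with |d - x0| < 2**-(n+1),
    where x0 = 1/(1 + 1/(1 + ...)) (assumed reading of [1, 1, 1, ...])."""
    scale = 2 ** (n + 3)
    # Convergents p/q of x0 are ratios of consecutive Fibonacci numbers, and
    # |x0 - p/q| < 1/(q * q_next), where q_next = p + q is the next denominator.
    p, q = 0, 1
    while q * (p + q) < scale:
        p, q = q, p + q
    convergent = Fraction(p, q)  # now |x0 - convergent| < 2**-(n+3)
    # Round to the nearest multiple of 2**-(n+3); this adds at most 2**-(n+4).
    return Fraction(round(convergent * scale), scale)

x0 = (5 ** 0.5 - 1) / 2  # floating-point reference value, for checking only
for n in (1, 5, 10):
    d = phi0(n)
    print(n, d, abs(float(d) - x0) < 2 ** -(n + 1))
```

The total error is below $2^{-(n+3)} + 2^{-(n+4)} < 2^{-(n+1)}$, and the returned denominator is a power of two, so each answer is a dyadic rational.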

5.3.1 Base case

There are countably many oracle TMs $M^{\phi }$, where $\phi $ represents an oracle for x, so we can order them as $M_{1}^{\phi }, M_{2}^{\phi }, \ldots $. Let $x_{0} = [1, 1, 1, \ldots ]$. Given an oracle $\phi _{0}$ for $x_{0}$ such that $|\phi _{0}(n') - x_{0}| < 2^{-(n'+1)}$ for all $n'$, let $M_{l_{1}}^{\phi _{0}}$ be the first TM to compute $\Phi (x_{0})$ (if no such TM exists, we are done). Since this is the first such TM, all of $M_{1}^{\phi _{0}}, M_{2}^{\phi _{0}}, \ldots , M_{l_{1}-1}^{\phi _{0}}$ do not properly compute $\Phi (x_{0})$