1. Introduction

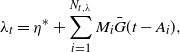

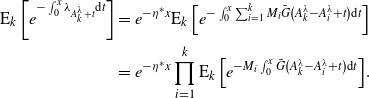

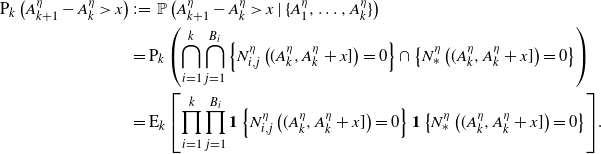

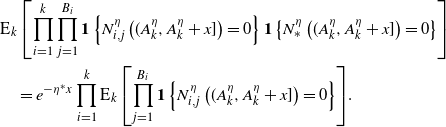

What’s past is prologue: unavoidably, the present is shaped by what has already occurred. The current state of the world is indebted to our history. Our actions, behaviors, and decisions are both precursory and prescriptive to those that follow, and this can be observed across a variety of scenarios. For example, the spread of an infectious disease is accelerated as more people become sick and dampened as they recover. In finance, a flurry of recent transactions can prompt new buyers or sellers to enter a market. On social media platforms, as more and more users interact with a post, it can become trending or viral and thus be broadcast to an even larger audience.

Self-exciting processes are an intriguing family of stochastic models in which the history of events influences the future. The paper [Reference Hawkes36] introduced the concept of self-excitement—defining what is now known as the Hawkes process, a model in which ‘the current intensity of events is determined by events in the past’. That is, the Hawkes process is a stochastic-intensity point process that depends on the history of the point process itself. The rate of new event occurrences increases as each event occurs. As time passes between occurrences, the intensity is governed by a deterministic excitement kernel. Most often, this kernel is specified so that the intensity jumps upward at event epochs and strictly decreases in the interim. In this way, occurrences beget occurrences; hence the term ‘self-exciting’. Unlike in the Poisson process, disjoint increments are not independent in sample paths of the Hawkes process. Instead, they are positively correlated and, by definition, the events of the former influence the events of the latter. Furthermore, the Hawkes process is known to be over-dispersed—meaning that its variance is larger than its mean—which is commonly found in real-world data, whereas the Poisson process has equal mean and variance.

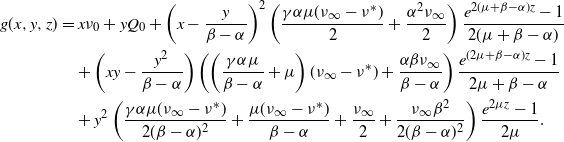

Because of the practical relevance of these model features, self-exciting processes have been used in a wide variety of applications, many of which are quite recent additions to the literature. Seismology was among the first domains to incorporate these models, for example in [Reference Ogata51], as the occurrence of an earthquake increases the risk of subsequent seismic activity in the form of aftershocks. Finance has since followed as a popular application and is now perhaps the most active area of work. In these studies, self-excitement is used to capture the often contagious nature of financial activity; see e.g. [Reference Aït-Sahalia, Cacho-Diaz and Laeven2, Reference Azizpour, Giesecke and Schwenkler4, Reference Bacry, Delattre, Hoffmann and Muzy5, Reference Bacry and Muzy7, Reference Da Fonseca and Zaatour15, Reference Errais, Giesecke and Goldberg24, Reference Gao, Zhou and Zhu28, Reference Rambaldi, Bacry and Lillo53, Reference Wu, Rambaldi, Muzy and Bacry62]. Similarly, many recent internet and social media scenarios have been modeled using self-exciting processes, drawing upon the virality of modern web traffic; see, for example, [Reference Farajtabar26, Reference Rizoiu54, Reference Rizoiu55]. Notably, this also includes the use of Hawkes processes for constructing data-driven methods in the artificial intelligence and machine learning literatures, e.g. [Reference Du22, Reference Mei and Eisner50, Reference Xu, Luo and Zha63]. In an intriguing area of work, self-excitement has also been used to model interpersonal communication, for example in application to conversation audio recordings in [Reference Masuda, Takaguchi, Sato and Yano49] or in studying email correspondence in [Reference Halpin and De Boeck34, Reference Malmgren, Stouffer, Motter and Amaral47]. Hawkes processes have also recently been used to represent arrivals to service systems in queueing models, e.g. in [Reference Daw and Pender20, Reference Gao and Zhu29, Reference Gao and Zhu30, Reference Koops, Saxena, Boxma and Mandjes41]. This is of course not an exhaustive list of works in these areas, nor is it a complete account of all the modern applications of self-excitement. Examples of other notable uses include neuroscience [Reference Krumin, Reutsky and Shoham42, Reference Truccolo57], environmental management [Reference Gupta, Farajtabar, Dilkina and Zha32], public health [Reference Zino, Rizzo and Porfiri68], movie trailer generation [Reference Xu, Zhen and Zha64], energy conservation [Reference Li and Zha44], and industrial preventative maintenance [Reference Yan65].

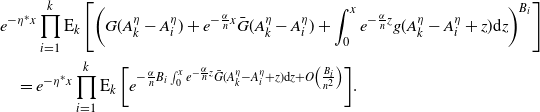

As the variety of uses for self-excitement has continued to grow, the number of Hawkes process generalizations has kept pace. By modifying the definition of the Hawkes process in some way, the works in this generalized self-exciting process literature provide new perspectives on these concepts while also empowering and enriching applications. For example, [Reference Brémaud and Massoulié13] introduces a nonlinear Hawkes process in which the intensity is a general nonnegative function of the integral over the process history, as opposed to the linear form given originally. Similarly, the quadratic Hawkes process model given by [Reference Blanc, Donier and Bouchaud11] allows for excitation kernels that have quadratic dependence on the process history, rather than simply linear. This is also an example of a generalization motivated by application, as the authors seek to capture time-reversal asymmetry observed in financial data. As another finance-motivated generalization, [Reference Dassios and Zhao17] proposes the dynamic contagion process. This model can be thought of as a hybrid between a Hawkes process and a shot-noise process, as the stochastic intensity of the model features both self-excited and externally excited jumps. The authors take motivation from an application in credit risk, in which the dynamics are shaped both by the process history and by exogenous shocks. The affine point processes studied in e.g. [Reference Errais, Giesecke and Goldberg24, Reference Zhang, Blanchet, Giesecke and Glynn66, Reference Zhang, Glynn, Giesecke and Blanchet67] are also motivated by credit risk applications. The models in these works combine the self-exciting dynamics of the Hawkes process with those of an affine jump-diffusion process, embedding modeling concepts of feedback and dependency into the process intensity. An exact simulation procedure for the Hawkes process with Cox–Ingersoll–Ross (CIR) intensity, a generalization of the Hawkes process that is a special case of the affine point process, is shown in [Reference Dassios and Zhao18]. In that case, the authors discuss an application to portfolio loss processes.

There have also been several Hawkes process generalizations proposed in social media and data analytics contexts. For example, [Reference Rizoiu54] introduces a finite-population Hawkes process that couples self-excitement dynamics with those of the susceptible–infected–recovered (SIR) process. Drawing upon the use of the SIR process for the spread of both disease and ideas, the authors propose this SIR–Hawkes process as a method of studying information cascades. Similarly, [Reference Mei and Eisner50] introduces the neural Hawkes process as a new point process model in the machine learning literature. As the name suggests, this model combines self-excitement with concepts from neural networks. Specifically, a recurrent neural network effectively replaces the excitation kernel, governing the effect of the past events on the rate of future occurrences. In the literature for Bayesian nonparametric models, [Reference Du22] presents the Dirichlet–Hawkes process for topic clustering in document streams. In this case, the authors combine a Hawkes process and a Dirichlet process, so that the intensity of the stream of new documents is self-exciting while the type of each new document is determined by the Dirichlet process, leading to a preferential attachment structure among the document types.

In this paper, we propose the ephemerally self-exciting process (ESEP), a novel generalization of the Hawkes process. Rather than regulating the excitement through the gradual, deterministic decay provided by an excitation kernel function, we instead incorporate randomly timed down-jumps. We will refer to this random length of time as the arrival’s activity duration. The down-jumps are equal in size to the up-jumps, and between events the arrival rate does not change. Thus, this process increases in arrival rate upon each occurrence, and these increases are then mirrored some time later by decreases in the arrival rate once the activity duration expires. In this way, the self-excitement is ephemeral: it is only in effect as long as the excitement is active. Much of the body of this work will discuss how this ephemeral, piecewise constant self-excitement compares to the eternal but ever-decaying notion from Hawkes’s original definition. As we will see in our analysis, this new process is both a promising model of self-excitement and an explanation of its origins in natural phenomena.

1.1. Practical relevance

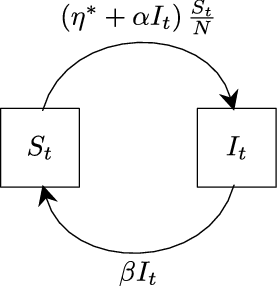

While this paper will not be focused on any one application, in this subsection we summarize several domain areas in which the models in this work can be applied. A natural example is in public health and the management of epidemics. For example, consider influenza. When a person becomes sick with the flu, she increases the rate of spread of the virus through her contact with others. This creates a self-exciting dynamic in the spread of the virus. However, a person only spreads a disease as long as she is contagious; once she has recovered she no longer has a direct effect on the rate of new infections. From a system-level perspective, the ESEP can thus be thought of as modeling the arrivals of new infections, capturing the self-exciting and ephemeral nature of sick patients. This motivates the use of this model as an arrival process to queueing models for healthcare, as the rate of arrivals to clinics serving patients with infectious diseases should depend on the number of people currently infected. The healthcare service can also be separately modeled, as an infinite-server queue may be a fitting representation for the number of infected individuals but the clinic itself likely has limited capacity. This concept of course extends to the modeling and management of any other viral disease, including the novel coronavirus that has caused the COVID-19 pandemic.

Of course, epidemic models need not be applied exclusively to disease spread. These same ideas can be used for information spread and product adoption, such as in the aforementioned Hawkes-infused models in [Reference Rizoiu54, Reference Zino, Rizzo and Porfiri68]. In these contexts, one can think of the duration in system as being the time a person actively promotes a concept or product. A single person only affects the self-excitement of the idea or product spread as long as she is in the system, which distinguishes this model from those in the aforementioned works. Epidemic models have also been used to study social issues, such as the contagious nature of imprisonment demonstrated by [Reference Lum, Swarup, Eubank and Hawdon46]. We discuss the relevance of ephemeral self-excitement for epidemics in detail in Subsection 3.3 by relating this model to the susceptible–infected–susceptible (SIS) process through a convergence in distribution. In fact, throughout Section 3 we establish connections from this process to other relevant stochastic models. This includes classical processes such as branching processes and random walks, as well as models popular both in Bayesian nonparametrics and in preferential attachment settings, such as the Dirichlet process and the Chinese restaurant process (CRP).

In the context of service systems, self-excitement can be motivated by the same rationale that inspires restaurants to seat customers at the tables by the windows. Potential new customers could choose to dine at the establishment because they can see others already eating there, taking an implicit recommendation from those already being served. This same example also motivates the ephemerality. After a customer seated by the window finishes her dinner and departs, any passing potential patron only sees an empty table; the implicit recommendation vanishes with the departing customer. A similar dynamic can be observed in online streaming platforms. For example, on popular music streaming services like Spotify and Apple Music, users can see what songs and albums have been recently played by their friends. If a user sees that many of her friends have listened to the same album recently, she may be more inclined to listen to it as well. However, this applies only as long as the word ‘recently’ does. If her friends don’t play the album within a certain amount of time, the platform will no longer promote the album to her in that fashion. Again, this displays the ephemerality of the underlying self-excitement: the album grows more attractive as more users listen to it, but only as long as those listens are ‘recent’ enough.

In finance, limit order books (LOBs) are among the many concepts that have been modeled using Hawkes processes, for example in [Reference Bacry, Jaisson and Muzy6, Reference Rambaldi, Bacry and Lillo53]. LOBs have also been studied through queueing models, where one can model the state of the LOB (or, more specifically, the number of unresolved bids and asks) as the length of a queueing process. Moreover, there has been recent work that models this process as not just a queue, but a queue with Hawkes process arrivals; for example, see [Reference Gao and Zhu29, Reference Guo, Ruan and Zhu31]. Conceptually, the self-excitement may arise from traders reacting to the activity of other traders, creating runs of transactions. However, the desire not to act on stale information may mean that this excitement lasts only as long as trades are actively being conducted. In fact, the idea of the self-excitement in LOB models being ‘queue-reactive’ has very recently been considered in [Reference Wu, Rambaldi, Muzy and Bacry62], a work related to this one.

One can also consider failures in a mechanical system as an application of this model. For example, consider a network of water pipes. When one pipe breaks or bursts, it can place stress on the pipes connected to it. This stress may then cause further failures within the pipe network. However, once the pipe is properly repaired, it should no longer place strain on the surrounding components. Thus, the increase in pipe failure rate caused by a failure is only in effect until the repair occurs, inducing ephemeral self-excitement. The self-excitement (albeit without the ephemerality) arising in this scenario was modeled using Hawkes processes in [Reference Yan65], which includes an empirical study. A similar problem for electrical systems is considered in [Reference Ertekin, Rudin and McCormick25]. The reactive point process considered in that work is perhaps the model most similar to the ones studied herein, as the rate of new power failures both increases at the prior failure times and decreases upon inspection or repair. However, a key difference is that in [Reference Ertekin, Rudin and McCormick25], the authors treat the inspection times as controlled by management, whereas in this paper the model is fully stochastic and thus the repair durations are random. Regardless, that work is an excellent example of how generalized self-exciting processes can be used to shape practical policy. Because power outages have significant and wide-reaching consequences, it is critical to understand the interdependency between electrical grid failures and the ephemeral self-excitement that arises from them.

1.2. Organization and contributions of paper

Let us now detail the remainder of this paper’s organization, as well as the contributions therein.

-

In Section 2, we define the ephemerally self-exciting process (ESEP), a Markovian model for self-excitement that lasts for only a finite amount of time. After defining the model, we develop fundamental distributional quantities and compare the ESEP to the Hawkes process.

-

In Section 3, we relate the ESEP to many other important and well-known stochastic processes. This includes branching processes, which gives us further comparisons between the Hawkes process and the ESEP, models for preferential attachment and Bayesian statistics, and epidemic models. The lattermost of these motivates the ESEP as a representation for the times of infection within an epidemic, and this also provides a formal link between the conceptually similar concepts of epidemics and self-excitement.

-

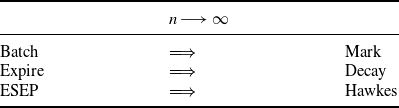

In Section 4, we broaden our exploration of ephemeral self-excitement to non-Markovian models with general activity durations and batches of arrivals. In this general setting, we establish a limit theorem providing an alternate construction of general Hawkes processes. This batch scaling limit thus yields intuition for the observed occurrence of self-excitement in natural phenomena and stands as a fundamental cornerstone for studying such processes.

In addition to these main avenues of study, we also have extensive auxiliary analysis housed in this paper’s appendix. Appendix A contains lemmas and side results that support our analysis but are outside the main narrative. In Appendix B, we explore a model that is a hybrid between the ESEP and the Hawkes process, in that it regulates the excitement with both down-jumps and decay. Appendix C is devoted to a finite-capacity version of the ESEP, in which arrivals that would put the active number in system above the capacity are blocked from occurring. Finally, Appendix D contains an algebraically cumbersome proof of a result from Section 2.

2. Modeling ephemeral self-excitement

We begin this paper by defining our ephemerally self-exciting model and conducting an initial analysis of some fundamental quantities, including the transient moment generating function and the steady-state distribution. Before doing so, though, let us first review the Hawkes process, which is the original self-exciting probability model.

2.1. Preliminary models and concepts

Introduced and pioneered through the series of papers [Reference Hawkes35–Reference Hawkes and Oakes37], the Hawkes process is a stochastic-intensity point process in which the current rate of arrivals is dependent on the history of the arrival process itself. Formally, this is defined as follows: let

![]() $(\lambda_t, N_{t,\lambda})$

be an intensity and counting process pair such that

$(\lambda_t, N_{t,\lambda})$

be an intensity and counting process pair such that

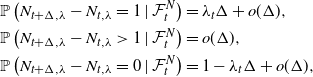

\begin{align*}\mathbb{P}\left( N_{t+\Delta,\lambda} - N_{t,\lambda} = 1 \mid \mathcal{F}^N_t \right) &= \lambda_t \Delta + o(\Delta), \\\mathbb{P}\left( N_{t+\Delta,\lambda} - N_{t,\lambda} > 1 \mid \mathcal{F}^N_t \right) &= o(\Delta), \\\mathbb{P}\left( N_{t+\Delta,\lambda} - N_{t,\lambda} = 0 \mid \mathcal{F}^N_t \right) &= 1 - \lambda_t \Delta + o(\Delta),\end{align*}

\begin{align*}\mathbb{P}\left( N_{t+\Delta,\lambda} - N_{t,\lambda} = 1 \mid \mathcal{F}^N_t \right) &= \lambda_t \Delta + o(\Delta), \\\mathbb{P}\left( N_{t+\Delta,\lambda} - N_{t,\lambda} > 1 \mid \mathcal{F}^N_t \right) &= o(\Delta), \\\mathbb{P}\left( N_{t+\Delta,\lambda} - N_{t,\lambda} = 0 \mid \mathcal{F}^N_t \right) &= 1 - \lambda_t \Delta + o(\Delta),\end{align*}

where

![]() $\mathcal{F}^N_t$

is the filtration of

$\mathcal{F}^N_t$

is the filtration of

![]() $N_{t,\lambda}$

up to time t and

$N_{t,\lambda}$

up to time t and

![]() $\lambda_t$

is given by

$\lambda_t$

is given by

where

$\lambda^* > 0$ and $g\, :\, \mathbb{R}^+ \to \mathbb{R}^+$ is such that $\int_0^\infty g(x) \textrm{d}x < 1$. Through this definition, the intensity $\lambda_t$ encapsulates the sequence of past events up to time $t$ and uses it to determine the rate of future occurrences. We refer to $\lambda^*$ as the baseline intensity and $g({\cdot})$ as the excitation kernel. The baseline intensity represents an underlying stationary arrival rate, and the excitation kernel governs the effect that the history of the process has on the current intensity. A common modeling choice is to set $g(x) = \alpha e^{-\beta x}$, where $\beta > \alpha > 0$. This is often referred to as the ‘exponential’ kernel, and it is perhaps the most widely used form of the Hawkes process. In this case, $(\lambda_t, N_{t,\lambda})$ is a Markov process obeying the stochastic differential equation

\begin{align*}\textrm{d}\lambda_t = \beta\left(\lambda^* - \lambda_t\right)\textrm{d}t + \alpha\, \textrm{d}N_{t,\lambda}.\end{align*}

That is, at arrival epochs $\lambda_t$ jumps upward by the amount $\alpha$ and $N_{t,\lambda}$ increases by 1; between arrivals $\lambda_t$ decays exponentially at rate $\beta$ towards the baseline intensity $\lambda^*$. Thus, each arrival increases the likelihood of additional arrivals occurring soon afterwards; hence, it self-excites. This form of the Hawkes process is also often stated with an initial value for $\lambda_t$, say $\lambda_0 \geq \lambda^*$. In this case, the intensity can be expressed as

\begin{align*}\lambda_t = \lambda^* + \left(\lambda_0 - \lambda^*\right)e^{-\beta t} + \alpha \int_0^t e^{-\beta (t-s)}\, \textrm{d}N_{s,\lambda}.\end{align*}

Additional overview of the Hawkes process with the exponential kernel can be found in Section 2 of [Reference Daw and Pender20]. Another common choice for the excitation kernel is the ‘power-law’ kernel $g(x) = \frac{k}{(c+x)^p}$, where $k > 0$, $c > 0$, and $p > 0$; for the condition $\int_0^\infty g(x) \textrm{d}x < 1$ to hold, one additionally needs $p > 1$ and $k < (p-1)c^{p-1}$, since then $\int_0^\infty g(x) \textrm{d}x = \frac{k\,c^{1-p}}{p-1}$. This kernel was originally popularized in seismology [Reference Ogata51].
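To make the exponential-kernel dynamics concrete, the following Python sketch simulates the process with Ogata-style thinning, a standard simulation technique that is not itself described in this paper, and checks that the intensity tracked event by event agrees with the closed form $\lambda_t = \lambda^* + (\lambda_0 - \lambda^*)e^{-\beta t} + \alpha \sum_{s \le t} e^{-\beta(t-s)}$ at every arrival. The function names, the assumption $\lambda_0 \geq \lambda^*$, and the parameter values are illustrative choices of ours.

```python
import math
import random


def closed_form_intensity(t, events, lam_star, lam0, alpha, beta):
    """Exponential-kernel intensity with initial value lam0:
    lambda_t = lam* + (lam0 - lam*) e^{-beta t} + alpha * sum_{s <= t} e^{-beta (t - s)}.
    """
    excitation = sum(math.exp(-beta * (t - s)) for s in events if s <= t)
    return lam_star + (lam0 - lam_star) * math.exp(-beta * t) + alpha * excitation


def simulate_exp_hawkes(lam_star, lam0, alpha, beta, horizon, seed=0):
    """Thinning simulation of an exponential-kernel Hawkes process on [0, horizon].

    Assumes lam0 >= lam_star > 0, so the intensity never increases between
    events and its current value bounds it until the next accepted event.
    Returns the event times and the intensity just after each event.
    """
    rng = random.Random(seed)
    t, lam_t = 0.0, lam0
    events, post_jump = [], []
    while True:
        bound = lam_t
        t_next = t + rng.expovariate(bound)
        if t_next > horizon:
            return events, post_jump
        # Deterministic decay toward the baseline lam* between t and t_next.
        lam_next = lam_star + (lam_t - lam_star) * math.exp(-beta * (t_next - t))
        t = t_next
        # Accept the candidate point with probability lam_next / bound.
        if rng.random() * bound <= lam_next:
            lam_next += alpha  # self-excitation: upward jump of size alpha
            events.append(t)
            post_jump.append(lam_next)
        lam_t = lam_next


if __name__ == "__main__":
    times, post = simulate_exp_hawkes(lam_star=1.0, lam0=1.0, alpha=0.5, beta=1.0, horizon=50.0)
    # The recursively tracked intensity should match the closed form at every event.
    worst = max((abs(lam - closed_form_intensity(s, times, 1.0, 1.0, 0.5, 1.0))
                 for s, lam in zip(times, post)), default=0.0)
    print(len(times), "events; max discrepancy:", worst)
```

Tracking the intensity recursively costs constant work per candidate point, which is the practical payoff of the Markov property of the exponential kernel; the closed form is used here only as a cross-check.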

2.2. Defining the ephemerally self-exciting process

As we have discussed in the introduction, a plethora of natural phenomena exhibit self-exciting features but only for a finite amount of time. This prompts the notion of ephemeral self-excitement. By comparison to the traditional Hawkes process reviewed in Subsection 2.1, we seek a model in which a new occurrence increases the arrival rate only so long as the newly entered entity remains active in the system. Thus, we now define the ephemerally self-exciting process (ESEP), which trades the Hawkes process’s eternal decay for randomly drawn expiration times. Moreover, in the following Markovian model, exponential decay is replaced with exponentially distributed durations. In Section 4, we extend these concepts to generally distributed activity durations. As another generalization, in Appendix B we consider a Markovian model with both decay and down-jumps. For now, we explore the effects of ephemerality through the ESEP model in Definition 1.

Definition 1.

(Ephemerally self-exciting process.) For times $t \geq 0$, a baseline intensity $\eta^* > 0$, intensity jump size $\alpha > 0$, and expiration rate $\beta > 0$, let $N_t$ be a counting process with stochastic intensity $\eta_t$ such that

\begin{align*}\eta_t = \eta^* + \alpha Q_t,\end{align*}

where $Q_t$ is incremented with $N_t$ and is then depleted by unit down-jumps occurring at rate $\beta Q_t$. We say that $(\eta_t, N_t)$ is an ephemerally self-exciting process (ESEP).

We will assume that $\eta_0$ and $Q_0$ are known initial values such that $\eta_0 = \eta^* + \alpha Q_0$. In addition to this definition, one could also describe the ESEP through its dynamics. In particular, the behavior of this process can be summarily cast through the life cycle of its arrivals:

- i. At each arrival, the arrival rate $\eta_t$ increases by $\alpha$.

- ii. Each arrival remains active for an activity duration drawn from an independent and identically distributed (i.i.d.) sequence of exponential random variables with rate $\beta$.

- iii. At the expiration of a given activity duration, $\eta_t$ decreases by $\alpha$.
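Because every clock in this life cycle is exponential, the three steps translate directly into an event-driven (Gillespie-style) simulation. The Python sketch below is our own illustration rather than a procedure given in the paper: `simulate_esep` and its arguments are hypothetical names, and it tracks $Q_t$ directly, recovering the intensity through $\eta_t = \eta^* + \alpha Q_t$.

```python
import random


def simulate_esep(eta_star, alpha, beta, q0, horizon, seed=0):
    """Event-driven simulation of the ESEP on [0, horizon].

    In state Q_t = q, the next arrival occurs at rate eta_star + alpha * q and,
    by memorylessness of the q exponential activity durations, the next
    expiration occurs at total rate beta * q. Returns the arrival epochs
    (the jumps of N_t) and the path of Q_t as (time, value) pairs.
    """
    rng = random.Random(seed)
    t, q = 0.0, q0
    arrivals, path = [], [(0.0, q0)]
    while True:
        up = eta_star + alpha * q  # current arrival rate eta_t
        down = beta * q            # rate at which some active duration expires
        t += rng.expovariate(up + down)
        if t > horizon:
            return arrivals, path
        if rng.random() * (up + down) < up:
            q += 1                 # step i: eta_t increases by alpha
            arrivals.append(t)
        else:
            q -= 1                 # step iii: eta_t decreases by alpha
        path.append((t, q))


if __name__ == "__main__":
    horizon = 1000.0
    arrivals, path = simulate_esep(eta_star=1.0, alpha=0.5, beta=1.0, q0=0, horizon=horizon)
    # Time-average of the piecewise-constant path Q_t over [0, horizon].
    area = sum(q * (t2 - t1) for (t1, q), (t2, _) in zip(path, path[1:]))
    area += path[-1][1] * (horizon - path[-1][0])
    print(len(arrivals), "arrivals; time-average of Q_t:", area / horizon)
```

Drawing a single exponential with the total rate and then choosing the event type is equivalent to racing one clock per active arrival, and it avoids storing individual expiration times.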

The ephemerality of the ESEP is embodied by this cycle. Because arrivals only contribute to the intensity for the length of their activity duration, their effect on the process’s excitation vanishes when this clock expires. Furthermore, there is an affine relationship between the number of active ‘exciters’ (meaning unexpired arrivals still causing excitation) and the intensity, i.e. $\eta_t = \eta^* + \alpha Q_t$. Thus, we could also track the arrival rate through $Q_t$ in place of $\eta_t$ without losing any information about this process. This also means that results are readily transferable between these two processes; we will often make use of this fact.

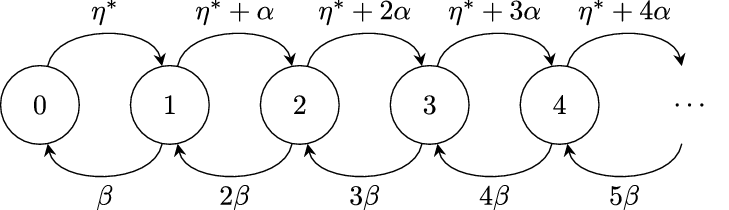

Because the ESEP is quite parsimonious, there are many alternative perspectives we could take to gain additional understanding of it. For example, one could consider

![]() $Q_t$

a Markovian queueing system with infinitely many servers and a state-dependent arrival rate. Equivalently, one could also describe the ESEP as a Markov chain on the nonnegative integers where transitions at state i are to

$Q_t$

a Markovian queueing system with infinitely many servers and a state-dependent arrival rate. Equivalently, one could also describe the ESEP as a Markov chain on the nonnegative integers where transitions at state i are to

![]() $i+1$

at rate

$i+1$

at rate

![]() $\eta^* + \alpha i$

and to

$\eta^* + \alpha i$

and to

![]() $i-1$

at rate

$i-1$

at rate

![]() $\mu i$

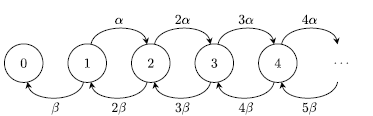

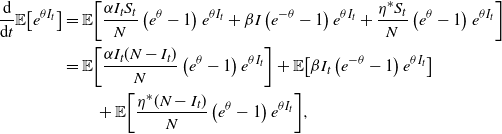

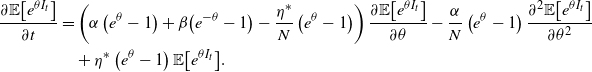

, with the counting process then defined as the epochs of the upward jumps in this chain. This Markov chain perspective certifies the existence and uniqueness of Definition 1. A visualization of this linear birth–death–immigration process is given in Figure 1. Stability for this chain occurs when

$\mu i$

, with the counting process then defined as the epochs of the upward jumps in this chain. This Markov chain perspective certifies the existence and uniqueness of Definition 1. A visualization of this linear birth–death–immigration process is given in Figure 1. Stability for this chain occurs when

![]() $\beta > \alpha$

; we will assume this henceforth, although of course it is not necessary for transient results. One could also view the ESEP as a generalization of Hawkes’s original definition where the excitation kernel function

$\beta > \alpha$

; we will assume this henceforth, although of course it is not necessary for transient results. One could also view the ESEP as a generalization of Hawkes’s original definition where the excitation kernel function

![]() $g({\cdot})$

is replaced with a randomly drawn indicator function that is different for each arrival. Each indicator function compares time to an independently drawn exponential random variable, and this perspective is closely aligned with our analysis in Section 3.

$g({\cdot})$

is replaced with a randomly drawn indicator function that is different for each arrival. Each indicator function compares time to an independently drawn exponential random variable, and this perspective is closely aligned with our analysis in Section 3.
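Because the chain in Figure 1 is a birth–death process, its stationary distribution under $\beta > \alpha$ can be obtained from detailed balance, $\pi_{i+1}\,\beta(i+1) = \pi_i\,(\eta^* + \alpha i)$. The resulting ratio $\pi_{i+1}/\pi_i = \frac{r+i}{i+1}\,p$ with $r = \eta^*/\alpha$ and $p = \alpha/\beta$ is that of a negative binomial law, whose mean $rp/(1-p)$ equals $\eta^*/(\beta-\alpha)$. The Python sketch below checks this numerically; the function names and the truncation level `n_max` are our own assumptions, and this is an illustration rather than the paper's derivation of the steady state.

```python
import math


def stationary_by_detailed_balance(eta_star, alpha, beta, n_max):
    """Stationary law of the birth-death chain, truncated at n_max.

    Builds weights from pi_{i+1} * beta * (i + 1) = pi_i * (eta_star + alpha * i)
    and normalizes them. The tail decays roughly like (alpha / beta)^i, so a
    moderate n_max leaves only a negligible truncation error when beta > alpha.
    """
    weights = [1.0]
    for i in range(n_max):
        weights.append(weights[-1] * (eta_star + alpha * i) / (beta * (i + 1)))
    total = sum(weights)
    return [w / total for w in weights]


def negative_binomial_pmf(i, r, p):
    """P(Q = i) = Gamma(r + i) / (Gamma(r) i!) * p^i * (1 - p)^r."""
    log_coef = math.lgamma(r + i) - math.lgamma(r) - math.lgamma(i + 1)
    return math.exp(log_coef + i * math.log(p) + r * math.log(1 - p))


if __name__ == "__main__":
    eta_star, alpha, beta = 1.0, 0.5, 1.0
    pi = stationary_by_detailed_balance(eta_star, alpha, beta, n_max=200)
    for i in range(5):
        print(i, pi[i], negative_binomial_pmf(i, eta_star / alpha, alpha / beta))
    print("mean:", sum(i * w for i, w in enumerate(pi)), "vs", eta_star / (beta - alpha))
```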

Figure 1. The transition diagram of the Markov chain for $Q_t$.

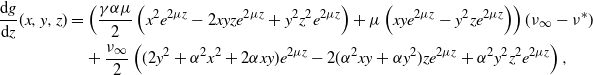

In the remainder of this subsection, let us now develop a few fundamental quantities for this stochastic process, particularly its intensity and active number in system, as these capture the self-exciting behavior of the process. First, in Proposition 1 we compute the transient moment generating function for the intensity $\eta_t$. As we have noted, this can also be used to immediately derive the same transform for $Q_t$, and the proof makes use of this fact.

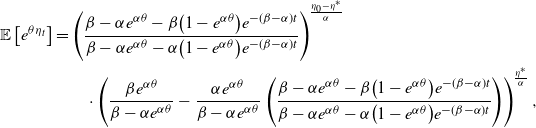

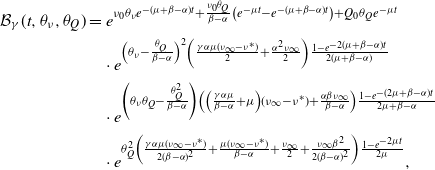

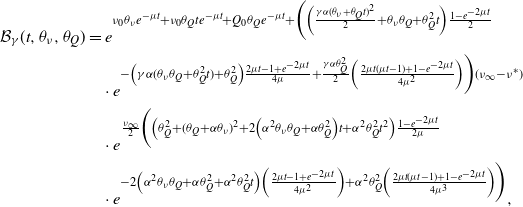

Proposition 1. Let $\eta_t = \eta^* + \alpha Q_t$ be the intensity of an ESEP with baseline intensity $\eta^* > 0$, intensity jump $\alpha > 0$, and expiration rate $\beta > \alpha$. Then the moment generating function for $\eta_t$ is given by

\begin{align*}{\mathbb{E}\left[e^{\theta \eta_t}\right]}&=\left(\frac{\beta - \alpha e^{\alpha\theta} - \beta \big(1-e^{\alpha\theta}\big)e^{-(\beta - \alpha)t}}{\beta - \alpha e^{\alpha\theta} - \alpha \big(1-e^{\alpha\theta}\big)e^{-(\beta - \alpha)t}}\right)^{\!\frac{\eta_0 - \eta^*}{\alpha}}\\&\qquad \cdot \left(\frac{\beta e^{\alpha \theta}}{\beta - \alpha e^{\alpha\theta}}-\frac{\alpha e^{\alpha \theta}}{\beta - \alpha e^{\alpha\theta}}\left(\frac{\beta - \alpha e^{\alpha\theta} - \beta \big(1-e^{\alpha\theta}\big)e^{-(\beta - \alpha)t}}{\beta - \alpha e^{\alpha\theta} - \alpha \big(1-e^{\alpha\theta}\big)e^{-(\beta - \alpha)t}}\right)\right)^{\!\frac{\eta^*}{\alpha}},\end{align*}

for all $t \geq 0$ and $\theta < \frac{1}{\alpha}\log\left(\frac{\beta}{\alpha}\right)$.

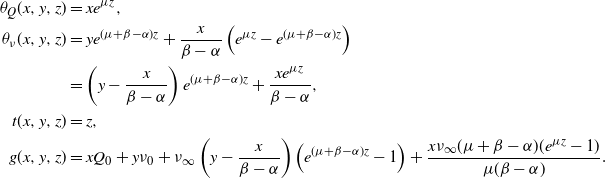

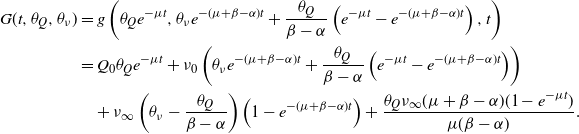

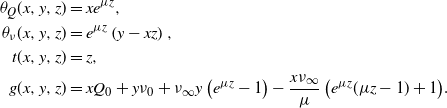

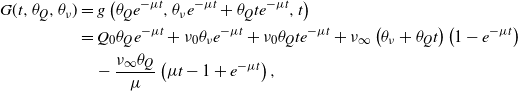

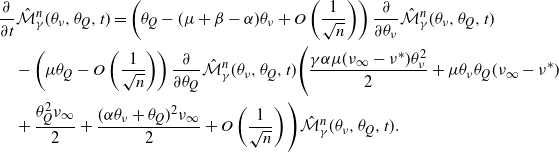

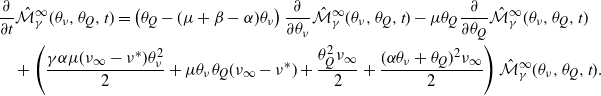

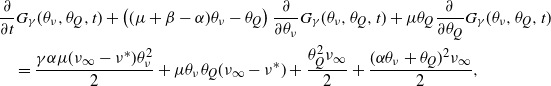

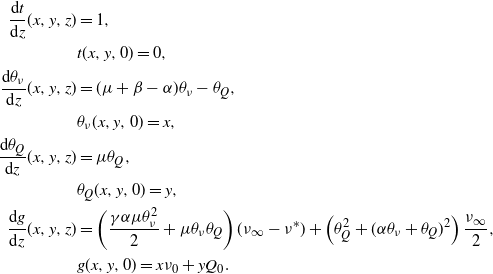

Proof. We will approach this through the perspective of the active number in system,

![]() $Q_t$

. Using Lemma 2, we have that the probability generating function for

$Q_t$

. Using Lemma 2, we have that the probability generating function for

![]() $Q_t$

, say

$Q_t$

, say

![]() $\mathcal{P}(z,t) = {\mathbb{E}\!\left[z^{Q_t}\right]}$

for

$\mathcal{P}(z,t) = {\mathbb{E}\!\left[z^{Q_t}\right]}$

for

![]() $z \in [0,1]$

, is given by the solution to the following partial differential equation (PDE):

$z \in [0,1]$

, is given by the solution to the following partial differential equation (PDE):

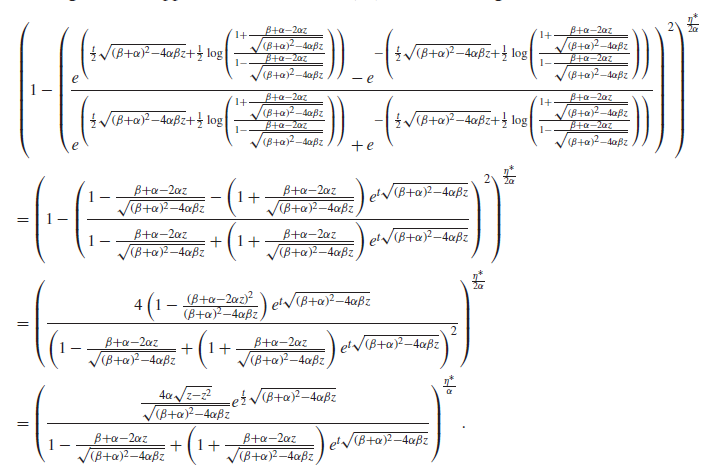

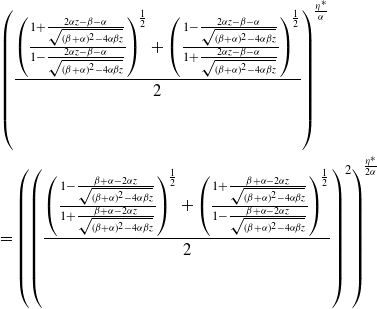

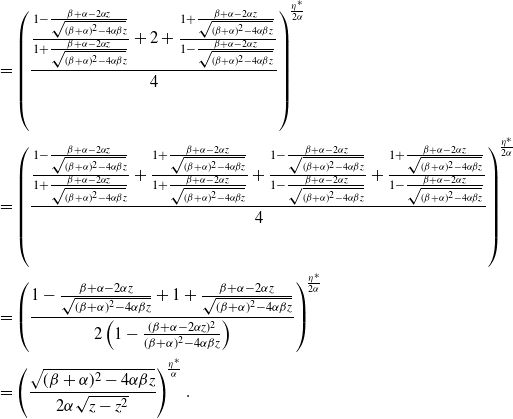

which is equivalently expressed by

with initial condition

![]() $\mathcal{P}(z,0) = z^{Q_0}$

. The solution to this initial value problem is given by

$\mathcal{P}(z,0) = z^{Q_0}$

. The solution to this initial value problem is given by

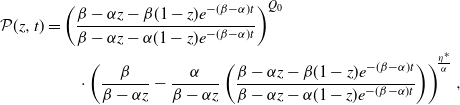

\begin{align*}\mathcal{P}(z,t)&=\left(\frac{\beta - \alpha z - \beta (1-z)e^{-(\beta - \alpha)t}}{\beta - \alpha z - \alpha (1-z)e^{-(\beta - \alpha)t}}\right)^{Q_0}\\&\qquad \cdot \left(\frac{\beta}{\beta - \alpha z}-\frac{\alpha}{\beta - \alpha z}\left(\frac{\beta - \alpha z - \beta (1-z)e^{-(\beta - \alpha)t}}{\beta - \alpha z - \alpha (1-z)e^{-(\beta - \alpha)t}}\right)\right)^{\!\frac{\eta^*}{\alpha}},\end{align*}


yielding the probability generating function for $Q_t$. By setting $z = e^{\theta}$ we obtain the moment generating function of $Q_t$. Finally, using the affine relationship $\eta_t = \eta^* + \alpha Q_t$, we have that

\begin{align*}{\mathbb{E}\!\left[e^{\theta \eta_t}\right]}=e^{\theta\eta^*}\,{\mathbb{E}\!\left[e^{\alpha\theta Q_t}\right]}=e^{\theta\eta^*}\,\mathcal{P}\!\left(e^{\alpha\theta},t\right),\end{align*}

which yields the stated expression with $\eta_0 = \eta^* + \alpha Q_0$, since the factor $e^{\theta\eta^*} = \left(e^{\alpha\theta}\right)^{\frac{\eta^*}{\alpha}}$ is absorbed into the second term.
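As a sanity check on Proposition 1, the closed-form generating function can be compared with a direct numerical solution of the forward equations. The sketch below is our own illustration, not code from the paper: it assumes the transitions of Figure 1 are $Q_t \to Q_t + 1$ at rate $\eta^* + \alpha Q_t$ and $Q_t \to Q_t - 1$ at rate $\beta Q_t$ (the rates that reappear in Proposition 4), truncates the state space at a hypothetical cap `k_max`, and integrates with a fixed-step fourth-order Runge-Kutta scheme. The function names `q_pgf`, `eta_mgf`, and `q_pmf_numeric` and the parameter values are illustrative.

```python
import math


def q_pgf(z, t, alpha, beta, eta_star, q0):
    """Closed-form E[z^{Q_t}] from the proof of Proposition 1."""
    decay = math.exp(-(beta - alpha) * t)
    ratio = ((beta - alpha * z - beta * (1 - z) * decay)
             / (beta - alpha * z - alpha * (1 - z) * decay))
    # beta/(beta - alpha z) - alpha/(beta - alpha z) * ratio, over one denominator
    base = (beta - alpha * ratio) / (beta - alpha * z)
    return ratio ** q0 * base ** (eta_star / alpha)


def eta_mgf(theta, t, alpha, beta, eta_star, q0):
    """E[exp(theta * eta_t)] via eta_t = eta_star + alpha * Q_t; needs alpha*exp(alpha*theta) < beta."""
    return math.exp(theta * eta_star) * q_pgf(math.exp(alpha * theta), t, alpha, beta, eta_star, q0)


def q_pmf_numeric(t, alpha, beta, eta_star, q0, k_max=60, dt=5e-4):
    """RK4 solution of the forward equations for Q_t, truncated at k_max (assumed large enough)."""
    def rhs(p):
        dp = [0.0] * (k_max + 1)
        for j, pj in enumerate(p):
            birth = eta_star + alpha * j if j < k_max else 0.0  # no births out of the cap
            death = beta * j
            dp[j] -= (birth + death) * pj
            if j < k_max:
                dp[j + 1] += birth * pj
            if j > 0:
                dp[j - 1] += death * pj
        return dp

    p = [0.0] * (k_max + 1)
    p[q0] = 1.0
    for _ in range(round(t / dt)):
        k1 = rhs(p)
        k2 = rhs([x + 0.5 * dt * y for x, y in zip(p, k1)])
        k3 = rhs([x + 0.5 * dt * y for x, y in zip(p, k2)])
        k4 = rhs([x + dt * y for x, y in zip(p, k3)])
        p = [x + dt / 6 * (a + 2 * b + 2 * c + d)
             for x, a, b, c, d in zip(p, k1, k2, k3, k4)]
    return p


alpha, beta, eta_star, q0, t = 1.0, 2.0, 1.5, 3, 1.0
p = q_pmf_numeric(t, alpha, beta, eta_star, q0)
for z in (0.0, 0.3, 0.8):
    numeric = sum(pj * z ** j for j, pj in enumerate(p))
    print(f"z={z}: numeric {numeric:.8f}, closed form {q_pgf(z, t, alpha, beta, eta_star, q0):.8f}")
```

The truncation keeps births out of the top state so that total probability is conserved exactly; the cap only needs to sit far in the tail of the transient distribution.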

As we have mentioned, this Markov chain can be shown to be stable for

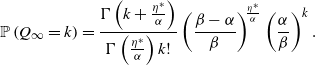

![]() $\beta > \alpha$

through standard techniques. Thus, using the moment generating function from Proposition 1, we can find the steady-state distributions of the intensity and the active number in system by taking the limit of t. We can quickly observe that this leads to a negative binomial distribution, as we state in Theorem 1. Because of the varying definitions of the negative binomial distribution, we state the probability mass function explicitly.

$\beta > \alpha$

through standard techniques. Thus, using the moment generating function from Proposition 1, we can find the steady-state distributions of the intensity and the active number in system by taking the limit of t. We can quickly observe that this leads to a negative binomial distribution, as we state in Theorem 1. Because of the varying definitions of the negative binomial distribution, we state the probability mass function explicitly.

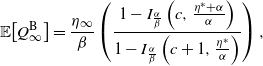

Theorem 1. Let

![]() $\eta_t = \eta^* + \alpha Q_t$

be an ESEP with baseline intensity

$\eta_t = \eta^* + \alpha Q_t$

be an ESEP with baseline intensity

![]() $\eta^* > 0$

, intensity jump

$\eta^* > 0$

, intensity jump

![]() $\alpha > 0$

, and expiration rate

$\alpha > 0$

, and expiration rate

![]() $\beta > \alpha$

. Then the active number in system in steady state follows a negative binomial distribution with parameters

$\beta > \alpha$

. Then the active number in system in steady state follows a negative binomial distribution with parameters

![]() $\frac{\alpha}{\beta}$

and

$\frac{\alpha}{\beta}$

and

![]() $\frac{\eta^*}{\alpha}$

, which is to say that the steady-state probability mass function is

$\frac{\eta^*}{\alpha}$

, which is to say that the steady-state probability mass function is

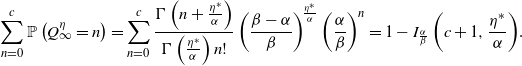

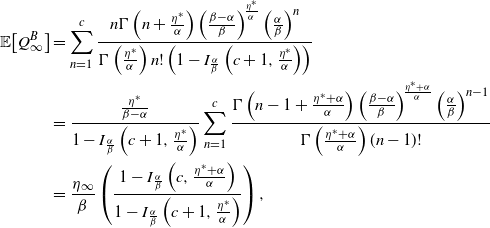

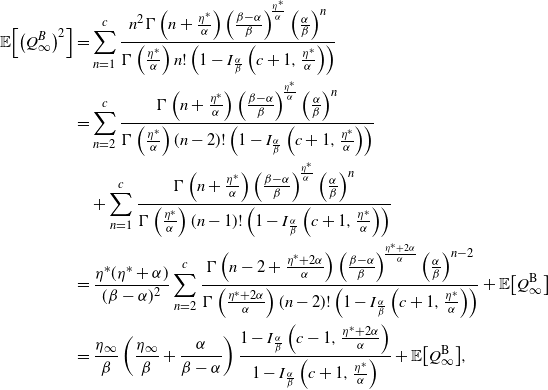

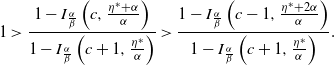

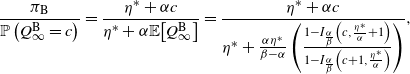

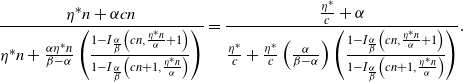

\begin{align}\mathbb{P}\left( Q_\infty = k \right)&=\frac{\Gamma\left(k + \frac{\eta^*}{\alpha}\right)}{\Gamma\left(\frac{\eta^*}{\alpha}\right) k! }\left(\frac{\beta - \alpha}{\beta}\right)^{\!\frac{\eta^*}{\alpha}}\left(\frac{\alpha}{\beta}\right)^k.\end{align}

\begin{align}\mathbb{P}\left( Q_\infty = k \right)&=\frac{\Gamma\left(k + \frac{\eta^*}{\alpha}\right)}{\Gamma\left(\frac{\eta^*}{\alpha}\right) k! }\left(\frac{\beta - \alpha}{\beta}\right)^{\!\frac{\eta^*}{\alpha}}\left(\frac{\alpha}{\beta}\right)^k.\end{align}

Consequently, the steady-state intensity is distributed as $\eta^* + \alpha Q_\infty$, that is, as a negative binomial with parameters $\frac{\alpha}{\beta}$ and $\frac{\eta^*}{\alpha}$ that is scaled by $\alpha$ and shifted by $\eta^*$.

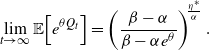

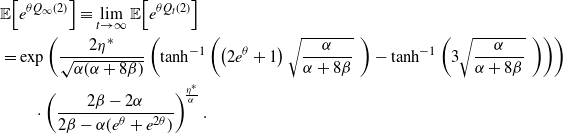

Proof. Using Proposition 1, we can see that the steady-state moment generating function of $Q_t$ is given by

\begin{align*}\lim_{t\to\infty} {\mathbb{E}\!\left[e^{\theta Q_t}\right]}=\left(\frac{\beta-\alpha}{\beta - \alpha e^{\theta}}\right)^{\!\frac{\eta^*}{\alpha}}.\end{align*}

We can observe that this steady-state moment generating function is that of a negative binomial distribution. By the affine transformation $\eta_t = \eta^* + \alpha Q_t$, we find the steady-state distribution for the intensity.
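Theorem 1 can also be checked against the detailed-balance equations of the birth-death chain. The sketch below assumes, as in Figure 1 and Proposition 4, that $Q_t$ increases at rate $\eta^* + \alpha Q_t$ and decreases at rate $\beta Q_t$; it normalizes the balance weights on a hypothetical truncated state space $\{0,\dots,k_{\max}\}$ and compares them with the negative binomial probability mass function, evaluated through `math.lgamma` so that non-integer $\frac{\eta^*}{\alpha}$ is handled. Helper names and parameter values are illustrative.

```python
import math


def nb_pmf(k, alpha, beta, eta_star):
    """P(Q_inf = k) from Theorem 1, with parameters alpha/beta and eta_star/alpha."""
    r, p = eta_star / alpha, alpha / beta
    log_coef = math.lgamma(k + r) - math.lgamma(r) - math.lgamma(k + 1)
    return math.exp(log_coef + r * math.log(1 - p) + k * math.log(p))


def birth_death_stationary(alpha, beta, eta_star, k_max=200):
    """Normalized detailed-balance weights of the truncated birth-death chain."""
    weights = [1.0]
    for j in range(k_max):
        # pi(j + 1) * beta * (j + 1) = pi(j) * (eta_star + alpha * j)
        weights.append(weights[-1] * (eta_star + alpha * j) / (beta * (j + 1)))
    total = sum(weights)
    return [w / total for w in weights]


for alpha, beta, eta_star in [(1.0, 2.0, 1.5), (0.5, 1.3, 0.7)]:
    pi = birth_death_stationary(alpha, beta, eta_star)
    gap = max(abs(pi[k] - nb_pmf(k, alpha, beta, eta_star)) for k in range(40))
    print(alpha, beta, eta_star, gap)
```

The agreement is exact up to rounding because the balance ratio $\frac{\eta^* + \alpha j}{\beta (j+1)}$ equals the ratio of consecutive negative binomial probabilities.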

The negative binomial distribution presented in Theorem 1 allows $\frac{\eta^*}{\alpha}$ to take any positive real value; integrality is not required. If $\frac{\eta^*}{\alpha}$ is in fact an integer, then the gamma functions reduce to the corresponding factorials and their ratio simplifies to a binomial coefficient, reproducing the most familiar form of the negative binomial distribution. In this case, $\frac{\eta^*}{\alpha}$ can be interpreted as the number of failures upon which an experiment is stopped, where the success probability is $\frac{\alpha}{\beta}$ and $k + \frac{\eta^*}{\alpha}$ is the total number of trials.
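Concretely, writing $r = \frac{\eta^*}{\alpha}$ and assuming $r$ is a positive integer, the gamma-function coefficient in Theorem 1 reduces as follows, giving the familiar binomial form of the probability mass function:

\begin{align*}\frac{\Gamma\left(k + r\right)}{\Gamma\left(r\right) k!}=\frac{(k+r-1)!}{(r-1)!\,k!}={\left(\begin{array}{c}k+r-1\\ k\end{array}\right)},\qquad\mathbb{P}\left( Q_\infty = k \right)={\left(\begin{array}{c}k+r-1\\ k\end{array}\right)}\left(1-\frac{\alpha}{\beta}\right)^{r}\left(\frac{\alpha}{\beta}\right)^k.\end{align*}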

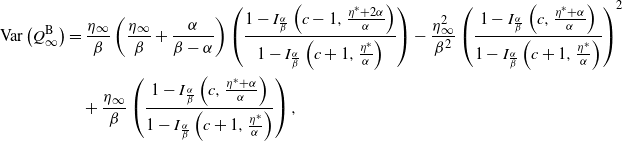

Let us pause to note that this explicit characterization of the steady-state intensity is already an advantage of the ESEP over the traditional Markovian Hawkes process, for which no closed-form stationary distribution of the intensity is available. As a consequence of Theorem 1, the steady-state mean of the intensity is $\eta_\infty \,:\!=\, \frac{\beta \eta^*}{\beta - \alpha}$. Interestingly, this would also be the steady-state mean of the Hawkes process given the same baseline intensity, the same intensity jump size, and an exponential decay rate equal to the rate of expiration. This raises the question of how the two processes otherwise compare when given equivalent parameters. In Proposition 2 we find that although this equivalence of means extends to transient settings, for all higher moments the ESEP dominates the Hawkes process in terms of both the intensity and the counting process.
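The stated mean follows in one line from Theorem 1, using the standard mean $\frac{rp}{1-p}$ of this negative binomial parameterization with $p = \frac{\alpha}{\beta}$ and $r = \frac{\eta^*}{\alpha}$:

\begin{align*}{\mathbb{E}\!\left[Q_\infty\right]}=\frac{\frac{\eta^*}{\alpha}\cdot\frac{\alpha}{\beta}}{1-\frac{\alpha}{\beta}}=\frac{\eta^*}{\beta-\alpha},\qquad\eta^*+\alpha\,{\mathbb{E}\!\left[Q_\infty\right]}=\eta^*+\frac{\alpha\eta^*}{\beta-\alpha}=\frac{\beta\eta^*}{\beta-\alpha}=\eta_\infty.\end{align*}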

Proposition 2. Let $(\eta_t, N_{t,\eta})$ be an ESEP intensity and counting process pair with jump size $\alpha > 0$, expiration rate $\beta > \alpha$, and baseline intensity $\eta^* > 0$. Similarly, let $(\lambda_t, N_{t,\lambda})$ be a Hawkes process intensity and counting process pair with jump size $\alpha > 0$, decay rate $\beta > 0$, and baseline intensity $\eta^* > 0$. Then, if the two processes have equal initial values, their means will satisfy

\begin{align*}{\mathbb{E}\!\left[\eta_t\right]}={\mathbb{E}\!\left[\lambda_t\right]}\quad\text{and}\quad{\mathbb{E}\!\left[N_{t,\eta}\right]}={\mathbb{E}\!\left[N_{t,\lambda}\right]},\end{align*}

and for $m \geq 2$ their mth moments are ordered so that

\begin{align*}{\mathbb{E}\!\left[\eta_t^m\right]}\geq{\mathbb{E}\!\left[\lambda_t^m\right]}\quad\text{and}\quad{\mathbb{E}\!\left[N_{t,\eta}^m\right]}\geq{\mathbb{E}\!\left[N_{t,\lambda}^m\right]},\end{align*}

for all time $t \geq 0$.

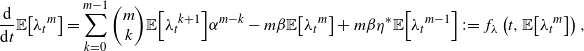

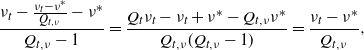

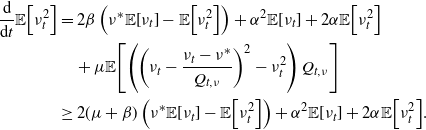

Proof. Let us start with the means. For the intensities, we can note that these are given by the solutions to

\begin{align*}\frac{\textrm{d}}{\textrm{d}t}{\mathbb{E}\!\left[\lambda_t\right]}=\beta\left(\eta^*-{\mathbb{E}\!\left[\lambda_t\right]}\right)+\alpha{\mathbb{E}\!\left[\lambda_t\right]},\qquad\frac{\textrm{d}}{\textrm{d}t}{\mathbb{E}\!\left[\eta_t\right]}=\alpha{\mathbb{E}\!\left[\eta_t\right]}-\beta\left({\mathbb{E}\!\left[\eta_t\right]}-\eta^*\right),\end{align*}

and through simplification one can quickly observe that these two ordinary differential equations (ODEs) are equivalent. Thus, because we have assumed that the processes have the same initial values, we find that ${\mathbb{E}\!\left[\lambda_t\right]} = {\mathbb{E}\!\left[\eta_t\right]}$. Since

\begin{align*}{\mathbb{E}\!\left[N_{t,\lambda}\right]}=\int_0^t{\mathbb{E}\!\left[\lambda_s\right]}\textrm{d}s\quad\text{and}\quad{\mathbb{E}\!\left[N_{t,\eta}\right]}=\int_0^t{\mathbb{E}\!\left[\eta_s\right]}\textrm{d}s,\end{align*}

this equality immediately extends to the means of the counting processes as well. We will now use these equations for the means as the base cases for inductive arguments, beginning again with the intensities. For the inductive step, we will assume that the intensity moment ordering holds for moments 1 to $m-1$. The mth moment of the Hawkes process intensity is then given by the solution to

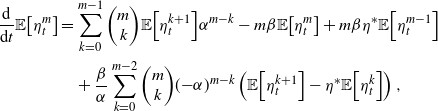

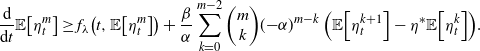

\begin{align*}\frac{\textrm{d}}{\textrm{d}t}{\mathbb{E}\!\left[{\lambda_t}^m\right]}=\sum_{k=0}^{m-1} {\left(\begin{array}{c}m\\ k\end{array}\right)} {\mathbb{E}\!\left[{\lambda_t}^{k+1}\right]} \alpha^{m-k}-m \beta {\mathbb{E}\!\left[{\lambda_t}^m\right]}+m \beta \eta^{*} {\mathbb{E}\!\left[{\lambda_{t}}^{m-1}\right]}\,:\!=\,f_{\lambda}\left(t, {\mathbb{E}\!\left[{\lambda_t}^{m}\right]}\right),\end{align*}


where

![]() $f_\lambda\!\left(t, {\mathbb{E}\!\left[\lambda_t^{m}\right]}\right)$

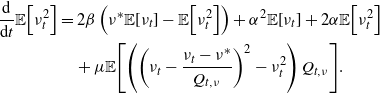

is meant to capture that this ODE depends on the value of the mth moment and of the lower moments, which by the inductive hypothesis we take as known functions of the time t. Then, the mth moment of the ESEP intensity will satisfy

$f_\lambda\!\left(t, {\mathbb{E}\!\left[\lambda_t^{m}\right]}\right)$

is meant to capture that this ODE depends on the value of the mth moment and of the lower moments, which by the inductive hypothesis we take as known functions of the time t. Then, the mth moment of the ESEP intensity will satisfy

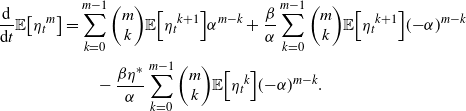

\begin{align*}\frac{\textrm{d}}{\textrm{d}t}{\mathbb{E}\!\left[{\eta_t}^m\right]}=&\sum_{k=0}^{m-1} {\left(\begin{array}{c}m\\ k\end{array}\right)} {\mathbb{E}\!\left[{\eta_t}^{k+1}\right]} \alpha^{m-k}+\frac{\beta}{\alpha}\sum_{k=0}^{m-1} {\left(\begin{array}{c}m\\ k\end{array}\right)} {\mathbb{E}\!\left[{\eta_t}^{k+1}\right]} ({-}\alpha)^{m-k}\\&\quad -\frac{\beta \eta^*}{\alpha}\sum_{k=0}^{m-1} {\left(\begin{array}{c}m\\ k\end{array}\right)} {\mathbb{E}\!\left[{\eta_t}^{k}\right]} ({-}\alpha)^{m-k}.\end{align*}


By pulling the $k = m-1$ terms off the top of the second and third summations, we can re-express this ODE as

\begin{align*}\frac{\textrm{d}}{\textrm{d}t}{\mathbb{E}\!\left[\eta_t^m\right]}&=\sum_{k=0}^{m-1} {\left(\begin{array}{c}m\\ k\end{array}\right)} {\mathbb{E}\!\left[\eta_t^{k+1}\right]} \alpha^{m-k}-m\beta {\mathbb{E}\!\left[\eta_t^{m}\right]}+m\beta \eta^* {\mathbb{E}\!\left[\eta_t^{m-1}\right]}\\&\quad+\frac{\beta}{\alpha}\sum_{k=0}^{m-2} {\left(\begin{array}{c}m\\ k\end{array}\right)} ({-}\alpha)^{m-k}\left({\mathbb{E}\!\left[\eta_t^{k+1}\right]}-\eta^* {\mathbb{E}\!\left[\eta_t^{k}\right]}\right),\end{align*}


and through the definition of $f_\lambda({\cdot})$ and the inductive hypothesis, we can find the following lower bound:

\begin{align*}\frac{\textrm{d}}{\textrm{d}t}{\mathbb{E}\!\left[\eta_t^m\right]}&\geq f_\lambda\!\left(t, {\mathbb{E}\!\left[\eta_t^m\right]}\right)+\frac{\beta}{\alpha}\sum_{k=0}^{m-2} {\left(\begin{array}{c}m\\ k\end{array}\right)} ({-}\alpha)^{m-k}\left({\mathbb{E}\!\left[\eta_t^{k+1}\right]}-\eta^* {\mathbb{E}\!\left[\eta_t^{k}\right]}\right)\!.\end{align*}

\begin{align*}\frac{\textrm{d}}{\textrm{d}t}{\mathbb{E}\!\left[\eta_t^m\right]}&\geq f_\lambda\!\left(t, {\mathbb{E}\!\left[\eta_t^m\right]}\right)+\frac{\beta}{\alpha}\sum_{k=0}^{m-2} {\left(\begin{array}{c}m\\ k\end{array}\right)} ({-}\alpha)^{m-k}\left({\mathbb{E}\!\left[\eta_t^{k+1}\right]}-\eta^* {\mathbb{E}\!\left[\eta_t^{k}\right]}\right)\!.\end{align*}

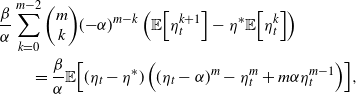

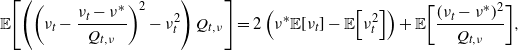

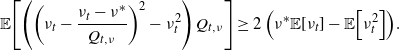

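Since the binomial expansion of $(\eta_t - \alpha)^m$ differs from $\sum_{k=0}^{m-2}\binom{m}{k}({-}\alpha)^{m-k}\eta_t^{k}$ only by its $k = m-1$ and $k = m$ terms, namely $-m\alpha\eta_t^{m-1}$ and $\eta_t^m$, this rightmost term can then be expressed as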

\begin{align*}&\frac{\beta}{\alpha}\sum_{k=0}^{m-2} {\left(\begin{array}{c}m\\ k\end{array}\right)} ({-}\alpha)^{m-k}\left({\mathbb{E}\!\left[\eta_t^{k+1}\right]}-\eta^* {\mathbb{E}\!\left[\eta_t^{k}\right]}\right)\\&\qquad =\frac{\beta}{\alpha}{\mathbb{E}\!\left[(\eta_t - \eta^*)\left((\eta_t - \alpha)^m-\eta_t^m+m \alpha \eta_t^{m-1}\right)\right]},\end{align*}


and we can now reason about the quantity inside the expectation. By definition, we have that

![]() $\eta_t \geq \eta^*$

with probability one, and furthermore we can observe that if

$\eta_t \geq \eta^*$

with probability one, and furthermore we can observe that if

![]() $\eta_t - \eta^* > 0$

, then

$\eta_t - \eta^* > 0$

, then

![]() $\eta_t \geq \eta^* + \alpha > \alpha$

. Thus, let us consider

$\eta_t \geq \eta^* + \alpha > \alpha$

. Thus, let us consider

![]() $(\eta_t - \alpha)^m-\eta_t^m+m \alpha \eta_t^{m-1}$

assuming

$(\eta_t - \alpha)^m-\eta_t^m+m \alpha \eta_t^{m-1}$

assuming

![]() $\eta_t > \alpha$

. Dividing through by

$\eta_t > \alpha$

. Dividing through by

![]() $\eta_t^m$

, we have the expression

$\eta_t^m$

, we have the expression

Since

![]() $(1-x)^m - 1 + mx$

is equal to 0 at

$(1-x)^m - 1 + mx$

is equal to 0 at

![]() $x = 0$

and is non-decreasing on

$x = 0$

and is non-decreasing on

![]() $x \in [0,1)$

via a first-derivative check, we can note that (5) is nonnegative for all

$x \in [0,1)$

via a first-derivative check, we can note that (5) is nonnegative for all

![]() $\eta_t > \eta^*$

. Thus, we have that

$\eta_t > \eta^*$

. Thus, we have that

and by consequence,

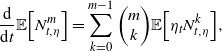

completing the proof of the intensity moment ordering via Lemma 3. For the counting processes, let us again assume as an inductive hypothesis that the moment ordering holds for moments 1 through $m-1$, with the mean equality serving as the base case. Then, the mth moment of the ESEP counting process will satisfy

\begin{align*}\frac{\textrm{d}}{\textrm{d}t}{\mathbb{E}\!\left[N_{t,\eta}^m\right]}&=\sum_{k=0}^{m-1} {\left(\begin{array}{c}m\\ k\end{array}\right)} {\mathbb{E}\!\left[\eta_t N_{t,\eta}^k\right]},\end{align*}


and the ODE for the mth Hawkes counting process moment is analogous. By the Fortuin–Kasteleyn–Ginibre inequality, we can observe that

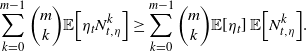

\begin{align*}\sum_{k=0}^{m-1} {\left(\begin{array}{c}m \\ k\end{array}\right)} {\mathbb{E}\!\left[\eta_t N_{t,\eta}^k\right]}\geq \sum_{k=0}^{m-1} {\left(\begin{array}{c}m \\ k\end{array}\right)} {\mathbb{E}\!\left[\eta_t\right]}\, {\mathbb{E}\!\left[N_{t,\eta}^k\right]}.\end{align*}


By the inductive hypothesis, we have that ${\mathbb{E}\!\left[N_{t,\eta}^k\right]} \geq {\mathbb{E}\!\left[N_{t,\lambda}^k\right]}$ for each $k \leq m - 1$, and since ${\mathbb{E}\!\left[\eta_t\right]} = {\mathbb{E}\!\left[\lambda_t\right]}$, we can observe that

\begin{align*}\sum_{k=0}^{m-1} {\left(\begin{array}{c}m\\ k\end{array}\right)} {\mathbb{E}\!\left[\eta_t\right]}\, {\mathbb{E}\!\left[N_{t,\eta}^k\right]}\geq \sum_{k=0}^{m-1} {\left(\begin{array}{c}m\\ k\end{array}\right)} {\mathbb{E}\!\left[\lambda_t\right]}\, {\mathbb{E}\!\left[N_{t,\lambda}^k\right]}.\end{align*}


Finally, by another application of Lemma 3, we have ${\mathbb{E}\!\left[N_{t,\lambda}^m\right]} \leq {\mathbb{E}\!\left[N_{t,\eta}^m\right]}$.
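The intensity moment ODEs used in this proof form a closed system (the equation for moment m involves only moments up to m), so the ordering can be illustrated numerically. The sketch below is our own illustration with arbitrary example parameters and hypothetical helper names: it integrates the displayed ODEs for the Hawkes and ESEP intensities for $m \le 3$ with a fixed-step fourth-order Runge-Kutta scheme, starting both from the common deterministic value $\eta_0 = \lambda_0 = \eta^* + \alpha Q_0$.

```python
from math import comb


def hawkes_moment_rhs(M, alpha, beta, eta_star):
    """d/dt E[lambda_t^m] for m = 1..len(M)-1, with M[j] = E[lambda_t^j] and M[0] = 1."""
    dM = [0.0] * len(M)
    for m in range(1, len(M)):
        jumps = sum(comb(m, k) * M[k + 1] * alpha ** (m - k) for k in range(m))
        dM[m] = jumps - m * beta * M[m] + m * beta * eta_star * M[m - 1]
    return dM


def esep_moment_rhs(M, alpha, beta, eta_star):
    """d/dt E[eta_t^m]: up-jumps at rate eta_t, down-jumps at rate beta*(eta_t - eta_star)/alpha."""
    dM = [0.0] * len(M)
    for m in range(1, len(M)):
        up = sum(comb(m, k) * M[k + 1] * alpha ** (m - k) for k in range(m))
        down = beta / alpha * sum(comb(m, k) * M[k + 1] * (-alpha) ** (m - k) for k in range(m))
        shift = -beta * eta_star / alpha * sum(comb(m, k) * M[k] * (-alpha) ** (m - k) for k in range(m))
        dM[m] = up + down + shift
    return dM


def integrate(rhs, M, t, alpha, beta, eta_star, dt=2e-3):
    """Classical RK4 on the closed moment system."""
    for _ in range(round(t / dt)):
        k1 = rhs(M, alpha, beta, eta_star)
        k2 = rhs([a + 0.5 * dt * b for a, b in zip(M, k1)], alpha, beta, eta_star)
        k3 = rhs([a + 0.5 * dt * b for a, b in zip(M, k2)], alpha, beta, eta_star)
        k4 = rhs([a + dt * b for a, b in zip(M, k3)], alpha, beta, eta_star)
        M = [a + dt / 6 * (b1 + 2 * b2 + 2 * b3 + b4)
             for a, b1, b2, b3, b4 in zip(M, k1, k2, k3, k4)]
    return M


alpha, beta, eta_star, q0 = 1.0, 2.0, 1.5, 2
start = [(eta_star + alpha * q0) ** j for j in range(4)]  # moments of a deterministic start
results = {}
for t in (0.5, 2.0, 10.0):
    esep = integrate(esep_moment_rhs, start, t, alpha, beta, eta_star)
    hawkes = integrate(hawkes_moment_rhs, start, t, alpha, beta, eta_star)
    results[t] = (esep, hawkes)
    print(t, [round(x, 4) for x in esep[1:]], [round(x, 4) for x in hawkes[1:]])
```

The first moments agree to rounding error at every time, while the second and third ESEP moments stay above their Hawkes counterparts, as the proposition asserts.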

The fact that the ESEP variance dominates the Hawkes variance should not be surprising, since the presence of both up- and down-jumps means that the ESEP sample paths are subject to more abrupt changes. Moreover, because the two processes have equal means, this also shows that the ESEP is more over-dispersed than the Hawkes process, which may be an attractive feature for data modeling. It is worth noting that matrix computations are available for all moments of these intensities via [Reference Daw and Pender21], through which one could use the method of moments to fit the processes to data.
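For $m = 2$ the gap can be made explicit in steady state. Setting the time derivatives to zero in the second-moment ODEs from the proof of Proposition 2 and using the common stationary mean $\eta_\infty = \frac{\beta \eta^*}{\beta - \alpha}$, a short computation of our own gives

\begin{align*}\lim_{t\to\infty}{\mathbb{E}\!\left[\lambda_t^2\right]}=\frac{\left(\alpha^2+2\beta\eta^*\right)\eta_\infty}{2(\beta-\alpha)}\quad\Longrightarrow\quad\lim_{t\to\infty}\mathrm{Var}\left(\lambda_t\right)=\frac{\alpha^2\beta\eta^*}{2(\beta-\alpha)^2},\end{align*}

whereas Theorem 1 gives

\begin{align*}\lim_{t\to\infty}\mathrm{Var}\left(\eta_t\right)=\alpha^2\,\mathrm{Var}\left(Q_\infty\right)=\alpha^2\cdot\frac{\frac{\eta^*}{\alpha}\cdot\frac{\alpha}{\beta}}{\left(1-\frac{\alpha}{\beta}\right)^2}=\frac{\alpha^2\beta\eta^*}{(\beta-\alpha)^2}.\end{align*}

So, with matched parameters and $\beta > \alpha$, the stationary variance of the ESEP intensity is exactly twice that of the Hawkes intensity.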

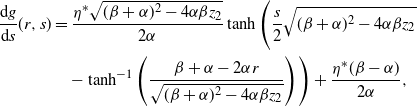

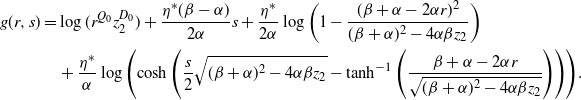

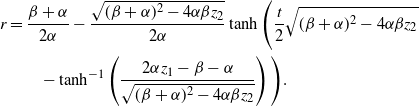

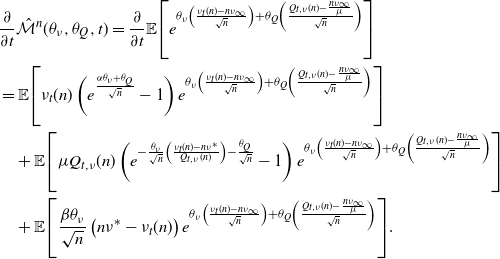

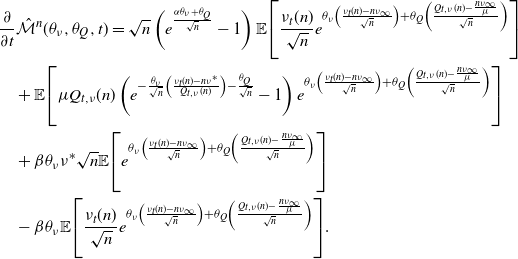

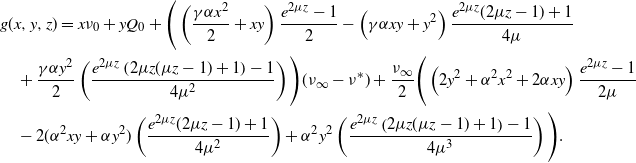

2.3. The ephemerally self-exciting counting process

Thus far we have studied the intensity of the ESEP, as this process by definition tracks the self-excitement. However, this excitation is manifested in the actual arrivals from the process, which are counted in $N_t$. We now turn our attention to developing fundamental quantities for this counting process. To begin, we give the probability generating function of the counting process in closed form in Proposition 3. By comparison, the generating functions of the Hawkes process are only expressible in terms of solutions to ODEs with no known closed form; see for example Subsection 3.5 of [Reference Daw and Pender20].

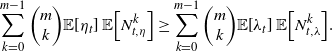

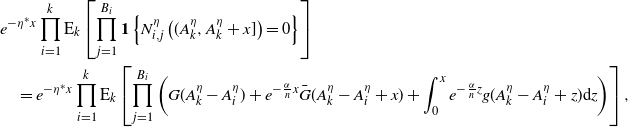

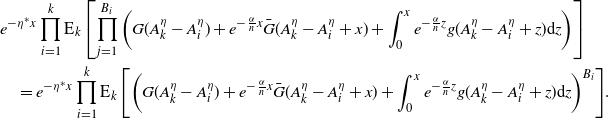

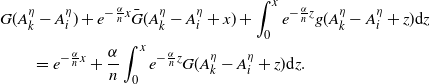

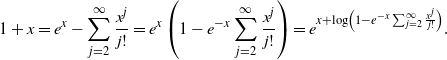

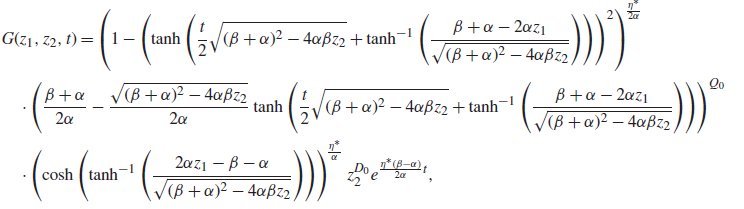

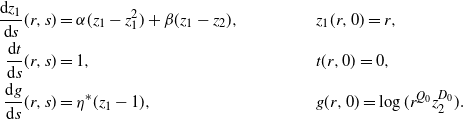

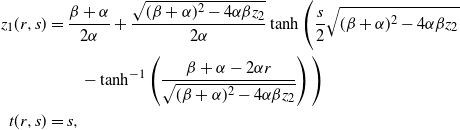

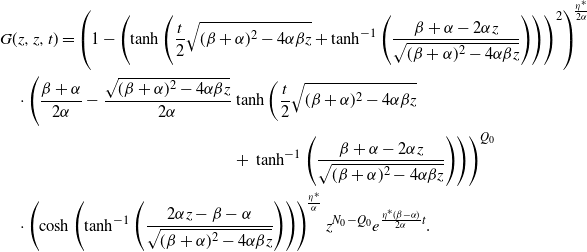

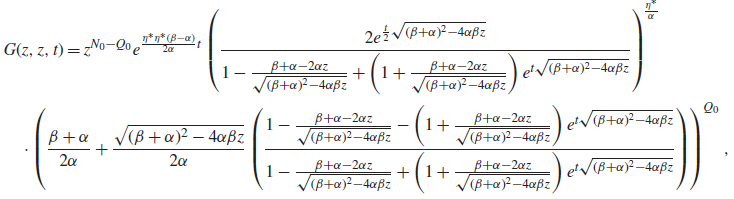

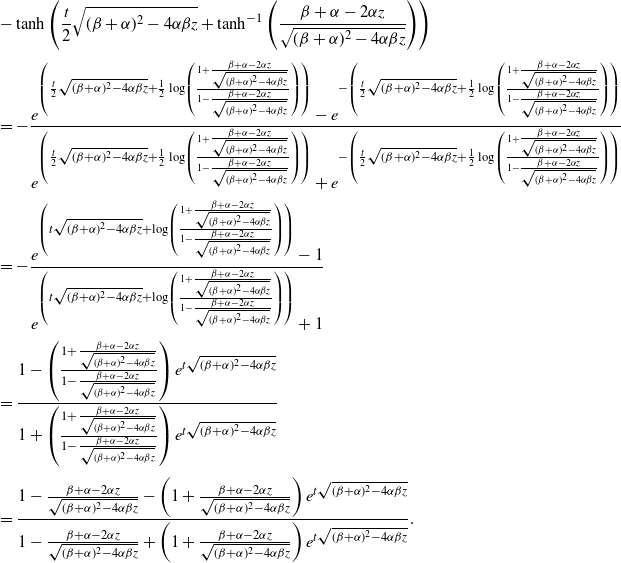

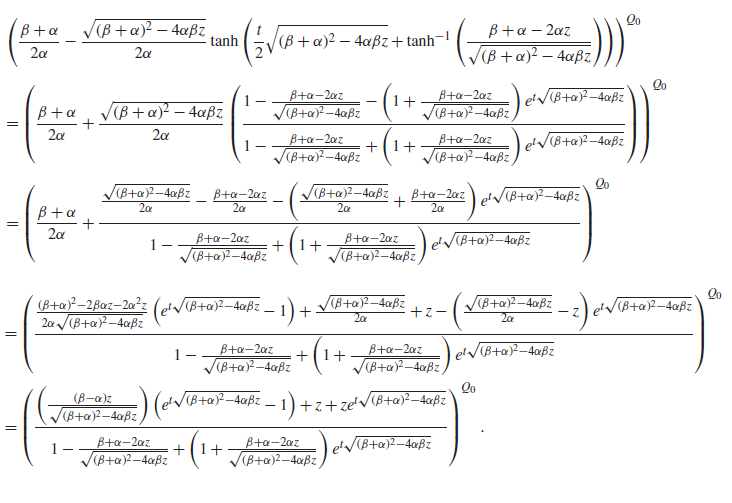

Proposition 3. Let $N_t$ be the number of arrivals by time $t \geq 0$ in an ESEP with baseline intensity $\eta^* > 0$, intensity jump $\alpha > 0$, and expiration rate $\beta > \alpha$. Then, for $z \in (0,1]$, the probability generating function of $N_t$ is given by

\begin{align}&{\mathbb{E}\!\left[z^{N_t}\right]}=e^{\frac{\eta^*(\beta-\alpha)}{2\alpha}t}\left(\frac{2e^{\frac{t}{2} \sqrt{(\beta+\alpha)^2 - 4\alpha\beta z}}}{1-\frac{ \beta+\alpha-2\alpha z}{\sqrt{(\beta+\alpha)^2 - 4\alpha\beta z}}+\left(1 + \frac{ \beta+\alpha-2\alpha z}{\sqrt{(\beta+\alpha)^2 - 4\alpha\beta z}}\right)e^{t \sqrt{(\beta+\alpha)^2 - 4\alpha\beta z}}}\right)^{\!\frac{\eta^*}{\alpha}}\nonumber \\&\quad\cdot \left(\!\frac{\beta + \alpha}{2\alpha z}+\frac{\sqrt{(\beta+\alpha)^2 - 4\alpha\beta z}}{2\alpha z}\!\left(\!\frac{1-\frac{ \beta+\alpha-2\alpha z}{\sqrt{(\beta+\alpha)^2 - 4\alpha\beta z}}-\left(1 + \frac{ \beta+\alpha-2\alpha z}{\sqrt{(\beta+\alpha)^2 - 4\alpha\beta z}}\right)e^{t \sqrt{(\beta+\alpha)^2 - 4\alpha\beta z}}}{1-\frac{ \beta+\alpha-2\alpha z}{\sqrt{(\beta+\alpha)^2 - 4\alpha\beta z}}\!+\!\left(1 + \frac{ \beta+\alpha-2\alpha z}{\sqrt{(\beta+\alpha)^2 - 4\alpha\beta z}}\right)e^{t \sqrt{(\beta+\alpha)^2 - 4\alpha\beta z}}}\right)\!\right)^{Q_0},\end{align}

where $Q_0$ is the active number in system at time 0.

Proof. Because of the cumbersome length of some equations, the proof is given in Appendix D.
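The generating function in Proposition 3 can be cross-checked numerically without the details of Appendix D. The sketch below rests on an assumption of our own, a branching (cluster) argument rather than the paper's proof: each entity active at time 0 contributes a factor $h(t)$ solving the Riccati equation $h' = \alpha z h^2 - (\alpha+\beta)h + \beta$ with $h(0) = 1$, and the baseline stream contributes $\exp\left(\eta^*\int_0^t (z h(s) - 1)\,\textrm{d}s\right)$. In the closed form, the bracketed term raised to $Q_0$ is evaluated as $g(t)/z$, where $g(t)$ denotes that bracket with denominators $2\alpha$; dividing by $z$ is what makes $\mathbb{E}[z^{N_0}] = 1$. Parameters and helper names are illustrative.

```python
import math


def count_pgf_closed(z, t, alpha, beta, eta_star, q0):
    """Closed-form E[z^{N_t}] for 0 < z <= 1, following the structure of Proposition 3."""
    s = math.sqrt((beta + alpha) ** 2 - 4 * alpha * beta * z)
    c = (beta + alpha - 2 * alpha * z) / s
    e = math.exp(t * s)
    denom = 1 - c + (1 + c) * e
    baseline = (math.exp(eta_star * (beta - alpha) * t / (2 * alpha))
                * (2 * math.exp(t * s / 2) / denom) ** (eta_star / alpha))
    g = (beta + alpha) / (2 * alpha) + s / (2 * alpha) * (1 - c - (1 + c) * e) / denom
    return baseline * (g / z) ** q0  # g(0) = z, so g/z starts at 1


def count_pgf_ode(z, t, alpha, beta, eta_star, q0, dt=1e-3):
    """Same quantity from the (assumed) branching Riccati ODE, integrated with RK4."""
    def rhs(h):
        # (dh/dt for one initially active entity, d/dt of the baseline log-factor)
        return (alpha * z * h * h - (alpha + beta) * h + beta, eta_star * (z * h - 1.0))

    h, log_base = 1.0, 0.0
    for _ in range(round(t / dt)):
        a1, b1 = rhs(h)
        a2, b2 = rhs(h + 0.5 * dt * a1)
        a3, b3 = rhs(h + 0.5 * dt * a2)
        a4, b4 = rhs(h + dt * a3)
        h += dt / 6 * (a1 + 2 * a2 + 2 * a3 + a4)
        log_base += dt / 6 * (b1 + 2 * b2 + 2 * b3 + b4)
    return math.exp(log_base) * h ** q0


alpha, beta, eta_star, q0, t = 1.0, 2.0, 1.5, 2, 2.0
for z in (0.2, 0.5, 0.9):
    print(z, count_pgf_closed(z, t, alpha, beta, eta_star, q0),
          count_pgf_ode(z, t, alpha, beta, eta_star, q0))
```

A finite difference of either function at $z = 1$ should also reproduce $\mathbb{E}[N_t] = \int_0^t \mathbb{E}[\eta_s]\,\textrm{d}s$, which gives an independent check on the normalization.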

In addition to calculating the probability generating function, we can also find a matrix calculation for the transient probability mass function of the counting process. To do so, we recognize that the time until the next arrival occurs can be treated as the time to absorption in a continuous-time Markov chain. By building from this idea to construct a transition matrix for several successive arrivals, we find the form for the distribution given in Proposition 4.

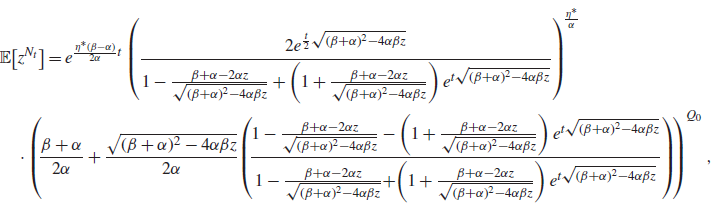

Proposition 4. Let

![]() $N_t$

be the number of arrivals by time t in an ESEP with baseline intensity

$N_t$

be the number of arrivals by time t in an ESEP with baseline intensity

![]() $\eta^* > 0$

, intensity jump

$\eta^* > 0$

, intensity jump

![]() $\alpha > 0$

, and expiration rate

$\alpha > 0$

, and expiration rate

![]() $\beta > \alpha$

. Further, let

$\beta > \alpha$

. Further, let

![]() $Q_0 = k$

be the initial active number in system. Then for

$Q_0 = k$

be the initial active number in system. Then for

![]() $i \in \mathbb{N}$

, define the matrices

$i \in \mathbb{N}$

, define the matrices

![]() $\textbf{D}_i \in \mathbb{R}^{k + i + 1 \times k + i + 1}$

and

$\textbf{D}_i \in \mathbb{R}^{k + i + 1 \times k + i + 1}$

and

![]() $\textbf{S}_i \in \mathbb{R}^{k+i+1 \times k + i+2}$

as

$\textbf{S}_i \in \mathbb{R}^{k+i+1 \times k + i+2}$

as

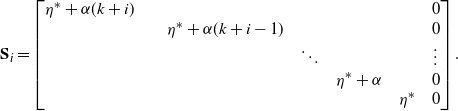

\begin{align*}\textbf{D}_i&=\begin{bmatrix}-(\eta^* + (k+i)(\alpha + \beta)) &\quad (k+i)\beta & & &\\ & \quad -(\eta^*+ (k+i-1)(\alpha + \beta)) & & &\\ & & \ddots &&\\ &&&\quad -(\eta^*+ \alpha+\beta)&\beta\\ &&&&\quad -\eta^*\end{bmatrix}\end{align*}

\begin{align*}\textbf{D}_i&=\begin{bmatrix}-(\eta^* + (k+i)(\alpha + \beta)) &\quad (k+i)\beta & & &\\ & \quad -(\eta^*+ (k+i-1)(\alpha + \beta)) & & &\\ & & \ddots &&\\ &&&\quad -(\eta^*+ \alpha+\beta)&\beta\\ &&&&\quad -\eta^*\end{bmatrix}\end{align*}

and

\begin{align*}\textbf{S}_i&=\begin{bmatrix}\eta^* + \alpha(k+i)\quad & & & & & \quad 0\\ & \quad\eta^* + \alpha(k+i-1) & & && \quad 0\\ & & \quad\ddots &&& \quad\vdots\\ &&&\quad\eta^* + \alpha&& \quad 0\\ &&&&\quad\eta^* &\quad 0\end{bmatrix}.\end{align*}

\begin{align*}\textbf{S}_i&=\begin{bmatrix}\eta^* + \alpha(k+i)\quad & & & & & \quad 0\\ & \quad\eta^* + \alpha(k+i-1) & & && \quad 0\\ & & \quad\ddots &&& \quad\vdots\\ &&&\quad\eta^* + \alpha&& \quad 0\\ &&&&\quad\eta^* &\quad 0\end{bmatrix}.\end{align*}

Further, let

![]() $\textbf{Z}_n \in \mathbb{R}^{\hat d_n \times \hat d_n}$

for

$\textbf{Z}_n \in \mathbb{R}^{\hat d_n \times \hat d_n}$

for

![]() $\hat d_n = \frac{n(n+1)}{2} + (n+1)(k+1)$

be a matrix such that

$\hat d_n = \frac{n(n+1)}{2} + (n+1)(k+1)$

be a matrix such that

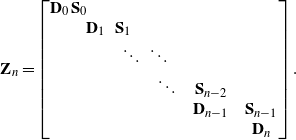

\begin{align*}\textbf{Z}_n=\begin{bmatrix}\textbf{D}_0 & \textbf{S}_0\quad &&&&\\&\quad \textbf{D}_1 & \textbf{S}_1 &&&\\&&\quad \ddots & \ddots &&\\&&& \quad\ddots & \quad \textbf{S}_{n-2} &\\&&&&\quad \textbf{D}_{n-1} & \quad \textbf{S}_{n-1}\\&&&&&\quad \textbf{D}_n\end{bmatrix}.\end{align*}

\begin{align*}\textbf{Z}_n=\begin{bmatrix}\textbf{D}_0 & \textbf{S}_0\quad &&&&\\&\quad \textbf{D}_1 & \textbf{S}_1 &&&\\&&\quad \ddots & \ddots &&\\&&& \quad\ddots & \quad \textbf{S}_{n-2} &\\&&&&\quad \textbf{D}_{n-1} & \quad \textbf{S}_{n-1}\\&&&&&\quad \textbf{D}_n\end{bmatrix}.\end{align*}

Then the probability that

![]() $N_t = n$

is given by

$N_t = n$

is given by

where

![]() ${{\textbf{v}}_{{j}}} \in \mathbb{R}^{\hat d_n}$

is the unit column vector for the jth coordinate, and

${{\textbf{v}}_{{j}}} \in \mathbb{R}^{\hat d_n}$

is the unit column vector for the jth coordinate, and

![]() ${{\textbf{v}}_{{:}}} = \sum_{j = 0}^{k+n} {{\textbf{v}}_{{\hat d_n - j}}}$

.

${{\textbf{v}}_{{:}}} = \sum_{j = 0}^{k+n} {{\textbf{v}}_{{\hat d_n - j}}}$

.
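The matrix formula lends itself to a direct implementation. The sketch below is our own, with hypothetical helper names and example parameters: it stores $\textbf{Z}_n$ as sparse (row, column, rate) triplets following the block layout above and, instead of calling a matrix-exponential routine, propagates the row vector ${{\textbf{v}}_{{1}}}^{\textrm{T}} e^{\textbf{Z}_n t}$ through the linear ODE $\textbf{x}' = \textbf{x}\textbf{Z}_n$ with a fixed-step fourth-order Runge-Kutta scheme. Because $\textbf{Z}_n$ is block upper triangular and the chain starts in the first block, the leading blocks of this solution coincide with those computed from $\textbf{Z}_i$ for $i < n$, so one run returns $\mathbb{P}(N_t = i)$ for every $i \le n$ by summing each block.

```python
import math


def build_z(n, k, alpha, beta, eta_star):
    """Sparse triplets for Z_n; block i lists states (k+i, i), (k+i-1, i), ..., (0, i)."""
    offsets, entries, start = [], [], 0
    for i in range(n + 1):
        offsets.append(start)
        size = k + i + 1
        for r in range(size):
            j = k + i - r                  # active entities in this state
            row = start + r
            entries.append((row, row, -(eta_star + j * (alpha + beta))))      # diagonal of D_i
            if j > 0:
                entries.append((row, row + 1, j * beta))                      # expiration, in D_i
            if i < n:
                entries.append((row, start + size + r, eta_star + alpha * j))  # arrival, in S_i
        start += size
    return offsets, entries, start         # start equals d_hat_n at the end


def count_pmf(n, t, k, alpha, beta, eta_star, dt=1e-3):
    """[P(N_t = 0), ..., P(N_t = n)] given Q_0 = k, via RK4 on x' = x Z_n."""
    offsets, entries, dim = build_z(n, k, alpha, beta, eta_star)

    def times_z(x):
        y = [0.0] * dim
        for row, col, rate in entries:
            y[col] += x[row] * rate
        return y

    x = [0.0] * dim
    x[0] = 1.0                              # v_1: the chain starts in state (k, 0)
    for _ in range(round(t / dt)):
        k1 = times_z(x)
        k2 = times_z([a + 0.5 * dt * b for a, b in zip(x, k1)])
        k3 = times_z([a + 0.5 * dt * b for a, b in zip(x, k2)])
        k4 = times_z([a + dt * b for a, b in zip(x, k3)])
        x = [a + dt / 6 * (b1 + 2 * b2 + 2 * b3 + b4)
             for a, b1, b2, b3, b4 in zip(x, k1, k2, k3, k4)]
    ends = offsets[1:] + [dim]
    return [sum(x[lo:hi]) for lo, hi in zip(offsets, ends)]


pmf = count_pmf(15, 0.5, 2, 1.0, 2.0, 1.5)
print([round(p, 6) for p in pmf[:6]], sum(pmf))
```

The last entry is exactly the block sum selected by ${{\textbf{v}}_{{:}}}$; for large $n$ or long horizons, a dedicated matrix-exponential routine would be the more natural choice than a fixed-step integrator.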

Proof. This follows directly from viewing $\textbf{Z}_n$ as a sub-matrix of the generator matrix of a continuous-time Markov chain, much as one does when calculating probabilities for phase-type distributions. Specifically, the sub-generator matrix is defined on the state space $\mathcal{S} = \bigcup_{i=0}^n \{(0,i), (1,i), \dots, (k+i-1,i),(k+i,i)\}$. In this scenario, the state $(s_1, s_2)$ represents having $s_1$ entities in the system and having seen $s_2$ arrivals since time 0. Then, $\textbf{D}_i$ is the sub-generator matrix for transitions from the sub-state space $\{(k+i,i), (k+i-1,i), \dots, (1,i),(0,i)\}$ to itself (where the states are ordered in that fashion). Similarly, $\textbf{S}_i$ contains the rates of transitions from states in $\{(k+i,i), (k+i-1,i), \dots, (1,i),(0,i)\}$ to states in $\{(k+i+1, i+1), (k+i,i+1), \dots, (1,i+1),(0,i+1)\}$. One can then consider this from an absorbing continuous-time Markov chain perspective: once $n+1$ arrivals have occurred, it is not possible to transition back to any state in which n arrivals had occurred. Hence, we only need the matrix $\textbf{Z}_n$ to account for up to n arrivals. Then, $e^{\textbf{Z}_n t}$ is the sub-matrix of transition probabilities among states in $\mathcal{S}$, and its rows may sum to less than 1 because the chain may have experienced more than n arrivals by time t. Finally, because $Q_0 = k$, the chain starts in state (k,0); further, because we are seeking the probability that there have been exactly n arrivals by time t, we want the probability of transitions from (k,0) to any of the states in $\{(k+n,n), (k+n-1,n), \dots, (1,n),(0,n)\}$.
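The construction in the proof translates into a small numerical routine. The sketch below is hypothetical: it assumes the ESEP intensity in state $(s_1, s_2)$ is $\eta^* + \alpha s_1$ with a baseline rate `eta_star`, and that each entity departs after an independent $\textrm{Exp}(\beta)$ duration, and it fills in $\textbf{D}_i$ and $\textbf{S}_i$ accordingly. Rather than calling a matrix-exponential library, it evaluates the row of $e^{\textbf{Z}_n t}$ belonging to the starting state $(k,0)$ by uniformization, a standard alternative that only needs vector-matrix products with the substochastic matrix $\textbf{I} + \textbf{Z}_n/q$.

```python
import math

def esep_count_pmf(n, t, k, eta_star, alpha, beta, tol=1e-12):
    """Sketch: P(N_t = n) for an ESEP started from Q_0 = k.

    Hypothetical model assumptions: arrival intensity eta_star + alpha * s1 in
    state (s1, s2), and each entity leaves at rate beta (total rate beta * s1).
    """
    # Block i lists the states (k+i, i), (k+i-1, i), ..., (0, i) in that order.
    offsets = [0]
    for i in range(n + 1):
        offsets.append(offsets[-1] + k + i + 1)
    dim = offsets[-1]  # number of states in S

    def idx(s1, i):
        return offsets[i] + (k + i - s1)

    # Sparse representation of Z_n: diagonal entries plus off-diagonal rates.
    diag = [0.0] * dim
    moves = [[] for _ in range(dim)]
    for i in range(n + 1):
        for s1 in range(k + i + 1):
            r = idx(s1, i)
            arrival = eta_star + alpha * s1
            diag[r] = -(arrival + beta * s1)
            if s1 > 0:
                moves[r].append((idx(s1 - 1, i), beta * s1))    # expiration: D_i
            if i < n:
                moves[r].append((idx(s1 + 1, i + 1), arrival))  # arrival: S_i
            # Arrivals out of block n leave S (more than n arrivals by time t).

    # Uniformization: e^{Z t} = sum_m Pois(m; q t) (I + Z / q)^m, q >= max |Z_rr|.
    q = max(-d for d in diag) or 1.0
    if q * t > 700:
        raise ValueError("q * t too large for this simple sketch (exp underflows)")
    vec = [0.0] * dim
    vec[idx(k, 0)] = 1.0                      # start in state (k, 0)
    weight = math.exp(-q * t)
    acc = [weight * v for v in vec]
    cumulative, m = weight, 0
    max_terms = int(q * t + 10 * math.sqrt(q * t) + 50)
    while 1.0 - cumulative > tol and m < max_terms:
        nxt = [0.0] * dim
        for r, v in enumerate(vec):
            if v == 0.0:
                continue
            nxt[r] += v * (1.0 + diag[r] / q)
            for c, rate in moves[r]:
                nxt[c] += v * rate / q
        vec = nxt
        m += 1
        weight *= q * t / m
        cumulative += weight
        acc = [a + weight * b for a, b in zip(acc, vec)]
    # acc is the (k, 0) row of e^{Z_n t}; its mass on block n is P(N_t = n).
    return sum(acc[offsets[n]:])

print([round(esep_count_pmf(n, 1.0, 2, 1.0, 0.5, 2.0), 4) for n in range(5)])
```

With `alpha = 0` the excitation vanishes and the routine should reproduce a Poisson probability with mean `eta_star * t`, which makes a convenient sanity check. The plain uniformization loop underflows once $q t$ is large, so a scaled or library matrix exponential would be preferable there.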

With these fundamental quantities in hand, let us now turn to explore more nuanced connections between the ESEP and other stochastic processes in the following section. Doing so will provide further comparison between the Hawkes process and the ESEP, and moreover will formally connect the notion of self-excitement to similar concepts such as contagion, virality, and rich-get-richer effects.

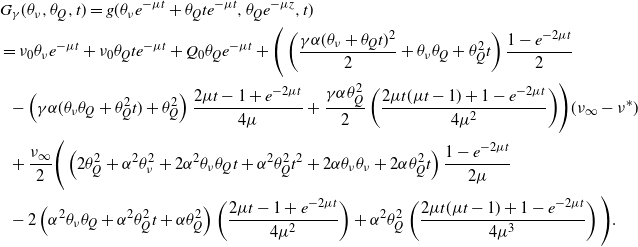

3. Relating ephemeral self-excitement to branching processes, random walks, and epidemics

Aside from the original definition, the most frequently utilized result for Hawkes processes is perhaps the immigration–birth representation first shown in [Reference Hawkes and Oakes37]. By viewing a portion of arrivals as immigrants—externally driven and stemming from a homogeneous Poisson process—and then viewing the remaining portion as offspring—excitation-driven descendants of the immigrants and the prior offspring—one can take new perspectives on self-exciting processes. From this position, if an arrival is a descendant then it has a unique parent, the excitement of which spurred this arrival into existence. Every entity has the potential to generate offspring. This viewpoint takes on added meaning in the context of ephemeral self-excitement, as an entity only has the opportunity to generate descendants so long as it remains in the system. In this section, we will use this idea to connect self-exciting processes to well-known stochastic models that have applications ranging from public health to Bayesian statistics. Furthermore, these connections will also help us form comparisons between the Hawkes process and the model we have introduced, the ESEP. The different dynamics are at the forefront of this process comparison, as the branching structure is dictated by the self-excitation caused by a single arrival. For the Hawkes process, this increase in the arrival rate is eternal but ever-diminishing, whereas in the ESEP the jump is ephemeral but constant when it does exist.

3.1. Discrete-time perspectives through branching processes

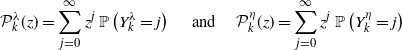

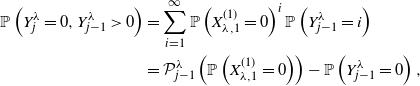

Let us first view these processes through a discrete-time lens as branching processes. In this subsection we will interpret classical branching process results in application to these self-exciting processes. Taking the immigration–birth representation as inspiration, we start by considering the distribution of the total number of offspring of a single arrival. That is, we want to calculate the probability mass function for the number of arrivals that are generated directly from the excitement caused by the initial arrival. To constitute the total number of offspring, we will consider all the children of this initial entity across all time. For the ESEP, this equals the number of arrivals generated by the entity throughout its duration in the system; in the Hawkes process this counts the number of arrivals spurred by the entity as time goes to infinity. Given that the stability conditions are satisfied throughout, in Proposition 5 we calculate these distributions by way of inhomogeneous Poisson processes, yielding a Poisson mixture form for each.

Proposition 5. Let $\beta > \alpha > 0$. Let $X^\eta$ be the number of new arrivals generated by the excitement caused by an arbitrary initial arrival throughout its duration in the system in an ESEP with jump size $\alpha$ and expiration rate $\beta$. Then this offspring count is geometrically distributed with probability mass function

\begin{align*}\mathbb{P}\!\left(X^\eta = k\right) = \frac{\beta}{\beta + \alpha}\left(\frac{\alpha}{\beta + \alpha}\right)^{k}.\end{align*}

Similarly, let $X^\lambda$ be the number of new arrivals generated by the excitement caused by an arbitrary initial arrival in a Hawkes process with jump size $\alpha$ and decay rate $\beta$. This offspring count is then Poisson distributed with probability mass function

\begin{align*}\mathbb{P}\!\left(X^\lambda = k\right) = \frac{e^{-\frac{\alpha}{\beta}}}{k!}\left(\frac{\alpha}{\beta}\right)^{k},\end{align*}

for all $k \in \mathbb{N}$.
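Proposition 5 can be checked by simulating one entity's offspring directly from the dynamics. In the sketch below (the helper names are hypothetical), the ESEP entity produces rate-$\alpha$ arrivals until its $\textrm{Exp}(\beta)$ duration ends, while the Hawkes offspring are the points of an inhomogeneous Poisson process with rate $\alpha e^{-\beta t}$ on $[0,\infty)$, generated by time-changing a unit-rate Poisson process (its total integrated rate is $\alpha/\beta$). The empirical frequencies are then set beside the geometric and Poisson probability mass functions.

```python
import math
import random

def esep_offspring(alpha, beta, rng):
    # Entity stays for S ~ Exp(beta); while present it spawns arrivals at rate alpha.
    duration = rng.expovariate(beta)
    count, clock = 0, rng.expovariate(alpha)
    while clock < duration:
        count += 1
        clock += rng.expovariate(alpha)
    return count

def hawkes_offspring(alpha, beta, rng):
    # Offspring are points of a Poisson process with rate alpha * exp(-beta * t) on
    # [0, inf); after a time change they are unit-rate points below alpha / beta.
    total_mass = alpha / beta
    count, gamma = 0, rng.expovariate(1.0)
    while gamma < total_mass:
        count += 1
        gamma += rng.expovariate(1.0)
    return count

def empirical_pmf(sampler, alpha, beta, n_samples, k_max, seed=0):
    # Relative frequencies of 0, 1, ..., k_max offspring over n_samples draws.
    rng = random.Random(seed)
    counts = [0] * (k_max + 1)
    for _ in range(n_samples):
        x = sampler(alpha, beta, rng)
        if x <= k_max:
            counts[x] += 1
    return [c / n_samples for c in counts]

alpha, beta = 1.0, 2.0
geometric = [beta / (alpha + beta) * (alpha / (alpha + beta)) ** k for k in range(5)]
poisson = [math.exp(-alpha / beta) * (alpha / beta) ** k / math.factorial(k) for k in range(5)]
esep_freq = empirical_pmf(esep_offspring, alpha, beta, 100_000, 4, seed=1)
hawkes_freq = empirical_pmf(hawkes_offspring, alpha, beta, 100_000, 4, seed=2)
for k in range(5):
    print(k, round(esep_freq[k], 3), round(geometric[k], 3),
          round(hawkes_freq[k], 3), round(poisson[k], 3))
```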

Proof. Without loss of generality, we assume that the initial arrival in each process occurred at time 0. Then, at time $t \geq 0$, the excitement generated by these initial arrivals has intensities given by $\alpha e^{-\beta t}$ and $\alpha \textbf{1}\{t < S\}$ for the Hawkes and ESEP processes, respectively, where $S \sim \textrm{Exp}(\beta)$. Since the number of points of an inhomogeneous Poisson process is Poisson distributed with mean equal to its integrated intensity (see [Reference Daley and Vere-Jones16]), the offspring distributions across all time can then be expressed as

\begin{align*}X^\lambda \sim \textrm{Pois}\left(\int_0^\infty \alpha e^{-\beta t}\,\textrm{d}t\right), \qquad X^\eta \sim \textrm{Pois}\left(\int_0^\infty \alpha \textbf{1}\{t < S\}\,\textrm{d}t\right),\end{align*}

the latter conditionally on S, which are equivalently stated as $X^\lambda \sim \textrm{Pois}\left(\alpha/\beta\right)$ and $X^\eta \sim \textrm{Pois}\left(\alpha S\right)$. This immediately yields the stated distributions for $X^\lambda$ and $X^\eta$, as the Poisson–exponential mixture is known to yield a geometric distribution; see for example the overview of Poisson mixtures in [Reference Karlis and Xekalaki39].
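For completeness, the Poisson–exponential mixture can be evaluated directly: conditioning on $S \sim \textrm{Exp}(\beta)$ and using $\int_0^\infty s^k e^{-cs}\,\textrm{d}s = k!/c^{k+1}$ gives

\begin{align*}\mathbb{P}\!\left(X^\eta = k\right) = \int_0^\infty \frac{e^{-\alpha s}(\alpha s)^k}{k!}\,\beta e^{-\beta s}\,\textrm{d}s = \frac{\beta\alpha^k}{k!}\cdot\frac{k!}{(\alpha+\beta)^{k+1}} = \frac{\beta}{\beta+\alpha}\left(\frac{\alpha}{\beta+\alpha}\right)^{k}.\end{align*}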

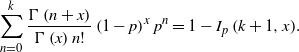

We now move towards considering the total progeny of an initial arrival, meaning the total number of arrivals generated by the excitement of an initial arrival, by the excitement of its offspring, by that of their offspring, and so on across all time. It is important to note that, in contrast to the number of offspring, the progeny includes the initial arrival itself. As we will see, the stability of the self-exciting processes implies that this total number of descendants is almost surely finite. This demonstrates the necessity of immigration for these processes to survive. From the offspring distributions in Proposition 5, the descendant process of the Hawkes process is a Poisson branching process, and similarly that of the ESEP is a geometric branching process. These are well-studied branching process models, so many results are available to us. In particular, we now derive the progeny distributions for these two processes from a result for random walks with potentially multiple simultaneous steps forward: the well-known hitting time theorem, stated below in Lemma 1.
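The stability claim can be made concrete through the mean offspring number. By Proposition 5 both offspring laws have the same mean,

\begin{align*}\mathbb{E}\!\left[X^\lambda\right] = \frac{\alpha}{\beta}, \qquad \mathbb{E}\!\left[X^\eta\right] = \mathbb{E}\!\left[\mathbb{E}\!\left[X^\eta \mid S\right]\right] = \alpha\,\mathbb{E}\!\left[S\right] = \frac{\alpha}{\beta},\end{align*}

so under $\beta > \alpha$ both descendant processes are subcritical branching processes. A subcritical branching process dies out with probability one, so each family tree is almost surely finite, and without fresh immigrants the arrivals eventually stop.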

Lemma 1. (Hitting time theorem.) The total progeny Z of a branching process started from a single individual, with offspring distribution equal in law to $X_1$, satisfies

\begin{align*}\mathbb{P}\!\left(Z = k\right) = \frac{1}{k}\,\mathbb{P}\!\left(X_1 + X_2 + \dots + X_k = k - 1\right),\end{align*}

where $X_1, \dots, X_k$ are i.i.d., for all $k \in \mathbb{Z}^+$.

Proof. See [Reference Otter52] for the original statement and proof in terms of random walks; a review and elementary proof are given in the brief note [Reference Van der Hofstad and Keane58].
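Lemma 1 also gives a direct numerical recipe: convolve the offspring probability mass function k times and read off the mass at $k-1$. The sketch below uses a hypothetical helper `progeny_pmf` that truncates the offspring law; because offspring counts are nonnegative, mass discarded at values of at least `k_max` never flows back into the retained values, so the output is exact up to floating point provided the offspring list covers $0, \dots,$ `k_max - 1`. The usage lines compare a Poisson offspring law against the closed form $e^{-mk}(mk)^{k-1}/k!$ implied by Lemma 1.

```python
import math

def progeny_pmf(offspring_pmf, k_max):
    """Hypothetical helper: [P(Z = 1), ..., P(Z = k_max)] via Lemma 1.

    offspring_pmf[j] = P(X_1 = j) for j = 0, 1, ..., at least k_max - 1.
    """
    result = []
    conv = [1.0] + [0.0] * (k_max - 1)   # law of the empty sum (k = 0)
    for k in range(1, k_max + 1):
        # Convolve once more with the offspring law, keeping values 0..k_max-1.
        nxt = [0.0] * k_max
        for s, p in enumerate(conv):
            if p == 0.0:
                continue
            for j, q in enumerate(offspring_pmf[: k_max - s]):
                nxt[s + j] += p * q
        conv = nxt
        result.append(conv[k - 1] / k)   # P(Z = k) = P(X_1 + ... + X_k = k - 1) / k
    return result

m = 0.6
poisson_offspring = [math.exp(-m) * m ** j / math.factorial(j) for j in range(30)]
via_lemma = progeny_pmf(poisson_offspring, 30)
borel = [math.exp(-m * k) * (m * k) ** (k - 1) / math.factorial(k) for k in range(1, 31)]
print(max(abs(a - b) for a, b in zip(via_lemma, borel)))
```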

We now use the hitting time theorem to give the progeny distributions for the Hawkes process and the ESEP in Proposition 6. This is a common technique for branching processes, and it now yields valuable insight into these two self-exciting models.

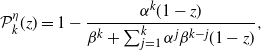

Proposition 6. Let $\beta > \alpha > 0$. Let $Z^\eta$ be a random variable for the total progeny of an arbitrary arrival in an ESEP with intensity jump $\alpha$ and expiration rate $\beta$. Likewise, let $Z^\lambda$ be a random variable for the total progeny of an arbitrary arrival in a Hawkes process with intensity jump $\alpha$ and decay rate $\beta$. Then the probability mass functions for $Z^\eta$ and $Z^\lambda$ are given by

\begin{align*}\mathbb{P}\!\left(Z^\eta = k\right) &= \frac{1}{k}\binom{2k-2}{k-1}\frac{\alpha^{k-1}\beta^{k}}{(\alpha+\beta)^{2k-1}},\\\mathbb{P}\!\left(Z^\lambda = k\right) &= \frac{e^{-\frac{\alpha k}{\beta}}}{k!}\left(\frac{\alpha k}{\beta}\right)^{k-1},\end{align*}

where $k \in \mathbb{Z}^+$.

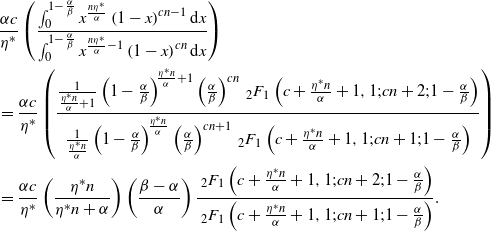

Proof. This follows from applying Lemma 1 to Proposition 5. Because the sum of independent Poisson random variables is Poisson distributed with the sum of the rates, we have that

\begin{align*}\mathbb{P}\!\left(Z^\lambda = k\right) = \frac{1}{k}\,\mathbb{P}\!\left(K_1 = k - 1\right) = \frac{1}{k}\cdot\frac{e^{-\frac{\alpha k}{\beta}}}{(k-1)!}\left(\frac{\alpha k}{\beta}\right)^{k-1},\end{align*}

where $K_1 \sim \textrm{Pois}\left(\frac{\alpha k}{\beta}\right)$. This yields the expression for the probability mass function for $Z^\lambda$. Similarly, for $Z^\eta$ we note that the sum of independent geometric random variables has a negative binomial distribution, which implies that

\begin{align*}\mathbb{P}\!\left(Z^\eta = k\right) = \frac{1}{k}\,\mathbb{P}\!\left(K_2 = k - 1\right) = \frac{1}{k}\binom{2k-2}{k-1}\left(\frac{\beta}{\beta+\alpha}\right)^{k}\left(\frac{\alpha}{\beta+\alpha}\right)^{k-1},\end{align*}

where $K_2 \sim \textrm{NegBin}\left(k,\frac{\alpha}{\beta + \alpha}\right)$ is the sum of k independent copies of $X^\eta$, and this completes the proof.
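The two closed forms are easy to evaluate. The sketch below (function names are illustrative, not from the source) works on the log scale via `math.lgamma` to avoid overflow for large k, and checks numerically that both distributions sum to one and share the mean $\beta/(\beta-\alpha)$, while the ESEP puts more mass far out in the tail. The parameter values are arbitrary.

```python
import math

def hawkes_progeny_pmf(k, alpha, beta):
    """P(Z^lambda = k) from Proposition 6 (a Borel law with parameter alpha / beta)."""
    m = alpha / beta
    log_p = -m * k + (k - 1) * math.log(m * k) - math.lgamma(k + 1)
    return math.exp(log_p)

def esep_progeny_pmf(k, alpha, beta):
    """P(Z^eta = k) from Proposition 6, computed on the log scale."""
    log_binom = math.lgamma(2 * k - 1) - 2 * math.lgamma(k)   # log C(2k-2, k-1)
    log_p = (log_binom + (k - 1) * math.log(alpha) + k * math.log(beta)
             - (2 * k - 1) * math.log(alpha + beta) - math.log(k))
    return math.exp(log_p)

alpha, beta = 1.0, 2.0
ks = range(1, 600)   # truncation; both tails decay geometrically here
for name, pmf in (("ESEP", esep_progeny_pmf), ("Hawkes", hawkes_progeny_pmf)):
    total = sum(pmf(k, alpha, beta) for k in ks)
    mean = sum(k * pmf(k, alpha, beta) for k in ks)
    print(name, round(total, 6), round(mean, 6))
```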

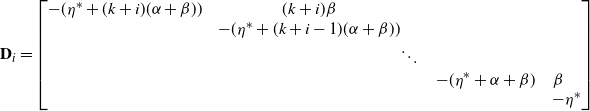

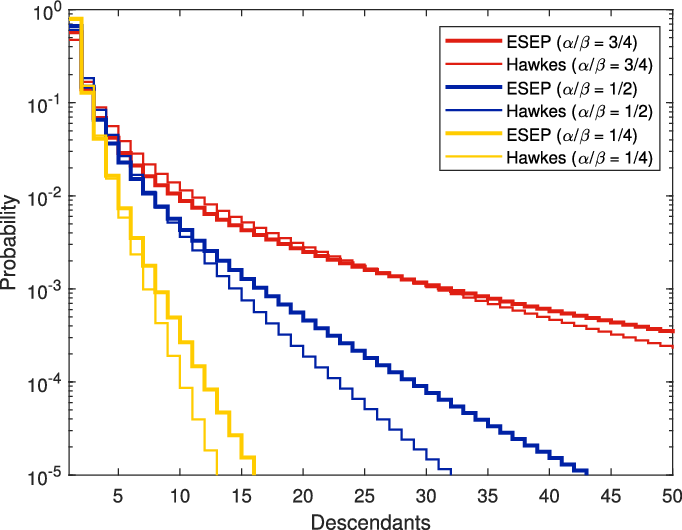

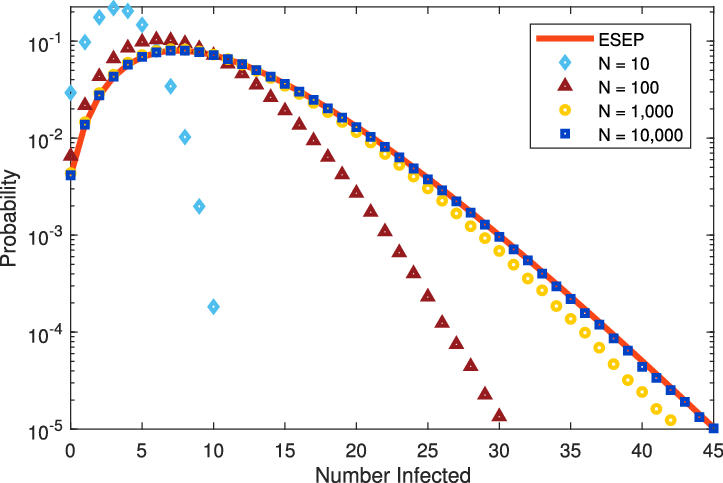

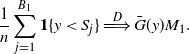

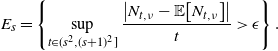

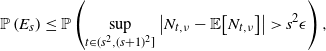

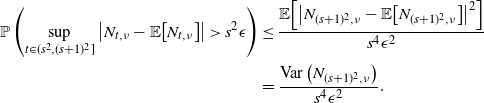

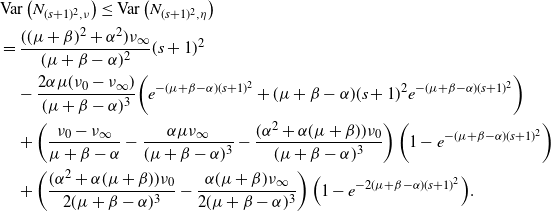

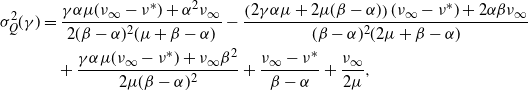

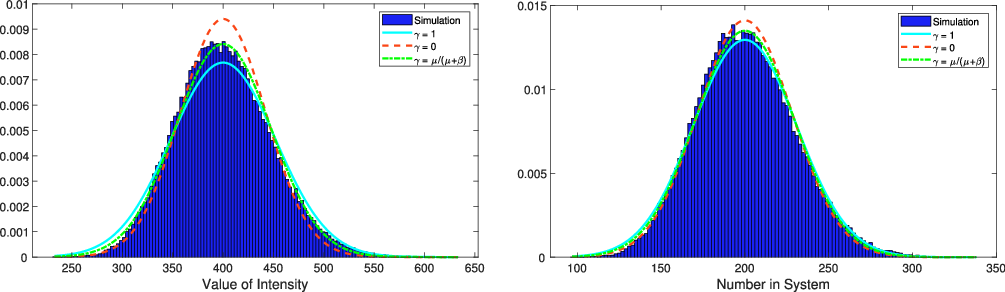

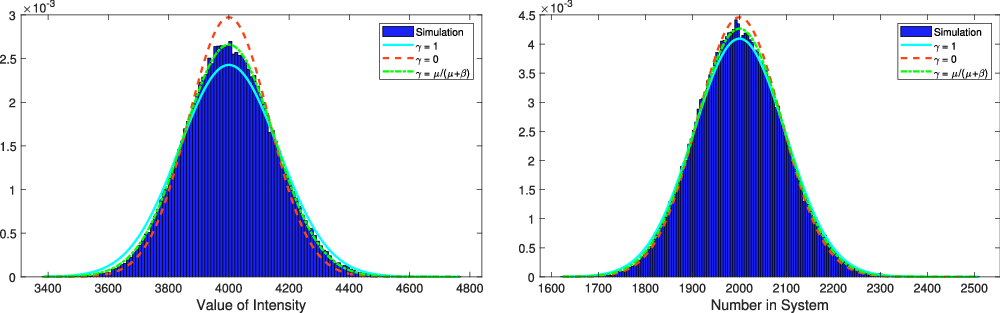

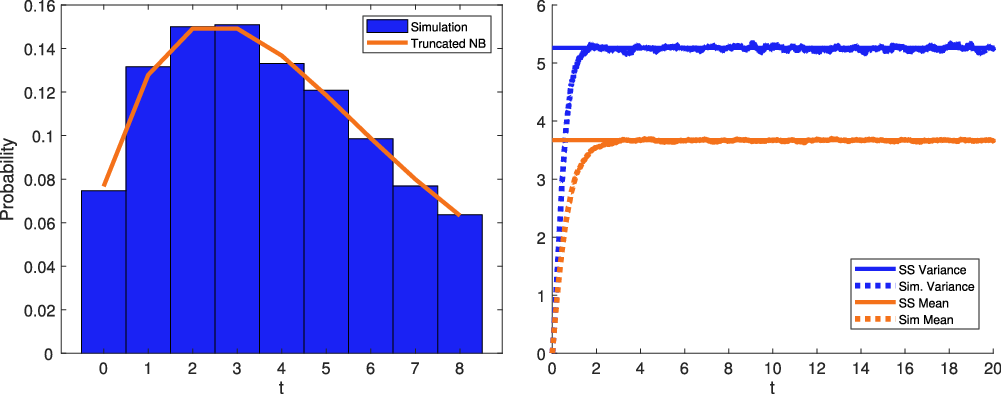

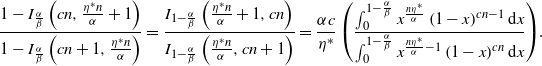

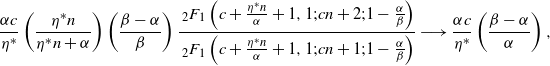

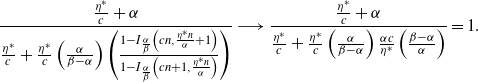

For a visual comparison of the descendants in the ESEP and the Hawkes process, we plot these two progeny distributions for equivalent parameters in Figure 2. As suggested by the variance ordering in Proposition 2, the tail of the ESEP progeny distribution is heavier than that of the Hawkes process.

Figure 2. Progeny distributions for the ESEP and the Hawkes process with matching parameters.

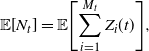
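The first two moments of the progeny follow from standard branching process arguments. Write $m = \alpha/\beta$ for the common mean offspring number and $\sigma^2$ for the offspring variance. Wald's identity applied to the walk $\sum_{i=1}^{j}(X_i - 1)$, which first reaches $-1$ at $j = Z$, gives $(m-1)\,\mathbb{E}\!\left[Z\right] = -1$, and conditioning on the first generation in $Z = 1 + \sum_{i=1}^{X_1} Z^{(i)}$ (with $Z^{(i)}$ i.i.d. copies of Z) gives the variance, so that

\begin{align*}\mathbb{E}\!\left[Z\right] = \frac{1}{1-m} = \frac{\beta}{\beta-\alpha}, \qquad \textrm{Var}\!\left(Z\right) = \frac{\sigma^2}{(1-m)^3}.\end{align*}

The Poisson offspring of the Hawkes process has $\sigma^2 = \alpha/\beta$, while the geometric offspring of the ESEP has $\sigma^2 = \alpha(\alpha+\beta)/\beta^2$, hence

\begin{align*}\textrm{Var}\!\left(Z^\lambda\right) = \frac{\alpha\beta^2}{(\beta-\alpha)^3}, \qquad \textrm{Var}\!\left(Z^\eta\right) = \frac{\alpha\beta(\alpha+\beta)}{(\beta-\alpha)^3} = \frac{\alpha+\beta}{\beta}\,\textrm{Var}\!\left(Z^\lambda\right).\end{align*}

Both progeny distributions thus have the same mean, while the ESEP progeny has the larger variance, consistent with its heavier tail.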

While the mean of each progeny distribution can be calculated from the probability mass functions in Proposition 6, it can also easily be found using Wald’s identity. Through standard infinitesimal generator approaches, one can calculate that the expected number of arrivals (including by immigration) in the ESEP is

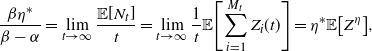

However, using these branching process representations, we can also express this as

\begin{align*}{\mathbb{E}\!\left[N_t\right]} = {\mathbb{E}\!\left[\sum_{i=1}^{M_t} Z_{i}(t)\right]},\end{align*}