1. Introduction

Originally defined as “dedicated Web-based online courses for second languages with unrestricted access and potentially unlimited participation” (Bárcena & Martín-Monje, 2014: 1), language MOOCs (or LMOOCs) have emerged as an enticing alternative to traditional language education. Over the past decade, they have grown exponentially, driven by advances in educational technology and the increasing demand for accessible language learning opportunities (Sallam, Martín-Monje & Li, 2022; Zhang & Sun, 2023). Notably, during the COVID-19 pandemic, language learning ranked among the top 10 subjects for MOOC learners globally (Shah, 2020). Given their evolving nature and expanding applications, LMOOCs have been redefined as “dedicated Web-based online courses which deal with various aspects related to second languages, such as language learning, language teaching and learning methodology, linguistic expression of cultures or language teacher education. They have unrestricted access and potentially unlimited participation” (Martín-Monje & Borthwick, 2024: 176). This redefinition reflects the adaptability of LMOOCs to diverse educational contexts, making them a critical component of modern language education. With the proliferation of LMOOCs over the past decade, much scholarly attention has been drawn to the potential and opportunities they offer. For example, early studies discussed the prospect of integrating MOOCs into second language education (Perifanou & Economides, 2014b; Qian & Bax, 2017).
Subsequent studies have explored various aspects of LMOOC learning, such as learners’ attention, engagement and autonomy (Ding & Shen, 2022; Jiang & Peng, 2025; Zeng, Zhang, Gao, Xu & Zhang, 2022), learner interaction and communication (Appel & Pujolà, 2021; Martín-Monje, Castrillo & Mañana-Rodríguez, 2018), and learner satisfaction and achievement (Bartalesi-Graf, Agonács, Matos & O’Steen, 2024; Wright & Furneaux, 2021). While extensive research has highlighted the potential of LMOOCs in language education, studies have also identified significant challenges, such as inappropriate course design, ineffective learner support, and unsuitable assessment (Bárcena, Martín-Monje & Read, 2015; Hsu, 2023; Luo & Ye, 2021; Sokolik, 2014). Furthermore, a critical review of the design features of LMOOCs confirmed underlying problems, including limited personalization, interactivity, and flexibility (Chong, Khan & Reinders, 2024). These quality concerns have prompted initial explorations and discussions. Prior studies have addressed questions such as “what constitutes an effective LMOOC” and “how to design a framework for language learning in an LMOOC” (Perifanou, 2014a; Read & Bárcena, 2020; Sokolik, 2014). These discussions, however, are generally grounded in personal experience or researchers’ own perspectives, leaving substantial room for evidence-based investigations into the quality of LMOOCs.

The quality of MOOCs, understood as a multidimensional concept reflecting teaching and learning processes from various perspectives, plays a crucial role in determining their success (Albelbisi, 2020; Stracke & Trisolini, 2021). Building on this notion, this study defines the quality of LMOOCs as the overall quality of their course components, such as instructional design, course content, L2 instruction, learning activities, assessment, and technological environment. Since LMOOCs have gained significant momentum worldwide, there is an urgent need to establish a robust framework for evaluating their quality. To date, limited empirical research has addressed this issue, and no existing instrument gauges learners’ evaluation of LMOOC quality.

The present study introduces an LMOOC Quality Evaluation Scale (LQES) for assessing the quality of LMOOCs from learners’ perspectives. Adopting a mixed-methods approach, the study develops theoretically and empirically grounded rubrics that encompass the design, teaching, and implementation of LMOOCs. It then validates the scale in the Chinese context, which has the largest number of LMOOC learners in the world.¹ To our knowledge, this is the first study of its kind, and it answers Zhang and Sun’s (2023) call for developing evidence-based standards for the quality assurance of LMOOCs. The LQES provides an original and comprehensive framework for understanding the complexities of LMOOC quality. The study is guided by the following research questions:

- RQ1: What are the key dimensions underpinning learners’ evaluation of LMOOC quality?
- RQ2: To what extent is the LQES developed in the current study reliable and valid?

2. Literature review

2.1 Quality standards for MOOCs

Regarding the success of MOOCs, it is widely acknowledged that high enrollment and completion rates alone are insufficient indicators (Khalil & Ebner, 2014). Viewing the success of a MOOC as process-defined rather than outcome-defined, Stephen Downes identified four key success factors: autonomy, diversity, openness, and interactivity (Creelman, Ehlers & Ossiannilsson, 2014). Other scholars argue that the success of MOOCs should be viewed from perspectives such as learners’ background, engagement, and motivation (Rõõm, Luik & Lepp, 2023), as well as course quality, system quality, information quality, and service quality (Albelbisi, 2020). Across these variables, a consensus has emerged that overall MOOC quality is a critical indicator of learner success and significantly influences learners’ satisfaction and continuance intention (Gu, Xu & Sun, 2021; Stracke & Trisolini, 2021).

Quality criteria for MOOCs differ from those for traditional courses, given MOOCs’ unique features such as their asynchronous and self-paced learning mode (Creelman et al., 2014). Although many MOOCs are developed by reputable institutions, their quality and rigor vary widely, and many fail to meet the needs of their massive numbers of learners. Additionally, owing to the large-scale nature of MOOCs, challenges such as inadequate learner support, unsuitable assessment, inappropriate course design, and poor pedagogical methods persist in the field (Conole, 2016; Gamage, Fernando & Perera, 2015; Margaryan, Bianco & Littlejohn, 2015). These issues have captured researchers’ attention and provoked widespread discussion on the quality assurance of MOOCs, and there is now a broad consensus on the urgent need to establish benchmarks for evaluating MOOC quality (Gamage et al., 2015; Hood & Littlejohn, 2016; Xiao, Qiu & Cheng, 2019).

Existing research on MOOC quality has proposed quality dimensions and frameworks from different perspectives. The pedagogical dimension is considered the most important dimension influencing MOOC quality (Stracke & Trisolini, 2021). First, instructional design is a critical indicator of MOOC quality in promoting learner engagement and learning effectiveness (Jung, Kim, Yoon, Park & Oakley, 2019). Employing the first principles of instruction, Margaryan et al. (2015) assessed the instructional design quality of 76 MOOCs and revealed the coexistence of well-packaged content and poor instructional design. Conole (2016) employed her 7Cs framework (Conceptualize, Capture, Communicate, Collaborate, Consider, Combine, and Consolidate) to refine the pedagogical design and implementation of MOOCs.

Second, recent studies have highlighted the significant role of interaction and evaluation design in MOOC learning. Four types of interaction (learner–facilitator, learner–resource, learner–learner, and group–group) act as crucial factors in learners’ perceived quality of MOOCs (Stracke & Tan, 2018), and peer assessment design is a key predictor of learners’ satisfaction and completion in MOOCs (Gamage, Staubitz & Whiting, 2021; Yousef, Chatti, Schroeder & Wosnitza, 2014). Besides the pedagogical dimension, the technical dimension is also acknowledged to be closely related to the quality assurance of MOOCs (Cross et al., 2019; Stracke & Trisolini, 2021). In this dimension, MOOC platforms and delivery systems play fundamental roles in ensuring online learning success (Fernández-Díaz, Rodríguez-Hoyos & Calvo Salvador, 2017). User interface and video features have proved indispensable for optimizing accessibility, navigation, and scaffolding (Deng & Gao, 2023; Maloshonok & Terentev, 2016). Combining these two dimensions, Yousef et al.’s (2014) widely cited study categorized MOOC quality standards into two levels: the pedagogical level and the technological level.

Given the multifaceted nature of MOOC quality, recent research has integrated multiple dimensions into frameworks for assessing it. Among other major quality factors, course content, which comprises teaching videos, supplementary resources, and similar materials (Huang, Zhang & Liu, 2017; Ma, 2018), is an indispensable element influencing MOOC quality (Ucha, 2023). The teaching group and teachers’ competence are also closely linked to learners’ perceived effectiveness of MOOCs (Ferreira, Arias & Vidal, 2022; Huang et al., 2017). Recognizing the need to incorporate these elements into MOOC quality evaluation, many studies have established evaluation frameworks with indicators such as course content, instructional design, teaching group, technology, teaching implementation, and learner support (Dyomin, Mozhaeva, Babanskaya & Zakharova, 2017; Poce, Amenduni, Re & De Medio, 2019; Stracke & Tan, 2018; Yang, Zhou, Zhou, Hao & Dong, 2020).

Although the aforementioned studies have significantly advanced the understanding of MOOC quality, they have certain limitations. First, in terms of research design, quality evaluation standards have mostly been proposed by MOOC designers and researchers. Yet learners, as the recipients and target audience of MOOCs, are an inseparable component of MOOC learning (Creelman et al., 2014), and their perceptions of courses are indicative of the effectiveness and ultimate success of MOOCs. Second, in terms of data analysis, most MOOC quality evaluation frameworks derive from literature reviews or theoretical reflections and rely more on qualitative than quantitative methods (Ma, 2018; Yang et al., 2020). The current situation therefore calls for a more comprehensive evaluation method and more empirical evidence on LMOOC quality from learners’ perspectives.

2.2 Quality evaluation of LMOOCs

Following the practice of xMOOCs, LMOOCs are mostly institutionalized and rigorously structured online language courses (Jitpaisarnwattana, Reinders & Darasawang, 2019). With the proliferation of LMOOCs in recent years, much scholarly attention has been devoted to learner experience and behaviors (Appel & Pujolà, 2021; Bartalesi-Graf et al., 2024; Martín-Monje et al., 2018), learner motivation, autonomy and emotions (Beaven, Codreanu & Creuzé, 2014; Ding & Shen, 2022; Luo & Wang, 2023), and learning outcomes in LMOOC learning (Wright & Furneaux, 2021). LMOOC quality was not a primary focus in the initial stages of LMOOC research, and only a handful of studies have delved into this topic.

Perifanou and Economides (2014b) took a first step in evaluating the quality of LMOOC environments, proposing a six-dimension standard for constructing an adaptive and personalized LMOOC learning environment: content, pedagogy, assessment, community, technical infrastructure, and financial issues. Focusing on the “human dimension,” Bárcena, Martín-Monje and Read (2015) discussed problems affecting LMOOC quality and highlighted the significance of online social interaction, feedback, and learner support in online language learning. Ding and Shen (2020) conducted a content analysis of learner reviews of 41 English LMOOCs, finding that content design, video presentation, and MOOC instructors were of most importance to learners. Based on grounded theory, Luo and Ye (2021) identified key quality criteria of LMOOCs from learners’ perspectives, covering instructor, teaching content, pedagogy, technology, and teaching management dimensions. Hsu (2023) used DeLone and McLean’s (1992) Information Systems Success Model to define the success of LMOOCs, revealing that system quality is a significant factor influencing learners’ intention and satisfaction toward LMOOCs. In sum, existing research on LMOOC quality consists primarily of qualitative studies based on researchers’ reflections or learners’ interviews and comments. Few empirical studies have thoroughly investigated the quality of LMOOCs, and no instrument currently exists to assess learners’ evaluation of LMOOC quality.

With the specific purpose of assessing the quality of MOOCs for L2 learning, the current study introduces the LQES for measuring L2 learners’ evaluation of LMOOC quality. The study integrates perspectives from experts and learners to design and develop a questionnaire for assessing LMOOC quality. As a discipline-specific instrument, it better captures the features of the development, delivery, and implementation of LMOOCs than general MOOC quality frameworks do. Combining quantitative and qualitative methods, the study aims to explore the key dimensions underlying L2 learners’ assessment of LMOOC quality.

3. Developing and validating the LMOOC Quality Evaluation Scale (LQES)

3.1 Methods

The present study adopted a large-scale mixed-methods approach. Data were collected from multiple sources: a review of previous studies, a semi-structured interview and a focus group interview with LMOOC learners, a review by LMOOC experts, and questionnaires on learners’ evaluations of LMOOC quality. Both quantitative and qualitative methods were employed for the scale’s development and validation.

3.2 Participants and settings

To cover different types of language MOOCs, the present study selected 15 representative LMOOCs from three major MOOC platforms in China: icourse.org, XuetangX, and UMOOCs. All of these courses had been open to the public for at least eight sessions, with enrollment numbers ranging from 50,000 to 200,000. Of the 15 LMOOCs, seven are recognized as national-level courses and eight as provincial-level courses. The survey was conducted from March to September 2023. In collaboration with LMOOC providers, an online survey was distributed to learners who had completed their courses to understand their perceptions of LMOOC quality. A total of 2,549 questionnaire responses were collected, of which 2,327 were valid and complete. Respondents came from various regions of mainland China, including Beijing, Zhejiang, Jiangsu, Jiangxi, Jilin, Gansu, Xinjiang, Guangxi, and Guangdong. Of these, 2,315 questionnaires from respondents who gave their consent were retained for further analysis.

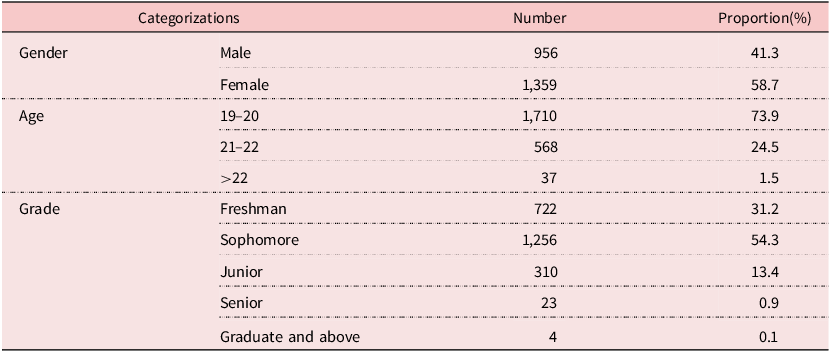

According to the new taxonomy of LMOOCs proposed by Díez-Arcón and Agonács (2024), the 15 LMOOCs in our study cover four modalities: general language learning LMOOCs (e.g. Practical College English Grammar; English Translation Techniques), LMOOCs for academic purposes (e.g. Academic English Listening and Speaking; Academic English Writing), LMOOCs for professional purposes (e.g. Maritime English and Conversations; Advanced Medical English Vocabulary), and culture-oriented LMOOCs (e.g. Impressions of British & American Culture; An Overview of Chinese Culture). All the courses are delivered in English, and their teaching materials are also written in English. During the courses, students were free to pace their LMOOC learning according to their own speed and habits. At the end of the semester, they were asked to complete an online survey reporting their evaluation of the quality of the LMOOC they had taken. Permission from students was obtained before data collection. Table 1 presents the demographic information of our respondents.

Table 1. Demographic information of participants

3.3 Scale development procedures

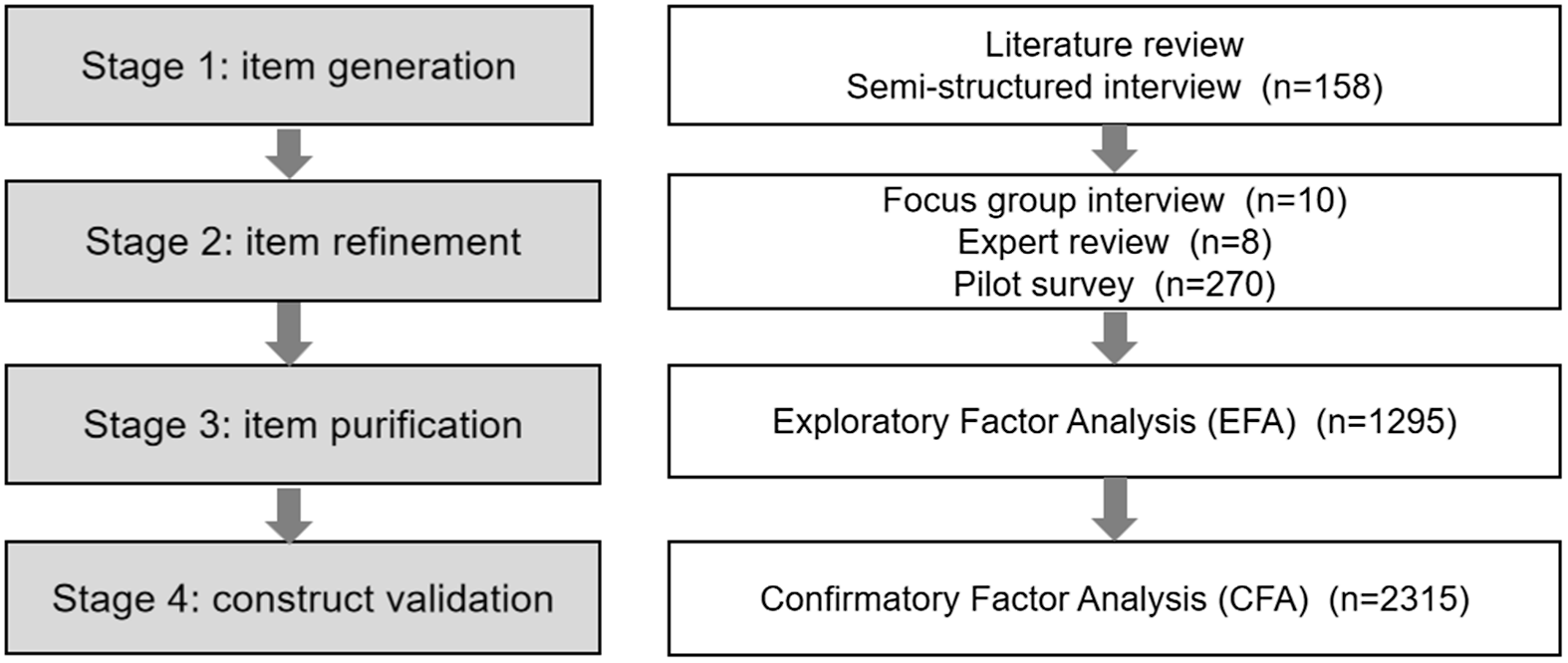

The scale development and validation in this study were carried out in four distinct phases: (1) generation of an initial item pool, (2) refinement of the scale items, (3) exploration of the scale’s factor structure, and (4) validation and confirmation of the final scale. In the first two phases (item generation and refinement), 158 learners (66 male, 92 female) participated in a semi-structured interview, 10 learners (5 male, 5 female) took part in a focus group interview, and 270 learners (156 male, 114 female) participated in a pilot test. In the third phase, an exploratory factor analysis (EFA) was conducted on data from 1,295 students to identify the underlying factor structure of the scale. In the fourth phase, construct validation was performed on all 2,315 students through confirmatory factor analysis (CFA). Figure 1 illustrates the four phases of the scale development process.

Figure 1. Scale development procedures.

3.3.1 Generation of the item pool

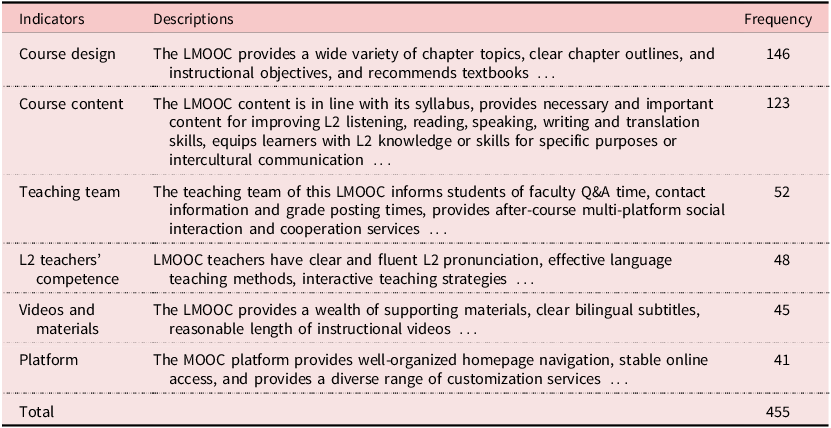

To generate items for the LQES, we combined inductive and deductive methods, as recommended in previous studies on scale development (Cheng & Clark, 2017; Morgado, Meireles, Neves, Amaral & Ferreira, 2017). The inductive approach derives items from observations, reflections, or empirical data that reflect the perspectives of the target audience. The deductive approach draws on an extensive literature review to capture the constructs or dimensions of existing theories or conceptual frameworks. First, since the LQES was developed from learners’ perspectives, we adopted the inductive approach, using a semi-structured interview to capture L2 learners’ perceptions of LMOOC quality. The interview was conducted with 158 students from two universities in southern China, who were asked to recall their recent LMOOC learning experience. Drawing on Li, Li and Zhao’s (2020) qualitative study on the quality of MOOCs, the semi-structured interview focused on three questions: (1) What is the quality of an LMOOC? (2) What characteristics and features does a good-quality LMOOC demonstrate? (3) What are the core dimensions or key issues in constructing a good-quality LMOOC? Content analysis of participants’ answers yielded 455 relevant units of information. Themes related to learners’ perceptions of LMOOC quality were extracted as indicators for scale development. The LMOOC quality indicators and descriptions that emerged from learners’ perceptions are presented in Table 2.

Table 2. Indicators and descriptions in the semi-structured interview

Second, the study applied the deductive approach through a comprehensive review of existing research on the quality of MOOCs and LMOOCs. The literature review aimed to identify key indicators and descriptions in previous research that echoed the findings of the semi-structured interview. Relevant studies revealed categories whose descriptions resembled LMOOC learners’ perceptions, including course objectives, instructional design, course content, learning process, teachers’ competence, learner support, and technical environment. In analyzing the descriptions under these key indicators, we found that the classification of some items overlapped: for example, “The course provides clear introduction to teaching objectives and guidelines for learning this course” concerns both teaching objectives and learner support. We therefore extracted the relevant item descriptions without assigning them to specific quality indicators at this stage. To ensure full comprehension of the scale, the items were translated into Chinese, the native language of our participants. Based on the item descriptions generated from the semi-structured interview and literature review, an initial pool of 43 items was generated. A 5-point Likert scale was adopted as the response format, ranging from 1 (strongly disagree) to 5 (strongly agree). Similar odd-numbered 5-point response scales have been used to investigate learners’ experiences of MOOCs, for example in the development of a MOOC success scale (Albelbisi, 2020), the establishment of a MOOC evaluation system (Tzeng, Lee, Huang, Huang & Lai, 2022), and the exploration of MOOC learners’ motivation (Chen, Gao, Yuan & Tang, 2020).

3.3.2 Refinement of scale items

To enhance item readability, eliminate redundant items, and generate new items for the initial pool, a focus group interview and an LMOOC expert review were then conducted. Ten LMOOC learners from the research team’s university volunteered to join the focus group interview. Participants evaluated the intelligibility of the 43 items, with an additional option, “I don’t understand this statement,” included to assess face validity. Any new item or keyword not previously identified in the initial pool was retained for further review. The focus group results suggested that most items could be easily understood by Chinese LMOOC learners. Of the 43 items, 7 were reworded for clarity, and 4 new items reflecting the features of LMOOC learning in the Chinese context were added to the pool. After the focus group interview, the total number of items selected for further analysis rose to 47 (see Appendix 1 in the supplementary material).

The second step of item refinement was an LMOOC expert review. The study invited eight LMOOC experts, all LMOOC designers or researchers with at least five years of experience developing or researching LMOOCs. An email containing a link to an online survey was sent to all experts. They examined the scale by judging (1) whether the items effectively measure the target constructs, (2) whether the statements are adequate for assessing the quality of LMOOCs, and (3) how many quality indicators can be identified in the item pool. They were also invited to rate their level of agreement with the 47 items and to offer feedback on each item, the structure of the scale, and its overall design. The experts concurred that the items adequately addressed the necessary quality issues of LMOOCs. Five items were further adjusted and reworded according to the experts’ suggestions. Then, by comparing and combining the main indicators, seven quality indicators of LMOOCs were defined: course objectives, course content, assessment, L2 teachers’ competence, teaching implementation, learner support, and technical environment. Meanwhile, items from different frameworks that shared similar meanings were merged, leaving a preliminary set of 41 quality items for further analysis. Finally, the three authors of this paper cross-checked all indicators and items to resolve any disagreements before further application of the scale.

A pilot survey with 270 LMOOC learners from three universities was conducted to reassess the items’ face validity. Learners were asked to reflect on an LMOOC they had recently taken and to respond to the 41 statements on a 5-point Likert scale. At the end of the questionnaire, a yes/no question asked whether they had experienced any difficulties in understanding the items; those who answered “yes” were prompted to specify which item(s) they found unclear or problematic. Participants indicated that all the statements were understandable, although some further refinement was necessary. Finally, the 41 items were revised and classified into seven dimensions: course objectives (6 items), course content (10 items), assessment (3 items), L2 teachers’ competence (8 items), teaching implementation (4 items), learner support (4 items), and technical environment (6 items). The items retained after the pilot survey were then subjected to a purification process (see Appendix 2).
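The pilot’s yes/no follow-up lends itself to a simple tally. The Python sketch below counts how many respondents named each item as unclear and checks that the seven dimension counts listed for the draft add up to 41. The (had_difficulty, flagged_item_ids) record layout and item IDs such as "Q12" are hypothetical; the text does not describe how the pilot responses were stored.

```python
from collections import Counter

# Item counts per dimension after the pilot survey, as listed in the text.
DIMENSIONS = {
    "course objectives": 6,
    "course content": 10,
    "assessment": 3,
    "L2 teachers' competence": 8,
    "teaching implementation": 4,
    "learner support": 4,
    "technical environment": 6,
}
assert sum(DIMENSIONS.values()) == 41  # matches the 41-item draft


def unclear_items(responses):
    """Count how many respondents named each item as unclear.

    `responses` holds (had_difficulty, flagged_item_ids) pairs. This record
    layout and the item IDs are hypothetical, chosen for illustration.
    """
    counts = Counter()
    for had_difficulty, flagged in responses:
        if had_difficulty:
            counts.update(set(flagged))  # count each item once per respondent
    return counts


# Hypothetical pilot records: the second and third respondents answered "yes".
pilot = [(False, []), (True, ["Q12"]), (True, ["Q12", "Q30"])]
print(unclear_items(pilot).most_common())
```

Items with non-zero counts are the candidates for the kind of rewording described above; any cut-off for acting on a count would be a judgment call that the text does not specify.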

3.3.3 Exploratory factor analysis (EFA)

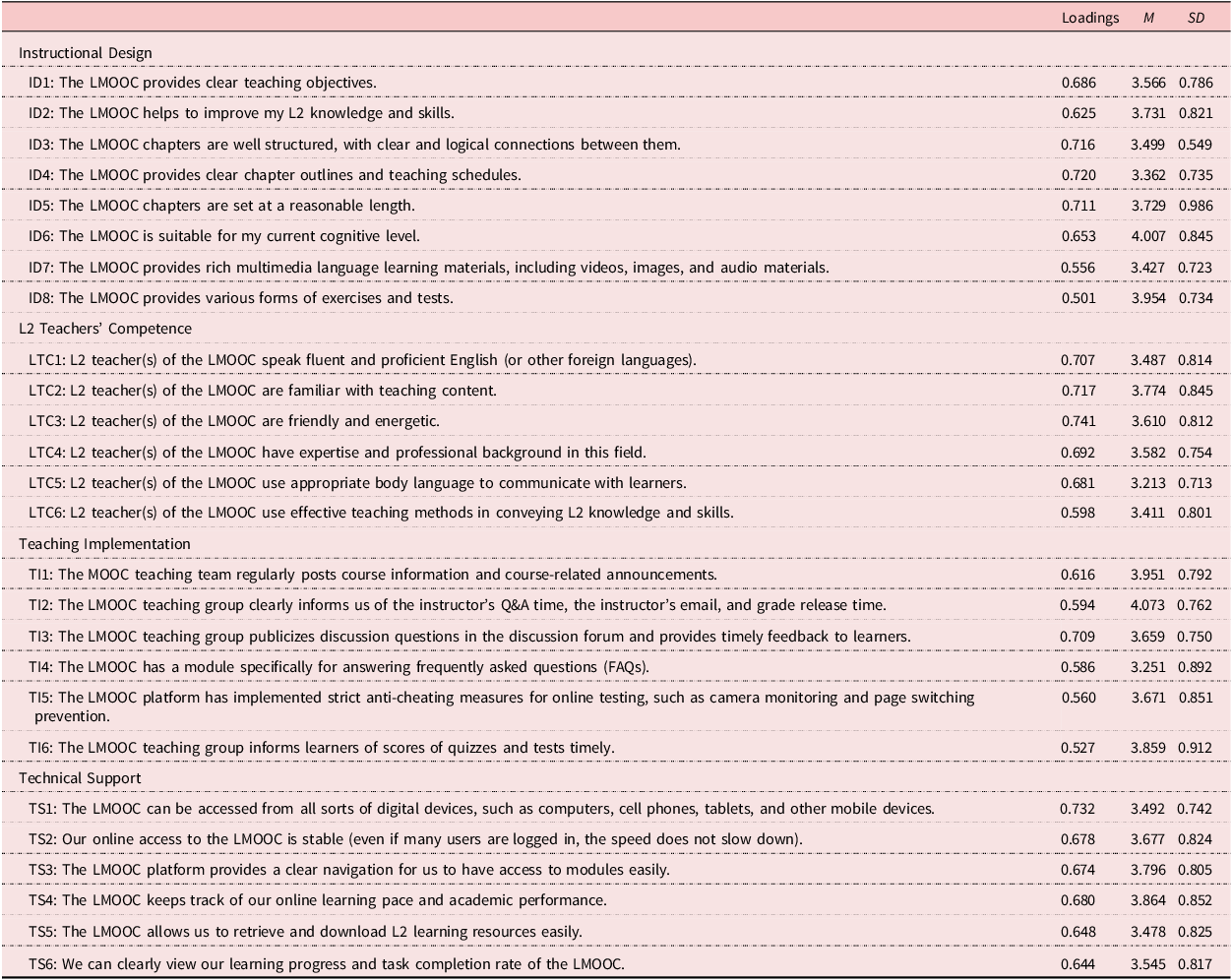

In the item purification stage, we employed EFA to uncover the underlying factor structure of the 41-item scale. EFA is commonly used in the early stages of scale development to refine and optimize items, laying the groundwork for subsequent confirmatory analysis. In the present study, EFA was conducted on data from 1,295 LMOOC learners from eight universities. We used SPSS Version 26.0 to analyze the internal consistency and reliability of the draft questionnaire. We first conducted Bartlett’s test of sphericity and computed the Kaiser–Meyer–Olkin (KMO) measure of sampling adequacy. The results (KMO = 0.985, above the 0.60 threshold; Bartlett’s test p < 0.001) showed that the data were suitable for factor analysis. EFA with principal axis factoring and promax rotation was then performed on the preliminary set of 41 items. Fifteen items with loadings below 0.500, or that did not load onto any factor, were removed. For the remaining 26 items, the retained factors had eigenvalues greater than 1. As shown in Table 3, the EFA yielded a four-factor solution for the LQES, with item loadings on their respective factors ranging from 0.501 to 0.741, all above the 0.500 cut-off.

Table 3. Factor loadings of the items for the LMOOC Quality Evaluation Scale (LQES)
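For readers who want to reproduce the two suitability checks outside SPSS, the sketch below computes Bartlett’s test statistic and the overall KMO measure from a respondents × items matrix using NumPy. It follows the textbook formulas (Bartlett’s χ² = −(n − 1 − (2p + 5)/6)·ln|R| with p(p − 1)/2 degrees of freedom; KMO as summed squared correlations divided by summed squared correlations plus summed squared partial correlations) and is not SPSS’s own implementation. The simulated data are illustrative only and are not the study’s responses.

```python
import numpy as np


def bartlett_sphericity(data: np.ndarray) -> tuple[float, int]:
    """Bartlett's test of sphericity for an (n respondents x p items) matrix.

    Tests H0: the item correlation matrix is an identity matrix.
    Returns the chi-square statistic and its degrees of freedom.
    """
    n, p = data.shape
    corr = np.corrcoef(data, rowvar=False)
    _, logdet = np.linalg.slogdet(corr)  # log|R|, numerically stable for many items
    chi2 = -(n - 1 - (2 * p + 5) / 6) * logdet
    df = p * (p - 1) // 2
    return float(chi2), df


def kmo(data: np.ndarray) -> float:
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    corr = np.corrcoef(data, rowvar=False)
    inv = np.linalg.inv(corr)
    # Partial correlations (each pair controlling for all other items),
    # derived from the inverse of the correlation matrix.
    scale = np.sqrt(np.outer(np.diag(inv), np.diag(inv)))
    partial = -inv / scale
    off_diag = ~np.eye(corr.shape[0], dtype=bool)
    r2 = np.sum(corr[off_diag] ** 2)
    q2 = np.sum(partial[off_diag] ** 2)
    return float(r2 / (r2 + q2))


# Illustrative data only: 500 simulated respondents, 6 items driven by one factor.
rng = np.random.default_rng(0)
factor = rng.normal(size=(500, 1))
simulated = 0.8 * factor + 0.6 * rng.normal(size=(500, 6))

chi2_stat, df = bartlett_sphericity(simulated)
kmo_value = kmo(simulated)
print(f"Bartlett chi2 = {chi2_stat:.1f} (df = {df}), KMO = {kmo_value:.3f}")
```

With real data, `simulated` would be replaced by the 1,295 × 41 response matrix; a p-value for Bartlett’s statistic can then be read from a chi-square distribution with `df` degrees of freedom (for example with `scipy.stats.chi2.sf`).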

As shown in the factor structure presented in Appendix 3, eight items from the original factors of course objectives, course content, and assessment loaded onto a new factor, labeled Instructional Design. Six items loaded onto their original factor, L2 Teachers’ Competence. Six items from the original factors of assessment, teaching implementation, and learner support loaded onto the factor of Teaching Implementation. Six items from the original factors of learner support and technical environment loaded onto a new factor, labeled Technical Support. The four factors explained 42.28%, 13.27%, 8.62%, and 5.74% of the variance, respectively, accounting for 69.91% in total, above the cut-off value of 50%. The Chinese version of the questionnaire is presented in Appendix 4.
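The retention rule used in the EFA (remove items whose loadings fall below 0.500, keep the rest on the factor they load on) and the variance arithmetic can be sketched in Python. This is a simplified illustration that assumes each item is assigned to the factor with its largest absolute pattern loading; the toy loading matrix and the labels "Q1" to "Q4", "F1" and "F2" are hypothetical and are not the study’s Table 3.

```python
import numpy as np

LOADING_CUTOFF = 0.500  # items loading below this value were removed


def assign_items(loadings: np.ndarray, item_ids: list[str], factor_names: list[str]):
    """Assign each item to the factor on which it loads most strongly.

    Items whose largest absolute loading is below the cutoff are dropped.
    Returns ({factor: [items]}, [dropped items]).
    """
    assignment = {name: [] for name in factor_names}
    dropped = []
    for item, row in zip(item_ids, np.abs(loadings)):
        best = int(np.argmax(row))
        if row[best] < LOADING_CUTOFF:
            dropped.append(item)
        else:
            assignment[factor_names[best]].append(item)
    return assignment, dropped


# Hypothetical pattern matrix: 4 items x 2 factors (not the study's Table 3).
toy_loadings = np.array([
    [0.72, 0.10],
    [0.15, 0.66],
    [0.30, 0.41],
    [0.55, 0.20],
])
assignment, dropped = assign_items(toy_loadings, ["Q1", "Q2", "Q3", "Q4"], ["F1", "F2"])
print(assignment, dropped)

# Variance explained by the four LQES factors, as reported in the text (%).
variance_explained = [42.28, 13.27, 8.62, 5.74]
total = round(sum(variance_explained), 2)
print(f"Total variance explained: {total}% (above 50%: {total > 50})")
```

In the toy matrix, Q3’s strongest loading (0.41) misses the cut-off and is dropped, mirroring how 15 of the 41 draft items were removed.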

3.3.4 Confirmatory factor analysis (CFA)

In accordance with standard procedures for scale development and validation (e.g. DeVellis, 2003; Hair, Sarstedt, Ringle & Mena, 2012), CFA was performed to evaluate the validity and reliability of the four-factor scale. To confirm the hypothesized four-factor structure, CFA with maximum likelihood estimation was conducted on the remaining 26 items in AMOS, using a larger sample of 3,508 LMOOC learners from 16 universities. Model fit was assessed with the indices recommended by Shi, Lee and Maydeu-Olivares (2019): the ratio of chi-square to degrees of freedom (χ²/df), root-mean-square error of approximation (RMSEA), standardized root-mean-square residual (SRMR), Tucker–Lewis index (TLI), goodness-of-fit index (GFI), normed fit index (NFI), and comparative fit index (CFI). According to Perry, Nicholls, Clough and Crust (2015), a model has a reasonable fit if it meets the following criteria: (1) χ²/df ranges from 2 to 3, with smaller values preferred; (2) GFI is above 0.90; (3) RMSEA ranges from 0.05 to 0.08, with values below 0.05 indicating good fit (with the lower limit of the 90% confidence interval below 0.05 and the upper limit under 0.10); (4) SRMR is below 0.10; (5) NFI exceeds 0.90; (6) TLI exceeds 0.90; and (7) CFI exceeds 0.90.
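These cut-offs can be encoded as a small checklist. The Python sketch below tests the indices reported for the LQES CFA (χ²/df = 1.711, GFI = 0.96, RMSEA = 0.02, SRMR = 0.01, NFI = 0.92, TLI = 0.97, CFI = 0.96) against them, and adds one common point-estimate formula for RMSEA, √(max(χ² − df, 0) / (df(N − 1))), to show how that index relates to χ² and sample size. Two assumptions: the χ²/df range of 2 to 3 is read as an upper bound of 3 (smaller values being preferred), and the χ², df, and N used with `rmsea` are made-up numbers, not the study’s.

```python
import math

# Cut-offs for "reasonable fit" as listed in the text (after Perry et al., 2015).
# Assumption: chi2/df <= 3 counts as acceptable, since smaller values are preferred.
CRITERIA = {
    "chi2/df": lambda v: v <= 3.0,
    "GFI": lambda v: v > 0.90,
    "RMSEA": lambda v: v <= 0.08,
    "SRMR": lambda v: v < 0.10,
    "NFI": lambda v: v > 0.90,
    "TLI": lambda v: v > 0.90,
    "CFI": lambda v: v > 0.90,
}


def rmsea(chi2: float, df: int, n: int) -> float:
    """RMSEA point estimate (one common formulation, using N - 1)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def check_fit(indices: dict[str, float]) -> dict[str, bool]:
    """Return pass/fail for each reported index against its cut-off."""
    return {name: CRITERIA[name](value) for name, value in indices.items()}


# Indices reported for the four-factor LQES model.
reported = {"chi2/df": 1.711, "GFI": 0.96, "RMSEA": 0.02, "SRMR": 0.01,
            "NFI": 0.92, "TLI": 0.97, "CFI": 0.96}
print(check_fit(reported))
```

When χ² does not exceed df, the formula truncates RMSEA at zero, which is why very well-fitting models in large samples can report values near 0.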

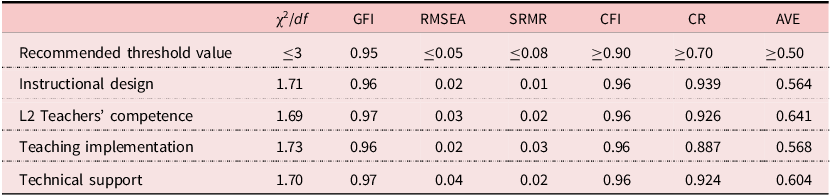

Based on multiple criteria (see Table 4), the initial CFA results suggested that the model fit the data well, with χ²/df = 1.711, GFI = 0.96, RMSEA = 0.02, SRMR = 0.01, NFI = 0.92, TLI = 0.97, and CFI = 0.96. These findings indicated that the four-factor model aligned well with the sample data. For each of the four dimensions (Instructional Design, L2 Teachers’ Competence, Teaching Implementation, and Technical Support), the indices (χ²/df, GFI, RMSEA, SRMR, and CFI) also showed satisfactory values.

Table 4. Evaluation of the goodness of fit of the scale

Note. GFI = goodness-of-fit index; RMSEA = root-mean-square error of approximation; SRMR = standardized root-mean-square residual; CFI = comparative fit index; CR = composite reliability; AVE = average variance extracted.

Additionally, composite reliability (CR) was used to assess reliability, reflecting the proportion of true variance relative to the total variance of the scores. According to Ab Hamid, Sami and Mohmad Sidek (2017), CR values above 0.70 indicate good reliability, and values between 0.60 and 0.70 indicate acceptable reliability. Convergent validity was assessed using the average variance extracted (AVE), which quantifies the extent to which the variance in observed variables is accounted for by their corresponding latent constructs. According to Cheung, Cooper-Thomas, Lau and Wang (2024), AVE is a stricter criterion than CR, so researchers may still conclude adequate convergent validity on the basis of CR even when AVE falls below 0.50. In this study, all CR values exceeded 0.80, well above the recommended threshold of 0.70, confirming high reliability. All AVE values were also above 0.50, demonstrating good convergent validity.
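For reference, CR and AVE are usually computed from the standardized loadings $\lambda_i$ of the $k$ items on a factor, assuming uncorrelated measurement errors so that each item’s error variance is $1 - \lambda_i^2$:

$$
\mathrm{CR} = \frac{\left(\sum_{i=1}^{k} \lambda_i\right)^{2}}{\left(\sum_{i=1}^{k} \lambda_i\right)^{2} + \sum_{i=1}^{k} \left(1 - \lambda_i^{2}\right)}, \qquad
\mathrm{AVE} = \frac{1}{k} \sum_{i=1}^{k} \lambda_i^{2}
$$

With positive loadings and at least two items, $\left(\sum_{i} \lambda_i\right)^{2} > \sum_{i} \lambda_i^{2}$, which implies $\mathrm{AVE} < \mathrm{CR}$ for the same set of loadings. This is why AVE is treated as the stricter of the two criteria.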

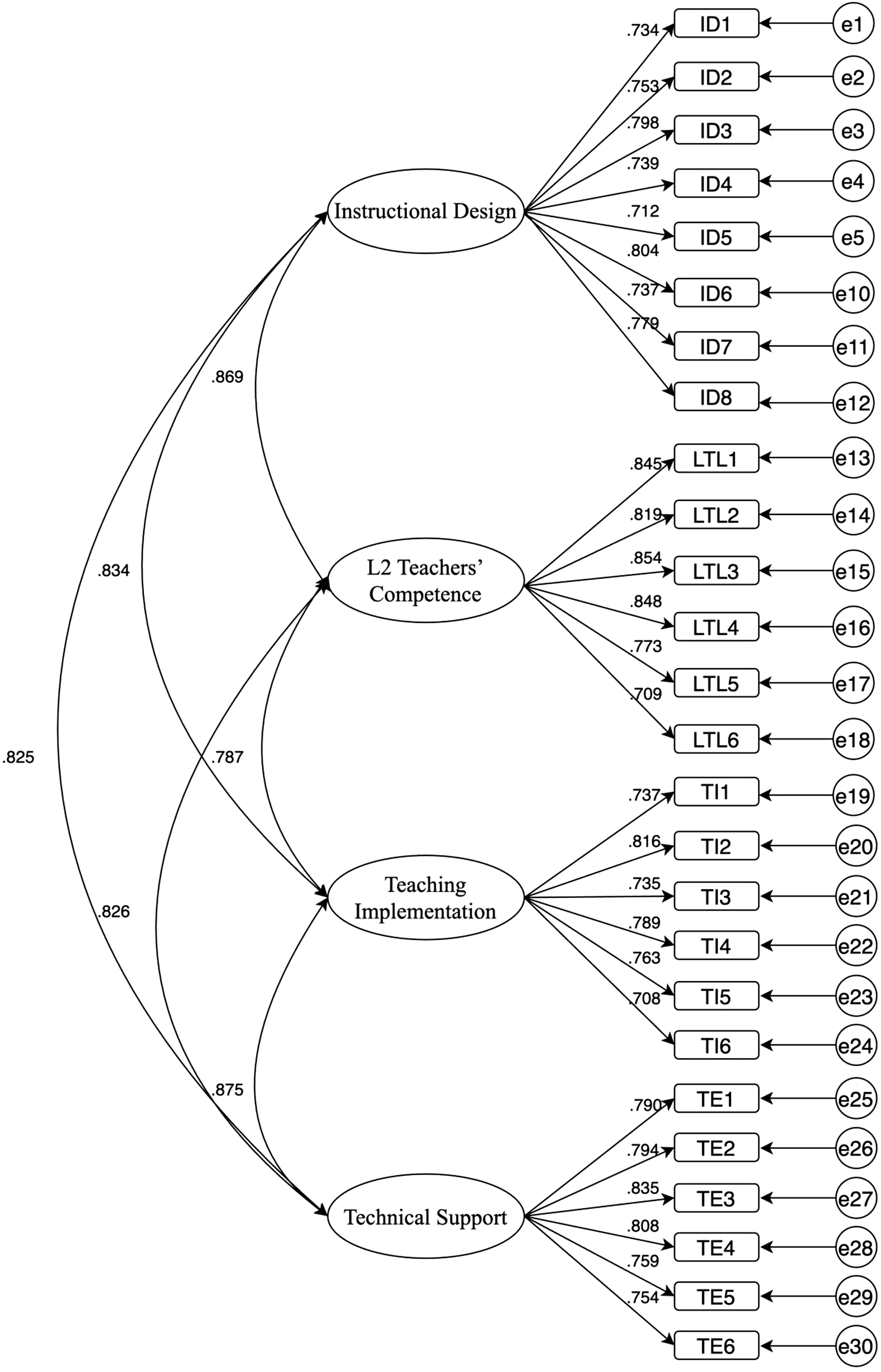

Figure 2 presents the standardized path diagram for the four-factor structure of the LQES. The finalized LQES includes 26 items: 8 for Instructional Design, 6 for L2 Teachers’ Competence, 6 for Teaching Implementation, and 6 for Technical Support. The structure coefficients (standardized regression weights) shown in Figure 2 all exceeded the threshold of 0.70, ranging from 0.712 to 0.804 for Instructional Design, 0.709 to 0.854 for L2 Teachers’ Competence, 0.708 to 0.816 for Teaching Implementation, and 0.754 to 0.835 for Technical Support. Furthermore, three alternative models were tested: a higher-order model in which Instructional Design and L2 Teachers’ Competence were combined into a second-order factor, a bifactor model, and a general factor model. Among these models, the hypothesized four-factor structure showed the best overall model fit and the most favorable reliability estimates.

Figure 2. Structure coefficients for the LMOOC Quality Evaluation Scale (LQES) Model.

4. Discussion and conclusion

In the present study, we developed an LMOOC Quality Evaluation Scale (LQES) for measuring L2 learners’ perceptions of LMOOC quality. The 26-item LQES consisted of four components: Instructional Design (8 items), L2 Teachers’ Competence (6 items), Teaching Implementation (6 items), and Technical Support (6 items).

Specifically, the Instructional Design dimension included items related to LMOOC objectives, content, and assessment, reflecting how LMOOCs are designed and developed and how learners’ academic performance is measured. Its three indicators (objectives, content, and assessment) align with Stracke and Trisolini’s (2021) finding that instructional design is the main pedagogical component of online courses, comprising learning objectives, design approaches, course content, and assessment. The EFA results revealed that Instructional Design accounted for the largest proportion of the total variance of the LQES, echoing the view that instructional design is “a key component of the overall quality and pedagogic effectiveness of a learning experience” (Margaryan et al., 2015: 78). Compared with the other dimensions, LMOOC learners paid more attention to the clarity of teaching objectives, the richness of teaching content (videos and supplementary materials), and the diversity of assessment forms. For example, in the semi-structured interviews, student Yu described an LMOOC she had taken as “a well-designed course with an introduction video for each chapter just like the trailer for a movie.” Student Li said jokingly, “I do not care how long an LMOOC video lasts and I just hope to learn some interesting and useful content different from my textbooks.”

Although previous studies have confirmed the critical role of the teacher in determining MOOC quality (Huang et al., 2017; Ma, 2018; Qiu & Ou, 2015), few empirical studies have examined L2 teachers’ competence as a criterion for evaluating LMOOC quality. In this study, the L2 Teachers’ Competence dimension included items about L2 teachers’ oral English proficiency, expertise, and familiarity with the course, as well as their teaching manner and methods. This dimension was the second most influential factor in shaping learners’ perceptions of LMOOC quality. Student Xi mentioned her favorite LMOOC, in which “the teacher speaks fluent and clear English with an appropriate speed, which helps us to fully understand the complicated academic writing skills.” In contrast, student Zhang described his disappointment with an LMOOC in which the teacher spent 30 minutes reading PowerPoint slides about translation technology without any facial expression.

In addition, the Teaching Implementation dimension involves maintaining effective communication, ensuring timely feedback, upholding academic integrity, and fostering a harmonious online learning atmosphere in LMOOC teaching. This dimension was the third most influential factor in shaping learners’ perceptions of LMOOC quality, and it plays a pivotal role in quality evaluation frameworks for MOOCs (Yang et al., 2020). Learners attached great importance to the feedback they received from peers and teachers. Student Wu said she was excited about a storytelling task in the discussion forum of a cultural LMOOC, in which learners were asked to share, in English, a story about cultural heritage from their hometown. Teachers then liked and commented on the stories, which made the learners feel encouraged and rewarded. However, many students expressed disappointment with the scarcity of teacher–student and student–student interaction in LMOOC discussion forums. These findings are consistent with previous studies suggesting that the lack of personalized guidance and interaction for a heterogeneous target group remains an intrinsic problem in MOOC implementation (Bárcena et al., 2015; Castellanos-Reyes, 2021; Stracke & Tan, 2018).

Besides these three dimensions, Technical Support was the fourth most influential factor in learners’ evaluations of LMOOC quality. This dimension included items related to the accessibility of LMOOC resources and the stability and multi-functionality of LMOOC platforms. Learners’ evaluation of technical support reflects their relationship with the technology underlying LMOOCs, such as how platforms help learners navigate, search, study, download materials, and track their learning progress. Many learners in this study described current LMOOC platforms as “user friendly” and found it easy to proceed with the help of the navigation page. However, accessibility problems remain: student Liu complained that “some LMOOCs are not attainable after the term is over.” Together, these four quality indicators reveal the key components of LMOOC quality and reflect L2 learners’ actual expectations of LMOOCs.

Overall, the reliability and validity analyses indicate that the 26-item LQES has good psychometric properties and is a reliable and valid tool for measuring learners’ evaluations of LMOOC quality. The CFA results further demonstrate that the four-factor measure fits the data well, with each factor showing strong convergent and discriminant validity. These results suggest that the LQES, developed through a large-scale mixed-methods approach, is a promising instrument for measuring the quality and effectiveness of LMOOC learning.

The quantitative and qualitative findings from developing the LQES have theoretical and practical implications. Theoretically, the four-factor structure of the LQES offers a better understanding of the complexities of LMOOC learning, and this study addresses the notable lack of multidimensional instruments for assessing LMOOC quality. Through large-scale surveys, semi-structured interviews, focus group discussions, and expert reviews, we investigated learners’ perceptions of LMOOC quality, contributing to the theoretical advancement of MOOC evaluation by integrating dimensions specific to language education into the assessment model. Pedagogically, the findings offer several implications for LMOOC practitioners. First, the LQES provides scientifically rigorous standards for measuring LMOOC quality and can serve as a diagnostic tool that gives feedback to LMOOC educators. These data-driven insights show instructional designers and teachers how learners evaluate the key factors that contribute to high-quality LMOOC experiences, so that they can adjust pedagogical approaches and resources to enhance learner engagement, motivation, and satisfaction, leading to improved learning outcomes. In the near future, generative AI could be used to create diverse teaching materials, including quizzes, interactive exercises, and simulations, while AI chatbots and virtual assistants could answer learners’ questions in real time, provide guidance, and facilitate discussions. Integrating such AI technologies could deliver more interactive, personalized, and adaptive learning experiences and improve the pedagogical and technological quality of LMOOCs. Second, the LQES can foster professional development among language educators. By understanding the criteria for high-quality LMOOCs, educators can adopt innovative instructional methods and refine their courses regularly to ensure continuous improvement. By identifying successful LMOOC teaching practices and case studies through the scale, educators can also share valuable insights with peers, fostering collaboration within the language education community. Finally, because the major MOOC platforms worldwide offer xMOOCs, the findings of this study, although obtained in the Chinese context, may have implications for other EFL contexts. How Chinese L2 learners perceive and evaluate LMOOC quality may offer useful insights for LMOOC learners and instructors in other countries and regions.

We acknowledge several limitations of this study. First, although the 2,315 learners from 15 LMOOCs cover a wide range of participants and LMOOC themes, they cannot be considered representative of all LMOOC learners. Second, because this study was conducted in a Chinese EFL context, versions of the LQES translated into other languages require further validation. Lastly, future longitudinal studies could reveal dynamic changes in learners’ perceptions and evaluations of LMOOC quality. To conclude, the present study develops an LMOOC Quality Evaluation Scale (LQES) for the first time and elucidates the key quality indicators underpinning L2 learners’ evaluation of LMOOC quality. The LQES paves the way for developing instruments to measure the quality and effectiveness of other technology-enhanced language learning practices.

Supplementary material

To view supplementary material for this article, please visit https://doi.org/10.1017/S0958344025000072

Data availability statement

Data available on request from the authors.

Authorship contribution statement

Rong Luo: Conceptualization, Project administration, Methodology, Writing – original draft; Rou Huang: Data collection, Writing – review & editing; Gaojun Shi: Methodology, Data analysis, Writing – review & editing.

Funding disclosure statement

This research is supported by the Fundamental Research Funds for the Central Universities (Qingfeng Project of Zhejiang University) and Zhejiang Provincial Program for Educational Science Research (2025SCG 229).

Competing interests statement

The authors declare no competing interests.

Ethical statement

Ethical review and approval were not required for the study in accordance with the local legislation and institutional requirements. Informed consent was gathered from all participants.

GenAI use disclosure statement

The authors declare no use of generative AI.

About the authors

Rong Luo is an associate professor at the School of International Studies, Zhejiang University. Her research interests include language MOOCs (LMOOCs), technology-enhanced second language acquisition, and media education.

Rou Huang is a postgraduate student at the School of International Studies, Hangzhou Normal University. Her research interests are LMOOCs and learner autonomy in technology-enhanced language learning.

Gaojiao Shi is a PhD student at the College of Education, Zhejiang University. His research interests include online learning and educational technology.