1. Introduction

This article provides a survey of several works in the research area known as automatic amortized resource analysis (AARA). We primarily aim at a general overview of the state of the art in AARA. However, we also highlight Martin Hofmann’s leading role in the development of AARA and offer some views on the historical context and the influence of Hofmann’s work. While some of these views are subjective, we found it fitting to include them in the context of this special issue.

AARA is a technique for automatically or semi-automatically deriving symbolic bounds on the resource consumption of programs at compile time. A resource is a quantity, such as time and space, that is consumed during the evaluation of a program. By symbolic bound, we mean that the bound of a program is given by a function of the input to that program. Such symbolic bounds should of course be meaningful to a user and computationally simple. In the case of AARA, bounds are usually given as simple arithmetic expressions. Research on AARA has focused on the foundational and algorithmic aspects of automatic resource analysis, but the work has been motivated by applications such as resource certification of embedded systems (Hammond et al. Reference Hammond, Dyckhoff, Ferdinand, Heckmann, Hofmann, Loidl, Michaelson, Sérot and Wallace2006) and smart contracts (Das et al. Reference Das, Balzer, Hoffmann, Pfenning and Santurkar2021).

AARA has initially been developed by Hofmann and Jost (Reference Hofmann and Jost2003) in 2003 to derive linear upper bounds on the heap-space usage of first-order functional programs with an eager evaluation strategy. Subsequently, AARA has been applied to derive non-linear (Hoffmann and Hofmann Reference Hoffmann and Hofmann2010b; Hofmann and Moser Reference Hofmann and Moser2018; Kahn and Hoffmann Reference Kahn and Hoffmann2020) worst-case (upper) bounds and best-case (lower) bounds (Ngo et al. Reference Ngo, Dehesa-Azuara, Fredrikson and Hoffmann2017) for other resources such as time or user-defined cost metrics (Jost et al. Reference Jost, Loidl, Hammond, Scaife and Hofmann2009a). It has also been extended to additional language features including higher-order functions (Jost et al. Reference Jost, Hammond, Loidl and Hofmann2010), object-oriented programs (Bauer et al. Reference Bauer, Jost, Hofmann, Sutcliffe and Veanes2018; Hofmann and Jost Reference Hofmann and Jost2006; Hofmann and Rodriguez Reference Hofmann and Rodriguez2009), lazy evaluation (Simões et al. Reference Simões, Vasconcelos, Florido, Jost and Hammond2012; Vasconcelos et al. Reference Vasconcelos, Jost, Florido and Hammond2015), parallel evaluation (Hoffmann and Shao Reference Hoffmann and Shao2015), programs with side-effects (Atkey Reference Atkey2010; Carbonneaux et al. Reference Carbonneaux, Hoffmann and Shao2015), probabilistic programs (Ngo et al. Reference Ngo, Carbonneaux and Hoffmann2018; Wang et al. Reference Wang, Kahn and Hoffmann2020), and concurrent programs with session types (Das et al. Reference Das, Hoffmann and Pfenning2018). Traditionally, AARA has been formulated with an affine type system (Hofmann and Jost Reference Hofmann and Jost2003; Hoffmann et al. Reference Hoffmann, Das and Weng2017; Jost et al. Reference Jost, Hammond, Loidl and Hofmann2010), but it can also be formulated as a Hoare-style logic (Carbonneaux et al. Reference Carbonneaux, Hoffmann and Shao2015; Carbonneaux et al. Reference Carbonneaux, Hoffmann, Reps and Shao2017) or an extension of separation logic (Atkey Reference Atkey2010; Charguéraud and Pottier Reference Charguéraud and Pottier2019; Mével et al. Reference Mével, Jourdan and Pottier2019). Table 1 lists most of these works and their primary features.

Table 1. Overview body of work

Despite the relatively large number of publications on the subject, the development of AARA has been remarkably homogeneous and most of the different features and extensions are compatible with each other. All the aforementioned works share the following key principles of AARA:

-

The compile-time analysis is described by inductively defined and efficiently checkable inference rules, so that derivation trees are proofs of resource bounds. Moreover, the inference of derivation trees is a two-step process that starts with a traditional inference method such as Hindley–Milner–Damas and proceeds with the addition of resource annotations that are derived by solving a numeric optimization problem, usually a linear program.

-

Bound analysis is based on the potential method of (manual) amortized analysis (Tarjan Reference Tarjan1985) and can take into account amortization effects across a sequence of operations.

-

The analysis is proven sound with respect to a precise definition of resource consumption that is given by a cost semantics that associates closed programs with an evaluation cost.

In this survey, we aim to focus on the high-level ideas of AARA. However, to keep this article concise, we do so using some notions and technical terms from the literature without their formal definitions. Readers that are familiar with basic programming language concepts such as inductive definitions, type systems, operational semantics, program logics, and linear logic shall be able to understand the notions without consulting previous work.

We decided to roughly follow the chronological development of AARA, grouping together individual topics if it is beneficial for a streamlined presentation. We start by briefly discussing Hofmann’s work in implicit computational complexity that led to the development of AARA in Section 2. We then cover AARA with linear potential functions in Section 3, higher-order in Section 4, non-linear potential functions in Section 5, and lazy evaluation in Section 6. AARA for imperative and object-oriented programs is discussed in Section 7, and Section 8 covers parallel evaluation and concurrency. Finally, Section 9 discusses bounds on the expected cost of probabilistic programs, Section 10 contains remarks about larger research projects that supported the development of AARA, and Section 11 contains concluding remarks.

2. Setting the Stage

AARA originated in the research area of implicit computation complexity (ICC). The goal of ICC is to find natural characterizations of complexity classes through programming languages. During his time in Darmstadt, Hofmann became interested in the thorny question of characterizing the class PTIME with higher-order languages that had been attracting widespread interest at the time. In a series of articles (Hofmann Reference Hofmann1999; Hofmann Reference Hofmann2002; Hofmann Reference Hofmann2003), he was able to prove a beautiful and ingenious result: PTIME corresponds to higher-order programs with structural recursion if the computation is non-size-increasing, a property that could be elegantly encoded with local type rules based on linear logic. More information about ICC and Hofmann’s work in the area can be found in the survey articles by Hofmann (Reference Hofmann2000a) and Dal Lago (Reference Dal Lago2022).

The line of work reviewed in this survey could be considered to be started by Hofmann in an article in 2000 (Hofmann Reference Hofmann2000b). In this work, he shows how general-recursive first-order functions can be compiled into a fragment of the C programming language without dynamic memory allocation (“malloc-free C code”), thus demonstrating the links of his theoretical results in ICC (Hofmann Reference Hofmann1999; Hofmann03) to practical programs. The essential idea was the introduction of a linear (or affine) resource type

![]() $\diamond$

, whose values represent freely usable memory locations for storage, encoded in C by pointers of type void *. Considering

$\diamond$

, whose values represent freely usable memory locations for storage, encoded in C by pointers of type void *. Considering

![]() $\diamond$

to be a linear type is perfectly natural, since a free memory location can obviously only be used once to store data, after which it is no longer free. While the programs are permitted to create and destroy dynamic data structures such as lists, each creation must be justified by spending a value of type

$\diamond$

to be a linear type is perfectly natural, since a free memory location can obviously only be used once to store data, after which it is no longer free. While the programs are permitted to create and destroy dynamic data structures such as lists, each creation must be justified by spending a value of type

![]() $\diamond$

. Vice versa, destruction may return values of type

$\diamond$

. Vice versa, destruction may return values of type

![]() $\diamond$

to be recycled again elsewhere. Thus, such programs must receive all the memory to be used during their execution through their input.

$\diamond$

to be recycled again elsewhere. Thus, such programs must receive all the memory to be used during their execution through their input.

Now that a first-order linear program’s dynamic memory usage could be read off from its type signature by the number of arguments of type

![]() $\diamond$

within its input, Hofmann asked how the usage of

$\diamond$

within its input, Hofmann asked how the usage of

![]() $\diamond$

types could be automatically inserted into an ordinary first-order linear functional programming language without a programmer’s intervention. In 2001, Hofmann posed this question to the second author, who answered it in his diploma thesis (Jost Reference Jost2002).

$\diamond$

types could be automatically inserted into an ordinary first-order linear functional programming language without a programmer’s intervention. In 2001, Hofmann posed this question to the second author, who answered it in his diploma thesis (Jost Reference Jost2002).

After a first inference for the direct insertion of

![]() $\diamond$

turned out to be feasible, but cumbersome, it quickly became clear that the

$\diamond$

turned out to be feasible, but cumbersome, it quickly became clear that the

![]() $\diamond$

type should be abstracted away into natural number annotations. This avoids unnecessary distinctions between arguably equivalent types such as

$\diamond$

type should be abstracted away into natural number annotations. This avoids unnecessary distinctions between arguably equivalent types such as

![]() $(A \otimes \diamond) \rightarrow B$

and

$(A \otimes \diamond) \rightarrow B$

and

![]() $(\diamond \otimes A) \rightarrow B$

, which would have prevented completeness of the inference in a trivial way. Instead, a type like

$(\diamond \otimes A) \rightarrow B$

, which would have prevented completeness of the inference in a trivial way. Instead, a type like

![]() $(\diamond \otimes A \otimes \diamond)$

, for example, would be written as (A, 2) using a natural number to denote the contained amount of

$(\diamond \otimes A \otimes \diamond)$

, for example, would be written as (A, 2) using a natural number to denote the contained amount of

![]() $\diamond$

types.

$\diamond$

types.

It was then possible to infer the actual natural number values for these type annotations through integer linear programming (ILP), an outcome that Hofmann had already hoped for from the onset, since he had picked the second author due to his study focus on ILP and functional programming. The constraints of the ILP can be constructed step-by-step along a standard type derivation for a functional program. Examining program examples then led to dropping the restriction to integer solutions of the linear programming (LP). One class of such examples are list-manipulating programs that match on multiple list elements in one recursive iteration, such as the summation of each pair of two consecutive numbers within a list.

Hofmann was outright displeased by the need to allow rational solutions, as this destroyed his compilation technique into malloc-free C code, since there are no pointers to fractional memory locations. However, the second author observed that integer solutions to the linear program could easily be constructed from rational solutions. This is surprising, since generally LP is in P while ILP is NP-complete. Eventually Hofmann and the second author could prove that the derived linear programs always have a benign shapeFootnote 1 that allows the construction of integral solutions from rational solutions, which then formed one of the main results of their POPL article that introduced AARA (Hofmann and Jost Reference Hofmann and Jost2003) as a technique for deriving heap-space bounds for first-order functional programs.

3. Linear Amortized Analysis

This section provides an understanding of the basic mechanics of AARA, based on the first works that dealt with linear resource bounds. We first construct the type rules that form the basis of AARA from their intuitive descriptions. Next, we provide an analysis of a simple program example in Section 3.2 and then discuss adapting the analysis for different resources in Section 3.3. The general soundness proof is sketched afterwards in Section 3.4, and we conclude this section on how AARA is connected to previous manual amortization techniques in Section 3.5. The key observation of this section is that the resource usage is counted relative to the use of data constructors.

3.1 Outline of the type system

For completeness, the language constructs used in this section and most of this article are listed in Figure 1. The expressions are mostly standard, except the last two:

![]() $\mathop{\,\mathsf{tick }\,}$

allows programmers to define the resource usage of a program: If

$\mathop{\,\mathsf{tick }\,}$

allows programmers to define the resource usage of a program: If

![]() $(\mathsf{tick}~q)$

is evaluated, then the resource cost is

$(\mathsf{tick}~q)$

is evaluated, then the resource cost is

![]() $q \in \mathbb{Q}$

. If q is negative, then resources become available. Similarly, the expression

$q \in \mathbb{Q}$

. If q is negative, then resources become available. Similarly, the expression

![]() $\mathop{\,\mathsf{share }\,} x \mathop{\,\mathsf{ as }\,} x_1,x_2 \mathop{\,\mathsf{ in }\,} e$

has no effect on the result of a program: it removes the variable x from the scope and introduces two copies

$\mathop{\,\mathsf{share }\,} x \mathop{\,\mathsf{ as }\,} x_1,x_2 \mathop{\,\mathsf{ in }\,} e$

has no effect on the result of a program: it removes the variable x from the scope and introduces two copies

![]() $x_1$

and

$x_1$

and

![]() $x_2$

into the scope of e. It is a contraction rule as typically found in linear type systems that provides a controlled mechanism of reference duplication. Variables may be reused indefinitely in AARA, but the duplication of a reference might affect the resource usage of a program; thus,

$x_2$

into the scope of e. It is a contraction rule as typically found in linear type systems that provides a controlled mechanism of reference duplication. Variables may be reused indefinitely in AARA, but the duplication of a reference might affect the resource usage of a program; thus,

![]() $\mathop{\,\mathsf{share }\,}$

provides an explicit handle for this.

$\mathop{\,\mathsf{share }\,}$

provides an explicit handle for this.

Figure 1. A simple functional language.

Given a function from a functional program, we want to infer an upper bound on its resource usage as a function of its input. We encode this bound through annotations within the type: base types are unannotated, an recursive data type receives one annotation for each kind of its constructors, and a function type receives two annotations:

The intuitive meaning of a function type

![]() ${A}\buildrel {q/q'} \over \longrightarrow{B}$

is then derived as follows. Given q resources and the resources that are assigned to a function argument v by the annotated type A (see below), the evaluation of the function with argument v does not run out of resources. Moreover, after the evaluation there are q′ resources left in addition to the resources that are assigned to the result of the evaluation by the type B.

${A}\buildrel {q/q'} \over \longrightarrow{B}$

is then derived as follows. Given q resources and the resources that are assigned to a function argument v by the annotated type A (see below), the evaluation of the function with argument v does not run out of resources. Moreover, after the evaluation there are q′ resources left in addition to the resources that are assigned to the result of the evaluation by the type B.

However, let us first encode the above idea into type rules of the form

![]() where

where

![]() $\Gamma$

is a type context mapping variable names to types, e is a term expression of the simple programming language shown in Figure 1, with A an annotated type as described above and

$\Gamma$

is a type context mapping variable names to types, e is a term expression of the simple programming language shown in Figure 1, with A an annotated type as described above and

![]() $q,q' \in {\mathbb{Q}_{\geq 0}}$

. We write

$q,q' \in {\mathbb{Q}_{\geq 0}}$

. We write

![]() $\Gamma_1,\Gamma_2$

for the disjoint union of two type contexts, as usual.

$\Gamma_1,\Gamma_2$

for the disjoint union of two type contexts, as usual.

For simplicity, we will only consider (possibly nested) lists here, but Jost et al. (Reference Jost, Loidl, Hammond, Scaife and Hofmann2009a) show that dealing with arbitrary user-defined recursive data types is straightforward: Each type is annotated with one number for each of its different constructors. This schema would actually entail two annotations for lists, one for the Cons-constructor and one annotation for the Nil-constructor. However, most works omitted the annotation for the Nil-constructor and treated it as constant zero, since in the special case of lists the annotation for the Nil-constructor is entirely redundant. Omitting it thus streamlines the presentation in a paper, but it might be easier to include it in actual implementation to avoid a special case for list types.

Following the previous intuitive description, the rules for variables and constants are straightforward: the amount q of received resources must be greater than the worst-case execution cost c for the respective instruction and the amount q′ of unused resources to be returned:

For bounding heap-space usage, we would choose

![]() $c_{\text{Var}} = 0$

and

$c_{\text{Var}} = 0$

and

![]() $c_{\text{Unit}} = 0$

. These cost constants are simply inserted anywhere where costs might be incurred during execution.

$c_{\text{Unit}} = 0$

. These cost constants are simply inserted anywhere where costs might be incurred during execution.

We formulate leave rules like L:Var and L:Unit in a linear style, that is, with contexts that only mention that variables that appear in the terms. We use the following structural weakening rule to obtain more general affine typings. In an implementation, we can simply integrate weakening with the leave rules and use, for instance, an arbitrary context

![]() $\Gamma$

in the rule L:Unit.

$\Gamma$

in the rule L:Unit.

The following type rule for local definitions shows how resources are threaded into the subexpressions:

For bounding stack-space usage, one might choose

![]() $c_{\text{Let1}} = 1$

,

$c_{\text{Let1}} = 1$

,

![]() $c_{\text{Let2}} = 0$

and

$c_{\text{Let2}} = 0$

and

![]() $c_{\text{Let3}} = -1$

. For worst-case execution time (WCET), all costs constants are non-negative, depending on the actual machine. Heap-space usage is the most simple, with all cost constants in the let rule and most other cost constants being zero. Thus, from now on in this section, we will only consider heap-space usage for brevity and discuss the adaption to other resource later in Section 3.3. Hence, we simply rewrite the type rule for local definitions by:

$c_{\text{Let3}} = -1$

. For worst-case execution time (WCET), all costs constants are non-negative, depending on the actual machine. Heap-space usage is the most simple, with all cost constants in the let rule and most other cost constants being zero. Thus, from now on in this section, we will only consider heap-space usage for brevity and discuss the adaption to other resource later in Section 3.3. Hence, we simply rewrite the type rule for local definitions by:

Note that these type rules assume the decorated q to be numeric constants, but for the inference, the type derivation is constructed assuming fresh names for each of those numeric variables. It is only the validity of the type derivation that depends on the actual numbers, but otherwise no decisions for constructing the type derivation depend on the actual values. So a standard Hindley–Milner–Damas type derivation is performed and all numeric side conditions are simply gathered. Any solution to these numeric constraints then yields a valid type derivation and thus a different bound on resource usage. The solutions are usually obtained by a linear program solver, since the constraints happen to be of the appropriate form.

There are various insignificant choices for the presentation of these rules, for example, unifying some of the numerical variables and making the constraints implicit, as done in many papers to simply shorten the presentation:

Note that these variant type rules now enforce equality between numeric variables instead of certain inequalities. This would deliver exact resource bounds for all possible executions instead of worst-case bounds, which would likely lead to infeasible numeric constraints. Thus, an additional structural type rule L:Relax is then required:

Relying solely on L:Relax for relaxation of numeric constraints has the important benefit of eliminating many boring repetitions from the complicated soundness proof of the annotated type system. Otherwise, resources might be abandoned in many places, as could be seen in L:Let, which would require us to prove three times that it is okay to reduce the resources at hand. For the sake of clarity within this presentation, we will keep to the former style of using explicit numeric constraints within this section.

Likewise, for obtaining concise proofs without too much redundancy, most papers in this field require the program to be automatically converted into a let-normal form (LNF) where sequential composition is only available in let bindings. Other syntactic forms restrict subexpressions to be a variable whenever possible without altering the expressivity of the language. This is similar to but more restrictive than A-normal from Sabry and Felleisen (Reference Sabry and Felleisen1993) where also values may appear in variable positions.

Otherwise, the lengthy proof for case L:Let must be repeated in all other cases of the proof that require proper subexpressions. Since LNF also eases understanding, we follow this conventions here as well. In a practical implementation of AARA, it is easy to drop the requirement for LNF by incorporating the treatment of sequential computations of the let rule in other rules. Alternatively, one can compile unrestricted programs to LNF before the analysis. However, converting a program to LNF might alter its resource usage and additional syntactic forms such as a cost-free let expression must be introduced to ensure that the analysis correctly captures the cost of the source program (Jost et al. Reference Jost, Loidl, Hammond, Scaife and Hofmann2009a).

Cost constants

![]() $c_{\text{Left}_A}$

and

$c_{\text{Left}_A}$

and

![]() $c_{\text{Right}_B}$

must be set to the appropriate size of each value;

$c_{\text{Right}_B}$

must be set to the appropriate size of each value;

![]() $c_{\text{CaseLeft}}$

and

$c_{\text{CaseLeft}}$

and

![]() $c_{\text{CaseRight}}$

may be zero or set to the respective negative values if the match also deallocates the value from memory, which some papers provided as a variant.

$c_{\text{CaseRight}}$

may be zero or set to the respective negative values if the match also deallocates the value from memory, which some papers provided as a variant.

However, in rule L:Left we also see that constructing the value not only requires

![]() $c_{\text{Left}}$

many resources, but also that p-many free resources are set aside and are no longer available to be used immediately. These p resources become only available again for use upon deconstruction of the value, as can be seen in rule L:MatchSum. In this way, the cost bound of a program is given by its input type: For example, input type

$c_{\text{Left}}$

many resources, but also that p-many free resources are set aside and are no longer available to be used immediately. These p resources become only available again for use upon deconstruction of the value, as can be seen in rule L:MatchSum. In this way, the cost bound of a program is given by its input type: For example, input type

![]() ${{A^p} + {B^r}}$

encodes that the program requires p resources if given a value constructed with

${{A^p} + {B^r}}$

encodes that the program requires p resources if given a value constructed with

![]() $\mathop{\,\mathsf{left}\,}$

and r resources for receiving

$\mathop{\,\mathsf{left}\,}$

and r resources for receiving

![]() $\mathop{\,\mathsf{right}\,}$

as its input. Since types are typically nested, more elaborate cost bounds may be formed.

$\mathop{\,\mathsf{right}\,}$

as its input. Since types are typically nested, more elaborate cost bounds may be formed.

It is important to note that the association of potential different data constructors happens only at the type level. Since it was numerously presumed otherwise, let us be clear: Annotated types are never present at runtime! So when we discuss, for instance, that potential becomes available during a case analysis on a sum type, we merely provide an intuition for understanding the type rules.

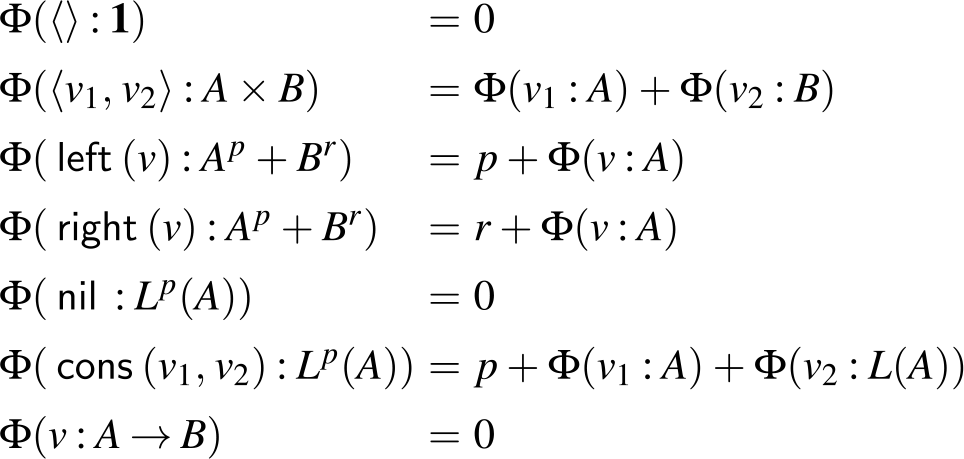

The sum of free resources associated with a concrete value through its annotated type is referred to as the potential of this type and value. When we refer to the potential of a type, we mean the function associated with the type that maps a runtime value of this type to the amount of free resources associated with it. The potential function

![]() $\Phi(\cdot : A) : \unicode{x27E6} A \unicode{x27E7} \to {\mathbb{Q}_{\geq 0}}$

is defined in Figure 2. The potential function is extended pointwise to environments and contexts by

$\Phi(\cdot : A) : \unicode{x27E6} A \unicode{x27E7} \to {\mathbb{Q}_{\geq 0}}$

is defined in Figure 2. The potential function is extended pointwise to environments and contexts by

![]() $\Phi(V:\Gamma) = \sum_{x \in V} \Phi\bigl(V(x):\Gamma(x)\bigr)$

. Note that the pair constructor does not have a potential annotation. It could receive an annotation for conformity, but it does not increase the possible resource bounds to be found, since we know already statically that each runtime value of a pair type contains precisely one pair constructor. In contrast, the sum type

$\Phi(V:\Gamma) = \sum_{x \in V} \Phi\bigl(V(x):\Gamma(x)\bigr)$

. Note that the pair constructor does not have a potential annotation. It could receive an annotation for conformity, but it does not increase the possible resource bounds to be found, since we know already statically that each runtime value of a pair type contains precisely one pair constructor. In contrast, the sum type

![]() ${{A} + {B}}$

requires two resource annotations, one for the Left-constructor and one annotation for the Right-constructor. The important difference being that given a runtime value of this type, we do not know in advance which one of the two constructors will be contained.

${{A} + {B}}$

requires two resource annotations, one for the Left-constructor and one annotation for the Right-constructor. The important difference being that given a runtime value of this type, we do not know in advance which one of the two constructors will be contained.

Figure 2. Potential associated with value v of annotated type A. Note that value v exists at runtime only, while the annotated type A exists at compile time only.

The figure also shows that the potential of a list having length n and type

![]() $L^{P}(A)$

is

$L^{P}(A)$

is

![]() $n \cdot p$

, plus the potential contained in elements of the list. The Nil-constructor does not have a potential annotation because a list data structure contains precisely one Nil-constructor. Like for pairs, this allows its potential to be equivalently shifted outwards to the enclosing type or the top-level, if there is no enclosing type around the list.

$n \cdot p$

, plus the potential contained in elements of the list. The Nil-constructor does not have a potential annotation because a list data structure contains precisely one Nil-constructor. Like for pairs, this allows its potential to be equivalently shifted outwards to the enclosing type or the top-level, if there is no enclosing type around the list.

The according typing rules are

A consequence of associating free resources with values through annotated types is that referencing values more than once could lead to an unsound duplication of potential. This is prevented by an explicit contraction rule, which governs how associated resources are distributed between aliased references, thus becoming an affine type system according to Walker (Reference Walker2002). Aliasing is thus not all problematic for the formulation of AARA, since potential is based per-reference anyway.

The contraction rule L:Share relies on the sharing relation

linearly distributing potential between two annotated types, that is, for all values v the inequality

![]() $\Phi(v:A) \geq \Phi(v:B) + \Phi(v:C)$

holds whenever

$\Phi(v:A) \geq \Phi(v:B) + \Phi(v:C)$

holds whenever

is true. Note that variables that have types without potential may be freely duplicated using the explicit form for sharing.

The two remaining typing rules for function application and abstraction simply track the constant part of the resource cost for evaluating a functions body, the input dependent part being automatically tracked through the annotated argument and result types.

The restriction of pointwise sharing of all types within the context to themselves by

is a simple way to prevent closures from capturing potential, which we will comment upon this in Section 4 and discuss further alternatives there. In a nutshell, this is necessary since we allow unrestricted sharing of functions. If a closure would capture potential, then two uses of that closure would lead to a duplication of the captured potential. Technically, the judgment

forces all resource annotations in the context

![]() $\Gamma$

to be zero.

$\Gamma$

to be zero.

3.2 Program example: Append

Consider as an example the following definition of a functionFootnote 2 that concatenates two lists:

Let us first consider the expected result intuitively: It is fairly easy to see that function

![]() ${append}$

constructs precisely one new

${append}$

constructs precisely one new

![]() ${{\mathop{\,\mathsf{cons}\,}}(,)}$

node for each

${{\mathop{\,\mathsf{cons}\,}}(,)}$

node for each

![]() ${{\mathop{\,\mathsf{cons}\,}}(,)}$

node contained within its first argument, so a simple heap-space execution cost might expected to be to size of a

${{\mathop{\,\mathsf{cons}\,}}(,)}$

node contained within its first argument, so a simple heap-space execution cost might expected to be to size of a

![]() ${{\mathop{\,\mathsf{cons}\,}}(,)}$

node times the length of the first argument. We will now show how the analysis concludes this as well.

${{\mathop{\,\mathsf{cons}\,}}(,)}$

node times the length of the first argument. We will now show how the analysis concludes this as well.

Annotating the type with fresh resource variable yields

![]() ${{{L^{P}(A)} \times {L^{r}(A)}}}\buildrel {q/q'} \over \longrightarrow{L^{s}(A)}$

. The type rules of AARA then construct the cost constraints by starting with q resources, as detailed by the annotation of the function arrow. The resource-annotated type together with the set of constraints can be seen as the most general type. Another point of view would be to consider the constraints to be a description of a set of possible concrete numeric potential annotations.

${{{L^{P}(A)} \times {L^{r}(A)}}}\buildrel {q/q'} \over \longrightarrow{L^{s}(A)}$

. The type rules of AARA then construct the cost constraints by starting with q resources, as detailed by the annotation of the function arrow. The resource-annotated type together with the set of constraints can be seen as the most general type. Another point of view would be to consider the constraints to be a description of a set of possible concrete numeric potential annotations.

Since the outermost term is a case distinction, type rule L:MatchList applies. The Nil-branch being a simple variable, we obtain, in the terms of type rule L:MatchList, the constraint

![]() $q = q' + c_{Nil} + c_{CaseNil}$

from rule L:Var. However, we also note that the types

$q = q' + c_{Nil} + c_{CaseNil}$

from rule L:Var. However, we also note that the types

![]() $L^{r}(A)$

and

$L^{r}(A)$

and

![]() $L^{s}(A)$

must be identical, so we note the constraint

$L^{s}(A)$

must be identical, so we note the constraint

![]() $r=s$

, as well, for which most works use an explicit subtyping mechanism omitted here.

$r=s$

, as well, for which most works use an explicit subtyping mechanism omitted here.

For the Cons-branch, the constraint

![]() $q + p \geq q_2 + c_{CaseCons}$

shows that from the initial amount of resources q, we must pay the cost for the case distinction and gain the potential associated with one node of the first input list p. Note that the overall potential remains unchanged, since the reference x is no longer available within the typing context; instead, we have the new references h and t. The rule L:MatchList mandates the typings

$q + p \geq q_2 + c_{CaseCons}$

shows that from the initial amount of resources q, we must pay the cost for the case distinction and gain the potential associated with one node of the first input list p. Note that the overall potential remains unchanged, since the reference x is no longer available within the typing context; instead, we have the new references h and t. The rule L:MatchList mandates the typings

![]() $h:A$

and

$h:A$

and

![]() $t:L^{P}(A)$

. In this way, it is ensured the potential assigned by p to each element in x and that potential carried by the elements is not duplicated nor lost.Footnote 3

$t:L^{P}(A)$

. In this way, it is ensured the potential assigned by p to each element in x and that potential carried by the elements is not duplicated nor lost.Footnote 3

The subsequent application of L:Let threads these resources to the function application and we obtain the constraints

![]() $q_2 \geq q + c_{\text{App}}$

and

$q_2 \geq q + c_{\text{App}}$

and

![]() $q_2 - q_3 \geq q - q' + c_{\text{App}}$

with

$q_2 - q_3 \geq q - q' + c_{\text{App}}$

with

![]() $q_3$

being the amount of resources being supplied to the body of the let expression. The body of the let expression is typed by L:Cons and we obtain

$q_3$

being the amount of resources being supplied to the body of the let expression. The body of the let expression is typed by L:Cons and we obtain

![]() $q_3 \geq s + q' + c_{\text{Cons}}$

. Gathering and simplifying these constraints, we obtain:

$q_3 \geq s + q' + c_{\text{Cons}}$

. Gathering and simplifying these constraints, we obtain:

For heap-space usage, it is reasonable to set all occurring cost constants to zero, except for

![]() $c_{Cons_A}$

which denotes the cost for allocating a list node for a list of type A. Simplifying the constraints further, we thus inferred the following type for any

$c_{Cons_A}$

which denotes the cost for allocating a list node for a list of type A. Simplifying the constraints further, we thus inferred the following type for any

![]() $p, q \in {\mathbb{Q}_{\geq 0}}$

:

$p, q \in {\mathbb{Q}_{\geq 0}}$

:

which expresses that the heap-space cost for applying append is precisely

![]() $n \cdot c_{Cons_A}$

, where n is the length of the first argument list, thus providing a tight upper bound.

$n \cdot c_{Cons_A}$

, where n is the length of the first argument list, thus providing a tight upper bound.

Nested Recursive Data Types and Linear Resource Bounds

Note that the presented method for linear resource bounds already entails the inference of bounds for functions dealing with arbitrarily nested data structures.

For example, given a function that takes a list of lists of type unit as its input, the first paper (Hofmann and Jost Reference Hofmann and Jost2003) could infer a bound of the form

![]() $an + b\sum_{i=1}^{n}m_i$

with

$an + b\sum_{i=1}^{n}m_i$

with

![]() $a, b \in \mathbb{Q}^{+}$

and

$a, b \in \mathbb{Q}^{+}$

and

![]() $n, m_i \in \mathbb{N}$

, where n is the length of the outer list and

$n, m_i \in \mathbb{N}$

, where n is the length of the outer list and

![]() $m_i$

is the length of each inner list for

$m_i$

is the length of each inner list for

![]() $i\in{1,\dots,n}$

. Such an upper bound would be expressed by the annotated input type

$i\in{1,\dots,n}$

. Such an upper bound would be expressed by the annotated input type

![]() $L^{a}({L^{b}({unit})})$

.

$L^{a}({L^{b}({unit})})$

.

For

![]() $m = max(m_i)$

we then obtain the upper bound

$m = max(m_i)$

we then obtain the upper bound

![]() $n \cdot m$

, which might be considered to be a quadratic bound. Likewise, in this way a list of lists of lists would lead to a cubic bound.

$n \cdot m$

, which might be considered to be a quadratic bound. Likewise, in this way a list of lists of lists would lead to a cubic bound.

However, since the early versions of AARA could not yet infer general bounds involving expressions such as

![]() $n \cdot m_i$

, we consider these versions to deliver linear resource bounds only. See Section 5 for the proper treatment of truly super- and sub-linear bounds.

$n \cdot m_i$

, we consider these versions to deliver linear resource bounds only. See Section 5 for the proper treatment of truly super- and sub-linear bounds.

3.3 Accounting for different resources

Most of the research papers considered in this survey focused on heap-space usage. However, Jost et al. (Reference Jost, Loidl, Hammond, Scaife and Hofmann2009a) showed that it is straightforward to eschew any resource whose cost can be statically bound to each individual instruction by choosing the appropriate cost constants. Note that soundness can be proven independently of these cost constants by proving soundness with respect to a cost-instrumented operational semantics using the same symbolic cost constants. Thereby, the same proof holds for arbitrary resource cost models and one must just verify the cost-instrumented operational semantics match reality.

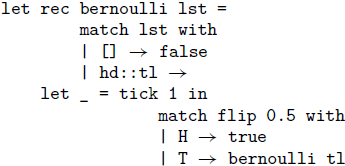

In addition to the flexibility provided by the cost constants, it is easy to also include a general cost-counting statement

![]() ${tick}~q$

for explicitly stating costs within the code:

${tick}~q$

for explicitly stating costs within the code:

The tick-statement evaluates to the unit value and in functional code is mostly used by constructs like

![]() $\mathop{\,\mathsf{let }\,} _ = \mathop{\,\mathsf{tick}\,} q \mathop{\,\mathsf{ in }\,} e$

or so.

$\mathop{\,\mathsf{let }\,} _ = \mathop{\,\mathsf{tick}\,} q \mathop{\,\mathsf{ in }\,} e$

or so.

Considering stack-space usage is straightforward in principle, but the linear bounds of the early research were a practical hindrance. This was addressed by Campbell (Reference Campbell2009) in 2009. However, significant further progress should be expected if the insights of the decade of AARA research that followed after 2009 would be applied to the problems shown by Campbell.

Determining the WCET in actual processor clock cycles is also possible, as shown in detail in Jost et al. (Reference Jost, Loidl, Hammond, Scaife and Hofmann2009a); Jost et al. (Reference Jost, Loidl, Scaife, Hammond, Michaelson and Hofmann2009b). However, this requires one to obtain the WCET for each basic block that might be produced by the binary compiler, since AARA excels at the high level where nested data structures and recursion are abundant. Thus, in the aforementioned works on WCET, the bounds on the basic blocks were established by abstract interpretations methods working on the low-level code. For a simple processor like the Renesas M32C/85, this combined analysis delivered a WCET bound less than 34% above the worst measured runtime in the considered scenarios.

Another application area of AARA gas analysis is smart contracts (Das et al. Reference Das, Balzer, Hoffmann, Pfenning and Santurkar2021; Das and Qadeer Reference Das, Qadeer and Sighireanu2020). Gas is a high-level resource that used to model the execution cost of a smart contract. Gas is important to prevent denial-of-service attacks on blockchains. Commonly, users of smart contracts are required to pay for the gas consumption of a transaction in advance without knowing the memory state of the contract. As a result, statically predicting the gas cost is desirable. Technically, gas can treated like execution time in AARA.

Another resource of interest is energy. We believe that an analysis of the energy consumption of a program with AARA is possible but we are not aware of any works in this space. A challenge could be the need to not only model the energy cost of the processor but also of other devices, such as radios or sensors, that are used by a program.

3.4 Soundness

The main result of many AARA papers is the proof of soundness for the annotated type system, which formally guarantees that the resource bounds are indeed an upper bound on resource usage with respect to a formal cost-instrumented operational semantics.

Usually a big-step semantics is used, as it fits the type rules best. Since big-step semantics only deal with terminating computations, an additional big-step semantics for partial evaluation can be provided. This also allows to prove that even the resource usage of failing or non-terminating programs respects the inferred resource bounds (Hoffmann and Hofmann Reference Hoffmann and Hofmann2010a; Jost 2012). However, the semantics for partial evaluations are usually just a straightforward variant of the ordinary big-step semantics, so we do not repeat this common simple solution to the problem of big-step semantics in this survey paper.

There are several ways to define the cost-instrumented operational semantics. Here, we write

to denote that expression e evaluates in environment V to value v, with p being the minimal amount of resources needed for the evaluation, and p′ the amount of unused resources being available after the evaluation.

The soundness proof usually proceeds by induction on the lexicographically ordered lengths of the formal evaluation and the typing derivation. The proof is primarily divided by a case distinction on the syntactic construct at the current end of the type derivation, but due to structural type rules and the lengthening of the typing derivation in case of function calls or thunks, the lexicographic ordering with a formal evaluation is usually required. A typical formulation of the soundness proof has the following structure:

Type preservation

![]() $v : A$

must sometimes be proven simultaneously within the main soundness theorem as included above, since resource usage becomes an intrinsic property of functions and thunks. Type systems without higher-order types nor laziness typically allow preservation to be proven independently.

$v : A$

must sometimes be proven simultaneously within the main soundness theorem as included above, since resource usage becomes an intrinsic property of functions and thunks. Type systems without higher-order types nor laziness typically allow preservation to be proven independently.

Note that some AARA presentations separated the evaluation environment

![]() $V = (S,H)$

into stack S and heap H (Hofmann and Jost Reference Hofmann and Jost2003; Jost et al. Reference Jost, Loidl, Hammond, Scaife and Hofmann2009a). This facilitates motivating the cost annotation for stack and heap space usage but is not mathematically relevant since the assigned cost and computed values are identical.

$V = (S,H)$

into stack S and heap H (Hofmann and Jost Reference Hofmann and Jost2003; Jost et al. Reference Jost, Loidl, Hammond, Scaife and Hofmann2009a). This facilitates motivating the cost annotation for stack and heap space usage but is not mathematically relevant since the assigned cost and computed values are identical.

Alternative Cost-Instrumented Operational Semantics

The above depicted cost-instrumented big-step semantics deliver the minimal resources required for evaluation; this allows for a concise theorem statement at the expense of requiring more care within the proof itself.

Alternatively, the big-step operational semantics could be instrumented with a simple counter that just increases and decreases with each rule application, according to the cost constants. We write

to denote that expression e evaluates in environment V to value v, with m resources being initially available and and m′ resources being unused after the evaluation. The derivation in this semantics simply fails whenever the amount of available resource would become negative. So if m is chosen too small, then no derivation is possible within these cost-instrumented semantics.

Usually, one can prove that for all

![]() $n \geq m$

that there exists an n′ with

$n \geq m$

that there exists an n′ with

![]() $n' \geq m' + (n - m)$

such that

$n' \geq m' + (n - m)$

such that

![]() is possible, that is, excess resources are harmless and preserved.

is possible, that is, excess resources are harmless and preserved.

This latter version of cost-instrumented semantics was especially used in earlier AARA papers, since it simplifies the proof at the cost of a more complicated statement of the soundness theorem, which then becomes:

Note the inverted inequalities involving the resource bounds.

The value r allows threading of unused excess resources to subsequent subexpressions; programs in LNF then require this for local

![]() $\mathop{\,\mathsf{let}\,}$

-definitions only. Note that an explicit r can be avoided by referring to differences, similarly to the type rule L:Relax depicted above.

$\mathop{\,\mathsf{let}\,}$

-definitions only. Note that an explicit r can be avoided by referring to differences, similarly to the type rule L:Relax depicted above.

The theorem sketched above intuitively says that an evaluation with restricted resources cannot fail due to a lack of resources if the amount of available resources exceeds the upper bound indicated by the annotated types.

3.5 Manual amortized analysis

The similarity of the program analysis outlined above and the manual amortized analysis technique by Tarjan (Reference Tarjan1985) was only later pointed out to us by our colleague Olha Shkaravska around 2004.

Tarjan’s amortized analysis basically allows any kind of mathematical potential function that abstracts any program configuration to simple number. However, the onus is on the user to find a suitable abstraction so that the proof of the desired program property succeeds.

In contrast, the presented analysis restricts this vast search space to a certain set of functions encoded through the type only in a canonical way. This reduced inference to solving a set of linear inequalities, which by chance had the property to be easily solvable. The research that followed the initial work then pushed hard to enlarge the search space again, while retaining a feasible inference.

An important advantage of AARA over Tarjan’s original amortized analysis is the idea to assign potential per reference to a data structure. While the manual amortized analysis generally breaks in the presence of persistent data structures (Okasaki Reference Okasaki1998), the potential per typed reference is the key to the success of AARA in a functional setting.

4. Higher-Order and Other Extensions

Adapting AARA to a higher-order functional language required several steps, most of which are not directly related to higher-order types. Instead, these features are already beneficial in a first-order setting, albeit arguably less urgently so. We discuss these steps in no particular order.

Resource Parametricity

Consider the heap-space usage of a function

![]() ${zip} : {{{L^{P}(A)} \times {L^{q}(B)}}}\buildrel {0/0} \over \longrightarrow{L^{r}({{A} \times {B}})}$

that zips a pair of lists into a list of pairs. Assume the cost

${zip} : {{{L^{P}(A)} \times {L^{q}(B)}}}\buildrel {0/0} \over \longrightarrow{L^{r}({{A} \times {B}})}$

that zips a pair of lists into a list of pairs. Assume the cost

![]() $c_{\text{pair}}=2$

for constructing a list node of the output, including a pair constructor. It is then easy to see that the cost constraints are

$c_{\text{pair}}=2$

for constructing a list node of the output, including a pair constructor. It is then easy to see that the cost constraints are

![]() $p + q \geq r + c_{\text{pair}}$

, and indeed the AARA will infer a more elaborate LP which could then be simplified to this equation.

$p + q \geq r + c_{\text{pair}}$

, and indeed the AARA will infer a more elaborate LP which could then be simplified to this equation.

The problem then arises by our insistence to have a single solution to a single LP, which prevents simultaneous calls with the otherwise valid types

![]() ${zip} : {{{L^{3}(A)} \times {L^{0}(B)}}}\buildrel {0/0} \over \longrightarrow{L^{1}({{{A} \times {B}}})}$

and

${zip} : {{{L^{3}(A)} \times {L^{0}(B)}}}\buildrel {0/0} \over \longrightarrow{L^{1}({{{A} \times {B}}})}$

and

![]() ${zip} : {{{L^{0}(A)} \times {L^{3}(B)}}}\buildrel {0/0} \over \longrightarrow{L^{1}({{{A} \times {B}}})}$

. The solution presented in Jost et al. (Reference Jost, Hammond, Loidl and Hofmann2010), Jost (2012) is an annotated function type that also stores a set of constraints, as well as the set of bound constraint variables. Note that free constraint variables must allowed to be retained as well. Upon each function application, this set of constraints is simply copied into the actual constraints for the programs, with the bound constraint variables being renamed.

${zip} : {{{L^{0}(A)} \times {L^{3}(B)}}}\buildrel {0/0} \over \longrightarrow{L^{1}({{{A} \times {B}}})}$

. The solution presented in Jost et al. (Reference Jost, Hammond, Loidl and Hofmann2010), Jost (2012) is an annotated function type that also stores a set of constraints, as well as the set of bound constraint variables. Note that free constraint variables must allowed to be retained as well. Upon each function application, this set of constraints is simply copied into the actual constraints for the programs, with the bound constraint variables being renamed.

Note that this makes the size of the LP exponential in the depth of the call graph, which turned out to be mostly unproblematic in practice.

Mutual Recursion

Mutual recursion can in principle be dealt with easily, since the annotated type of a function featuring numeric variables instead of numeric constant values is created before the examination of the function body. Each application simply refers to these previously determined annotated types, recursive or otherwise. This requires the annotations to become first-class elements to be manipulated during the inference, as well as explicitly passing sets of constraints at the time of function application.

Polymorphism

Dealing with polymorphic functions requires to defer a part of inference: a function’s constraint set then contains symbolic constraints that are instantiated upon function application, given the appropriate types. These symbolic constraints simply express the number of times an argument must be shared to the various references occurring within a function’s body.

Currying and Closures with Potential

Type rule L:Fun in Section 3 contains the premise

![]() that effectively disallows potential to be captured in closures. Any potential captured within a function closure would be available for each application, leading to an unsound duplication of potential. Restricting potential to zero is one easy way out of this problem, another would be use-once (or use-n-times) function types. Both of these strategies are not in opposition but can be combined in one type system, as demonstrated by Rajani et al. (Reference Rajani, Gaboardi, Garg and Hoffmann2021).

that effectively disallows potential to be captured in closures. Any potential captured within a function closure would be available for each application, leading to an unsound duplication of potential. Restricting potential to zero is one easy way out of this problem, another would be use-once (or use-n-times) function types. Both of these strategies are not in opposition but can be combined in one type system, as demonstrated by Rajani et al. (Reference Rajani, Gaboardi, Garg and Hoffmann2021).

Note that restricting potential of closures for thunks to zero is not an acceptable solution, but thunks being use-once functions resolve this problem automatically, albeit making soundness much more harder to prove, see Section 6.

An important consequence of restricting closure potential to zero is that only the last function argument is allowed to yield any potential, which in turn gives AARA a strong preference for fully uncurried functions, which do not suffer from this limitation at all.

Beyond Worst-Case Bounds

It is also possible to use AARA to derive lower bounds on the best case resource usage and to precisely characterize the resource usage of programs (Ngo et al. Reference Ngo, Dehesa-Azuara, Fredrikson and Hoffmann2017). For a precise characterization, we simply remove the weakening rules and require that all potential needs to be consumed in every branch. Such precise characterizations are interesting in the context of software security, where we would like to show the absence of side channels based on resource usage.

For lower bounds, we also remove weakening but allow to create potential. However, we require that all potential needs to be used to pay for cost at some point. Intuitively, the goal is to spend as much potential as possible to justify a tighter lower bound.

The original AARA corresponds to an affine treatment, the precise analysis to a linear treatment, and the lower-bound analysis to a relevant treatment of potential.

5. Non-Linear Potential Functions

AARA, as introduced by Hofmann and Jost (Reference Hofmann and Jost2003), was well received by the research community. However, the correlation of the technique with linear potential functions, and thus linear bounds, has often been cited as its main limitation.Footnote 4 Indeed, the association of a constant potential with each element of a data structure seemed to be the focal point that enabled local and intuitive typing rules as well as the type and bound inference via off-the-shelf LP solvers. A naive extension to non-linear potential functions would require non-linear constraint solving an additional expressivity in the type system as offered by dependent types (Martin-Löf Reference Martin-Löf1984) or refinement types (Freeman and Pfenning Reference Freeman, Pfenning and Wise1991). An AARA in such a setting would not share many of the characteristics of the original linear version and it is not clear if the potential method would provide advantages (Radiček et al. 2017).

Maybe unexpectedly, even to the surprise of Hofmann and Jost, it could be demonstrated that AARA can be extended to non-linear potential functions while preserving all benefits of the technique, including local typing rules and reducing bound inference to linear constraint solving. The first such extension uses univariate polynomial potential functions and has been developed by Hoffmann and Hofmann in 2009 (Hoffmann and Hofmann Reference Hoffmann and Hofmann2010b). Subsequently, extensions to multivariate polynomial potential functions (Hoffmann et al. Reference Hoffmann, Aehlig and Hofmann2011) and exponential potential functions (Kahn and Hoffmann Reference Kahn and Hoffmann2020) have been developed. Recently, an AARA with logarithmic potential has been proposed (Hofmann et al. Reference Hofmann, Leutgeb, Obwaller, Moser and Zuleger2021; Hofmann and Moser Reference Hofmann and Moser2018).

The extensions to polynomial potential and exponential potential are conservative over the original linear system. However, while potential in linear AARA can be parametric in the values of general recursive types (Jost et al. Reference Jost, Loidl, Hammond, Scaife and Hofmann2009a; Jost et al. Reference Jost, Hammond, Loidl and Hofmann2010), polynomial potential has been introduced for a particular form of inductive typesFootnote 5 only (Hoffmann et al. Reference Hoffmann, Das and Weng2017) and exponential potential has only been developed for lists thus far. Polynomial potential can be a function of either the size or the height of data structures (Hoffmann Reference Hoffmann2011). In this section, we focus on lists for which the notions of height and size coincide.

5.1 Univariate polynomial potential

Univariate polynomial AARA (Hoffmann and Hofmann Reference Hoffmann and Hofmann2010b) is a conservative extension of linear AARA (Hofmann and Jost Reference Hofmann and Jost2003). The only type rules that differ between the systems are the ones for constructing and destructing data structures. In the following, we explain the idea for lists. It can be extended to other tree-like inductive types.

Resource Annotations

In linear AARA, list types are annotated with a single non-negative rational number q that defines the potential function

![]() $q\cdot n$

, where n is the length of the list. In univariate polynomial AARA, we use potential functions that are non-negative linear combinations of binomial coefficients

$q\cdot n$

, where n is the length of the list. In univariate polynomial AARA, we use potential functions that are non-negative linear combinations of binomial coefficients

![]() $\binom{n}{k}$

, where k is a natural number and n is the length of the list. Consequently, a resource annotation for lists is a vector

$\binom{n}{k}$

, where k is a natural number and n is the length of the list. Consequently, a resource annotation for lists is a vector

![]() $\vec q = (q_1,\ldots,q_k) \in ({\mathbb{Q}_{\geq 0}})^k$

of non-negative rational numbers and list types have the form

$\vec q = (q_1,\ldots,q_k) \in ({\mathbb{Q}_{\geq 0}})^k$

of non-negative rational numbers and list types have the form

![]() $L^{\vec q}(A)$

.

$L^{\vec q}(A)$

.

One intuition for the resource annotations is as follows: The annotation

![]() $\vec q$

assigns the potential

$\vec q$

assigns the potential

![]() $q_1$

to every element of the data structures, the potential

$q_1$

to every element of the data structures, the potential

![]() $q_2$

to every element of every proper suffix (sublist or subtree, respectively) of the data structure,

$q_2$

to every element of every proper suffix (sublist or subtree, respectively) of the data structure,

![]() $q_3$

to the elements of the suffixes of the suffixes, etc.

$q_3$

to the elements of the suffixes of the suffixes, etc.

The choice of binomial coefficients is beneficial compared to the standard basis (n,

![]() $n^2$

,

$n^2$

,

![]() $\ldots$

) if we ensure that potential functions are non-negative by only allowing non-negative potential annotations. With non-negative coefficients, functions like

$\ldots$

) if we ensure that potential functions are non-negative by only allowing non-negative potential annotations. With non-negative coefficients, functions like

![]() $\binom{n}{2}$

cannot be expressed in the standard basis. Binomial coefficients with non-negative coefficients are also a canonical choice since they are the largest class of inherently non-negative polynomials (Hoffmann et al. Reference Hoffmann, Aehlig and Hofmann2011).

$\binom{n}{2}$

cannot be expressed in the standard basis. Binomial coefficients with non-negative coefficients are also a canonical choice since they are the largest class of inherently non-negative polynomials (Hoffmann et al. Reference Hoffmann, Aehlig and Hofmann2011).

The Potential of Lists

Let us now consider the construction or destruction of non-empty lists. For linear potential annotations, we can simply assign potential to the tail using the same annotation as on the original list. This would however lead to a substantial loss of potential in the polynomial case. For this reason, we use an additive shift operation to assign potential to sublists. The additive shift of

![]() $\vec q = (q_1,\ldots,q_k)$

is

$\vec q = (q_1,\ldots,q_k)$

is

The definition of potential

![]() $\Phi(v:A)$

of a value v of type A is extended as follows:

$\Phi(v:A)$

of a value v of type A is extended as follows:

\[\begin{array}{lll} \Phi([\,] : L^{\vec q}(A) ) &=& 0 \\ \Phi({{v_1}\mathop{::}{v_2}} : L^{\vec q}(A) ) &=& \Phi(v_1 : A) + q_1 + \Phi(v_2 : L^{\lhd{\vec q}}(A)\end{array}\]

\[\begin{array}{lll} \Phi([\,] : L^{\vec q}(A) ) &=& 0 \\ \Phi({{v_1}\mathop{::}{v_2}} : L^{\vec q}(A) ) &=& \Phi(v_1 : A) + q_1 + \Phi(v_2 : L^{\lhd{\vec q}}(A)\end{array}\]

The potential of a list can be written as a non-negative linear combination of binomial coefficients. If we define

\[\phi(n,\vec q) = \sum_{i=1}^{k} \binom{n}{i} q_i\]

\[\phi(n,\vec q) = \sum_{i=1}^{k} \binom{n}{i} q_i\]

then we can prove the following lemma.

Lemma 1. Let

![]() $\ell = [v_1 \ldots, v_n]$

be a list whose elements are values of type A. Then

$\ell = [v_1 \ldots, v_n]$

be a list whose elements are values of type A. Then

The use of binomial coefficients instead of powers of variables is not theoretically appealing but has also several practical advantages. In particular, the identity

gives rise to a local typing rule for

![]() $\mathop{\,\mathsf{cons}\,}$

and pattern matching, which naturally allows the typing of both recursive calls and other calls to subordinate functions in branches of a pattern match.

$\mathop{\,\mathsf{cons}\,}$

and pattern matching, which naturally allows the typing of both recursive calls and other calls to subordinate functions in branches of a pattern match.

Typing Rules

Like for linear potential functions, the type rules define a judgment of the form

The rules of linear AARA remain unchanged except for the rules for the introduction and elimination of inductive types. Below are the rules for lists. To focus on the novel aspects, we assume that the cost constants are zero. They can be added like in the linear case.

The rule U:Nil requires no additional constant potential and an empty context. It is sound to attach any potential annotation

![]() $\vec p$

to the empty list since the resulting potential is always zero. The rule U:Cons reflects the fact that we have to cover the potential that is assigned to the new list of type

$\vec p$

to the empty list since the resulting potential is always zero. The rule U:Cons reflects the fact that we have to cover the potential that is assigned to the new list of type

![]() $L^{\vec p}({\vec A})$

. We do so by requiring

$L^{\vec p}({\vec A})$

. We do so by requiring

![]() $x_2$

to have the type

$x_2$

to have the type

![]() $L^{\lhd(\vec p)}(A)$

and to have

$L^{\lhd(\vec p)}(A)$

and to have

![]() $p_1$

resource units available. The rule U:MatL accounts for the fact that either

$p_1$

resource units available. The rule U:MatL accounts for the fact that either

![]() $e_0$

or

$e_0$

or

![]() $e_1$

is evaluated. The cons case is inverse to the rule U:Cons and uses the potential associated with a list:

$e_1$

is evaluated. The cons case is inverse to the rule U:Cons and uses the potential associated with a list:

![]() $p_1$

resource units become available as constant potential and the tail of the list is annotated with

$p_1$

resource units become available as constant potential and the tail of the list is annotated with

![]() $\lhd(\vec p)$

.

$\lhd(\vec p)$

.

Example: Alltails

Recall the function append from Section 3.2 and consider again the resource metric for heap space in which all costs are 0 except for

![]() $c_{Cons_A}$

, which is the cost of list cons.

$c_{Cons_A}$

, which is the cost of list cons.

With this metric, heap-space cost for evaluating append(x,y) is

![]() $|x| \cdot c_{Cons_A}$

. This exact bound is reflected by the following typing:

$|x| \cdot c_{Cons_A}$

. This exact bound is reflected by the following typing:

The typing is similar to the one that is discussed in Section 3.2. However, we are considering the special case in which resulting potential is zero as indicated by the annotations on the result type. Moreover, we have added quadratic potential annotations. For instance, the annotated type

![]() $L^{(c_{Cons_A},0)}(A)$

on the first argument x of append assigns the potential

$L^{(c_{Cons_A},0)}(A)$

on the first argument x of append assigns the potential

![]() $|x| \cdot c_{Cons_A} + \binom{|x|}{2} \cdot 0$

. To give the most general type for append with quadratic potential, we need multivariate annotations. More details are discussed in Section 5.2.

$|x| \cdot c_{Cons_A} + \binom{|x|}{2} \cdot 0$

. To give the most general type for append with quadratic potential, we need multivariate annotations. More details are discussed in Section 5.2.

For an example with non-trivial quadratic annotations, consider the following function alltails that creates a list that is a concatenation of all tails, that is, all proper suffixes, of the argument.

With the heap resource metric, the resource consumption of the alltails is

![]() $\binom{n}{2} \cdot c_{Cons_A}$

, where n is the length of the argument. In the univariate system, this exact bound can be expressed by the following typing:

$\binom{n}{2} \cdot c_{Cons_A}$

, where n is the length of the argument. In the univariate system, this exact bound can be expressed by the following typing:

The key aspect of the type derivation is the treatment of the cons case of the case analysis in the function body. The types of the variables are

![]() $h:A$

and

$h:A$

and

![]() $t:L^{(c_{Cons_A},c_{Cons_A})}(A)$

. The annotation

$t:L^{(c_{Cons_A},c_{Cons_A})}(A)$

. The annotation

![]() $(c_{Cons_A},c_{Cons_A})$

is the result of the additive shift

$(c_{Cons_A},c_{Cons_A})$

is the result of the additive shift

![]() $\lhd(0,c_{Cons_A})$

. The potential is then shared as

$\lhd(0,c_{Cons_A})$

. The potential is then shared as

![]() $t_1:L^{(0,c_{Cons_A})}(A)$

to match the argument type (and cover the cost) of the recursive call and

$t_1:L^{(0,c_{Cons_A})}(A)$

to match the argument type (and cover the cost) of the recursive call and

![]() $t_2:L^{(c_{Cons_A}),0}(A)$

to match the argument type of append.

$t_2:L^{(c_{Cons_A}),0}(A)$

to match the argument type of append.

Note that we assume

![]() , that is, the type A does not carry potential. This is needed because elements of the inner list are copied. In the multivariate system, we can type the function alltails with element types that carry potential.

, that is, the type A does not carry potential. This is needed because elements of the inner list are copied. In the multivariate system, we can type the function alltails with element types that carry potential.

Soundness

The soundness theorem has the same form as for linear AARA. Given the appropriate extensions of sharing and subtyping, the change in the proof is limited to the cases that involve the syntactic forms for lists.

Theorem. Let

![]() and

and

![]() $V : \Gamma$

. If

$V : \Gamma$

. If

![]() $V \vdash e {\Downarrow {v}} \mid (p,p')$

for some v and (p, p′) then

$V \vdash e {\Downarrow {v}} \mid (p,p')$

for some v and (p, p′) then

![]() $\Phi(V : \Gamma) + q \geq p$

and

$\Phi(V : \Gamma) + q \geq p$

and

![]() $\Phi(V : \Gamma) + q - (\Phi(v:A) + q') \geq p - p'$

.

$\Phi(V : \Gamma) + q - (\Phi(v:A) + q') \geq p - p'$

.

The proof is by induction on the evaluation judgment and an inner induction on the type judgment. The inner induction is needed because of the structural rules.

Type Inference and Resource-Polymorphic Recursion

The basis of the type inference for the univariate polynomial system is the type inference algorithm for the linear system, which reduces the inference of potential functions to LP. A further challenge for the inference of polynomial bounds is the need to deal with resource-polymorphic recursion, which is required to type many functions that are not tail recursive and have a superlinear cost.

It is a hard problem to infer general resource-polymorphic types, even for the original linear system. A pragmatic approach to resource-polymorphic recursion that works well and efficiently in practice is to apply so-called cost-free typings that only transfer potential from arguments to results. More details can be found in the literature (Hoffmann and Hofmann Reference Hoffmann and Hofmann2010a).

5.2 Multivariate polynomial potential

Linear AARA is compositional in the sense that the typeability of two functions f and g is often indicative of the typeability of the function composition

![]() $f \circ g$

. However, this is not the case to the same extent for univariate polynomial AARA. Consider for instance the function append :

$f \circ g$

. However, this is not the case to the same extent for univariate polynomial AARA. Consider for instance the function append :

![]() $L({\mathbf{1}}) \times L({\mathbf{1}}) \to L({\mathbf{1}})$

for integer lists and the sorting function quicksort :

$L({\mathbf{1}}) \times L({\mathbf{1}}) \to L({\mathbf{1}})$

for integer lists and the sorting function quicksort :

![]() $L({\mathbf{1}}) \to L({\mathbf{1}})$

. Using univariate AARA, we can derive a linear bound like

$L({\mathbf{1}}) \to L({\mathbf{1}})$

. Using univariate AARA, we can derive a linear bound like

![]() $n_1$

for append and a quadratic bound like

$n_1$

for append and a quadratic bound like

![]() $n^2$

for quicksort. Here, we assume that the lengths of the two arguments of append are

$n^2$

for quicksort. Here, we assume that the lengths of the two arguments of append are

![]() $n_1$

and

$n_1$

and

![]() $n_2$

, respectively, and that the length of the argument of quicksort is n. Now consider the function

$n_2$

, respectively, and that the length of the argument of quicksort is n. Now consider the function

Assuming the bounds

![]() $n_1$

and

$n_1$

and

![]() $n^2$

, a tight bound for the function app-qs is

$n^2$

, a tight bound for the function app-qs is

![]() $n_1^2 + 2 n_1n_2 + n_2^2 + n_1$

. This bound is not expressible in the univariate system, but one might expect that a looser bound like

$n_1^2 + 2 n_1n_2 + n_2^2 + n_1$

. This bound is not expressible in the univariate system, but one might expect that a looser bound like

![]() $4n_1^2 + 4n_2^2 + n_1$

is derivable. However, the function app-qs is not typeable in univariate AARA since univariate potential functions cannot express suitable invariants in the type derivation of append. The desire to improve compositionality of polynomial AARA and to express more precise bounds leads to the development of multivariate polynomial AARA in 2010 (Hoffmann et al. Reference Hoffmann, Aehlig and Hofmann2011).

$4n_1^2 + 4n_2^2 + n_1$

is derivable. However, the function app-qs is not typeable in univariate AARA since univariate potential functions cannot express suitable invariants in the type derivation of append. The desire to improve compositionality of polynomial AARA and to express more precise bounds leads to the development of multivariate polynomial AARA in 2010 (Hoffmann et al. Reference Hoffmann, Aehlig and Hofmann2011).

Multivariate AARA is an extension of univariate AARA. It preserves the principles of the univariate system while expanding the set of potential functions so as to express dependencies between different data structures. The term multivariate refers to the use of multivariate monomials like

![]() $n_1 n_2$

as base functions. They enable us to derive the aforementioned bound

$n_1 n_2$

as base functions. They enable us to derive the aforementioned bound

![]() $n_1^2 + 2 n_1n_2 + n_2^2 + n_1$

for the function app-qs.

$n_1^2 + 2 n_1n_2 + n_2^2 + n_1$

for the function app-qs.

Resource Annotations and Base Polynomials

Other than in univariate, potential annotations are now global and there is exactly one annotation per type. More formally, we can write data types that do not contain function arrows as pairs

![]() ${{\langle {\tau},{Q} \rangle}}$

so that τ is a standard type like

${{\langle {\tau},{Q} \rangle}}$

so that τ is a standard type like

![]() $L({L({\mathbf{1}})})$

and the potential annotation

$L({L({\mathbf{1}})})$

and the potential annotation

![]() $Q = (q_i)_{i \in I(\tau)}$

is a family of non-negative rational numbers

$Q = (q_i)_{i \in I(\tau)}$

is a family of non-negative rational numbers

![]() $q_i \in {\mathbb{Q}_{\geq 0}}$

.

$q_i \in {\mathbb{Q}_{\geq 0}}$

.

The (possible infinite) index set I(τ) contains an index i for every base polynomial

![]() $p_i$

for that type. In a well-formed potential annotation only finitely many

$p_i$

for that type. In a well-formed potential annotation only finitely many

![]() $q_i$

are larger than 0. For example, we have

$q_i$

are larger than 0. For example, we have

![]() $I(L({\mathbf{1}})) = \mathbb{N}$

and

$I(L({\mathbf{1}})) = \mathbb{N}$

and

![]() $p_i(n) = \binom{n}{i}$

. The potential defined by an annotation Q is then

$p_i(n) = \binom{n}{i}$

. The potential defined by an annotation Q is then

![]() $\sum_{i \in I(L({\mathbf{1}}))} q_i p_i$

. Note that the constant potential (

$\sum_{i \in I(L({\mathbf{1}}))} q_i p_i$

. Note that the constant potential (

![]() $\binom{n}{0}$

) is also included in the global annotation. As another example, we have

$\binom{n}{0}$

) is also included in the global annotation. As another example, we have

![]() $I(L({\mathbf{1}}) \times L({\mathbf{1}})) = \mathbb{N} \times \mathbb{N}$

and

$I(L({\mathbf{1}}) \times L({\mathbf{1}})) = \mathbb{N} \times \mathbb{N}$

and

![]() $p_{(i,j)}(n,m) = \binom{n}{i}\binom{m}{j}$

.

$p_{(i,j)}(n,m) = \binom{n}{i}\binom{m}{j}$

.

The Potential of Lists and Pairs

In general, the base polynomials

![]() $\mathcal{B}{\tau}$

are inductively defined for inductive data structures and can, for example, take into account the number of certain constructors used in that data structure. For lists and pairs, the inductive definition looks as follows.

$\mathcal{B}{\tau}$

are inductively defined for inductive data structures and can, for example, take into account the number of certain constructors used in that data structure. For lists and pairs, the inductive definition looks as follows.

\begin{eqnarray*} \mathcal{B}(\mathrm{unit}) &=& \{ \lambda (v) \, 1 \} \\ \mathcal{B}({{\tau_1} \times {\tau_2}}) &=& \{ \lambda ( {\langle {v_1},{v_2} \rangle} ) \; p_1(v_1) {\cdot} p_2(v_2) \mid p_i \in \mathcal{B}(\tau_i) \} \\ \mathcal{B}(L(\tau)) &=& \big\{ \lambda ([v_1,\ldots,v_n]) \; \sum_{1 \leq j_1 < \cdots < j_k \leq n} \, \prod_{1 \leq i \leq k} p_i(v_{j_i}) \mid k \in \mathbb{N}, p_i \in \mathcal{B}(\tau) \big\}\end{eqnarray*}

\begin{eqnarray*} \mathcal{B}(\mathrm{unit}) &=& \{ \lambda (v) \, 1 \} \\ \mathcal{B}({{\tau_1} \times {\tau_2}}) &=& \{ \lambda ( {\langle {v_1},{v_2} \rangle} ) \; p_1(v_1) {\cdot} p_2(v_2) \mid p_i \in \mathcal{B}(\tau_i) \} \\ \mathcal{B}(L(\tau)) &=& \big\{ \lambda ([v_1,\ldots,v_n]) \; \sum_{1 \leq j_1 < \cdots < j_k \leq n} \, \prod_{1 \leq i \leq k} p_i(v_{j_i}) \mid k \in \mathbb{N}, p_i \in \mathcal{B}(\tau) \big\}\end{eqnarray*}