3312 results in Artificial Intelligence and Natural Language Processing

Efficient bilingual lexicon extraction from comparable corpora based on formal concepts analysis

- Journal: Natural Language Engineering / Volume 29 / Issue 1 / January 2023
- Published online by Cambridge University Press: 04 October 2021, pp. 138-161
- Article

Evaluation of taxonomic and neural embedding methods for calculating semantic similarity

- Journal: Natural Language Engineering / Volume 28 / Issue 6 / November 2022
- Published online by Cambridge University Press: 28 September 2021, pp. 733-761
- Article

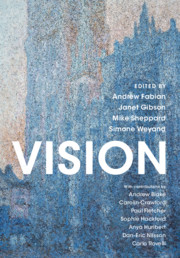

Vision

- Published online: 17 September 2021
- Print publication: 26 August 2021

Foreword

- Book: Similar Languages, Varieties, and Dialects
- Published online: 12 August 2021
- Print publication: 02 September 2021, pp. xiii-xv
- Chapter

13 - Arabic Dialect Processing

- Book: Similar Languages, Varieties, and Dialects
- Published online: 12 August 2021
- Print publication: 02 September 2021, pp. 279-303
- Chapter

9 - Dialect and Similar Language Identification

- Book: Similar Languages, Varieties, and Dialects
- Published online: 12 August 2021
- Print publication: 02 September 2021, pp. 187-203
- Chapter

4 - Mutual Intelligibility

- Book: Similar Languages, Varieties, and Dialects
- Published online: 12 August 2021
- Print publication: 02 September 2021, pp. 51-95
- Chapter

5 - Dialectology for Computational Linguists

- Book: Similar Languages, Varieties, and Dialects
- Published online: 12 August 2021
- Print publication: 02 September 2021, pp. 96-118
- Chapter

Contributors

- Book: Similar Languages, Varieties, and Dialects
- Published online: 12 August 2021
- Print publication: 02 September 2021, pp. xi-xii
- Chapter

7 - Adaptation of Morphosyntactic Taggers

- Book: Similar Languages, Varieties, and Dialects
- Published online: 12 August 2021
- Print publication: 02 September 2021, pp. 138-166
- Chapter

Introduction

- Book: Similar Languages, Varieties, and Dialects
- Published online: 12 August 2021
- Print publication: 02 September 2021, pp. xvi-xviii
- Chapter

10 - Dialect Variation on Social Media

- Book: Similar Languages, Varieties, and Dialects
- Published online: 12 August 2021
- Print publication: 02 September 2021, pp. 204-218
- Chapter

Part III - Applications and Language Specific Issues

- Book: Similar Languages, Varieties, and Dialects
- Published online: 12 August 2021
- Print publication: 02 September 2021, pp. 185-186
- Chapter

1 - Language Variation

- Book: Similar Languages, Varieties, and Dialects
- Published online: 12 August 2021
- Print publication: 02 September 2021, pp. 3-16
- Chapter

3 - Similar Languages, Varieties, and Dialects: Status and Variation

- Book: Similar Languages, Varieties, and Dialects
- Published online: 12 August 2021
- Print publication: 02 September 2021, pp. 27-50
- Chapter

11 - Machine Translation between Similar Languages

- Book: Similar Languages, Varieties, and Dialects
- Published online: 12 August 2021
- Print publication: 02 September 2021, pp. 219-253
- Chapter

2 - Phonetic Variation in Dialects

- Book: Similar Languages, Varieties, and Dialects
- Published online: 12 August 2021
- Print publication: 02 September 2021, pp. 17-26
- Chapter

14 - Computational Processing of Varieties of Chinese: Comparable Corpus-Driven Approaches to Light Verb Variation

- Book: Similar Languages, Varieties, and Dialects
- Published online: 12 August 2021
- Print publication: 02 September 2021, pp. 304-326
- Chapter

Frontmatter

- Book: Similar Languages, Varieties, and Dialects
- Published online: 12 August 2021
- Print publication: 02 September 2021, pp. i-vi
- Chapter

6 - Data Collection and Representation for Similar Languages, Varieties and Dialects

- Book: Similar Languages, Varieties, and Dialects
- Published online: 12 August 2021
- Print publication: 02 September 2021, pp. 121-137
- Chapter
