48532 results in Computer Science

Opinion dynamics beyond social influence

- Journal: Network Science / Volume 12 / Issue 4 / December 2024

- Published online by Cambridge University Press: 21 October 2024, pp. 339-365

- Article

- Open access

Applied logic and semantics on indoor and urban adaptive design through knowledge graphs, reasoning and explainable argumentation

- Journal: The Knowledge Engineering Review / Volume 39 / 2024

- Published online by Cambridge University Press: 21 October 2024, e4

- Article

- Open access

Unraveling the Brexit–COVID-19 nexus: assessing the decline of EU student applications into UK universities

- Journal: Data & Policy / Volume 6 / 2024

- Published online by Cambridge University Press: 21 October 2024, e45

- Article

- Open access

Transparency challenges in policy evaluation with causal machine learning: improving usability and accountability

- Journal: Data & Policy / Volume 6 / 2024

- Published online by Cambridge University Press: 18 October 2024, e43

- Article

- Open access

Reset controller synthesis: a correct-by-construction way to the design of CPS

- Journal: Research Directions: Cyber-Physical Systems / Volume 2 / 2024

- Published online by Cambridge University Press: 18 October 2024, e7

- Article

- Open access

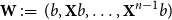

Cokernel statistics for walk matrices of directed and weighted random graphs

- Journal: Combinatorics, Probability and Computing / Volume 34 / Issue 1 / January 2025

- Published online by Cambridge University Press: 18 October 2024, pp. 131-150

- Article

- Open access

AI, big data, and quest for truth: the role of theoretical insight

- Journal: Data & Policy / Volume 6 / 2024

- Published online by Cambridge University Press: 18 October 2024, e44

- Article

- Open access

Logistics efficiency in Brazilian cities applying data envelopment analysis

- Journal: Data & Policy / Volume 6 / 2024

- Published online by Cambridge University Press: 18 October 2024, e42

- Article

- Open access

Part II - Financial Regulation and Investor Protection

- Book: The Cambridge Handbook of Law and Policy for NFTs

- Published online: 02 November 2024

- Print publication: 17 October 2024, pp. 57-136

- Chapter

Part III - Capital Markets, Community, and Marketing

- Book: The Cambridge Handbook of Law and Policy for NFTs

- Published online: 02 November 2024

- Print publication: 17 October 2024, pp. 137-214

- Chapter

16 - The Cybersecurity of NFTs and Digital Assets

- from Part V - Data Protection, Privacy, Cybersecurity, and NFTs

- Book: The Cambridge Handbook of Law and Policy for NFTs

- Published online: 02 November 2024

- Print publication: 17 October 2024, pp. 321-334

- Chapter

Tables

- Book: The Cambridge Handbook of Law and Policy for NFTs

- Published online: 02 November 2024

- Print publication: 17 October 2024, pp. xiii-xiv

- Chapter

Part VI - Other Legal Issues with NFTs

- Book: The Cambridge Handbook of Law and Policy for NFTs

- Published online: 02 November 2024

- Print publication: 17 October 2024, pp. 349-394

- Chapter

Part VII - Conclusions and Future Directions

- Book: The Cambridge Handbook of Law and Policy for NFTs

- Published online: 02 November 2024

- Print publication: 17 October 2024, pp. 395-416

- Chapter

4 - Digital Assets, Anti-Money Laundering, and Counter Financing of Terrorism

- from Part II - Financial Regulation and Investor Protection

- Book: The Cambridge Handbook of Law and Policy for NFTs

- Published online: 02 November 2024

- Print publication: 17 October 2024, pp. 78-102

- Chapter

17 - NFTs within the Commercial Supply Chain

- from Part V - Data Protection, Privacy, Cybersecurity, and NFTs

- Book: The Cambridge Handbook of Law and Policy for NFTs

- Published online: 02 November 2024

- Print publication: 17 October 2024, pp. 335-348

- Chapter

2 - The Language Landmines of Blockchain Technology and Cryptocurrency

- from Part I - Introduction and Background

- Book: The Cambridge Handbook of Law and Policy for NFTs

- Published online: 02 November 2024

- Print publication: 17 October 2024, pp. 38-56

- Chapter

15 - Fungibility, Information Flow, and Privacy

- from Part V - Data Protection, Privacy, Cybersecurity, and NFTs

- Book: The Cambridge Handbook of Law and Policy for NFTs

- Published online: 02 November 2024

- Print publication: 17 October 2024, pp. 299-320

- Chapter

Index

- Book: The Cambridge Handbook of Law and Policy for NFTs

- Published online: 02 November 2024

- Print publication: 17 October 2024, pp. 417-435

- Chapter