52382 results in Statistics and Probability

Mortality models incorporating long memory for life table estimation: a comprehensive analysis

- Journal: Annals of Actuarial Science / Volume 15 / Issue 3 / November 2021
- Published online by Cambridge University Press: 02 February 2021, pp. 567-604
- Article

A DOUBLE COMMON FACTOR MODEL FOR MORTALITY PROJECTION USING BEST-PERFORMANCE MORTALITY RATES AS REFERENCE

- Journal: ASTIN Bulletin: The Journal of the IAA / Volume 51 / Issue 2 / May 2021
- Published online by Cambridge University Press: 01 February 2021, pp. 349-374
- Print publication: May 2021
- Article

Diversity of SARS-CoV-2 isolates driven by pressure and health index

- Journal: Epidemiology & Infection / Volume 149 / 2021
- Published online by Cambridge University Press: 01 February 2021, e38
- Article
- Open access

TEMPERED PARETO-TYPE MODELLING USING WEIBULL DISTRIBUTIONS

- Journal: ASTIN Bulletin: The Journal of the IAA / Volume 51 / Issue 2 / May 2021
- Published online by Cambridge University Press: 01 February 2021, pp. 509-538
- Print publication: May 2021
- Article
- Open access

On the Erdős–Sós conjecture for trees with bounded degree

- Journal: Combinatorics, Probability and Computing / Volume 30 / Issue 5 / September 2021
- Published online by Cambridge University Press: 01 February 2021, pp. 741-761
- Article

Comparative characterisation of human and ovine non-aureus staphylococci isolated in Sardinia (Italy) for antimicrobial susceptibility profiles and resistance genes

- Journal: Epidemiology & Infection / Volume 149 / 2021
- Published online by Cambridge University Press: 29 January 2021, e45
- Article
- Open access

Epidemiology of COVID-19 in Northern Ireland, 26 February 2020–26 April 2020

- Journal: Epidemiology & Infection / Volume 149 / 2021
- Published online by Cambridge University Press: 29 January 2021, e36
- Article
- Open access

From satisficing to artificing: The evolution of administrative decision-making in the age of the algorithm

- Journal: Data & Policy / Volume 3 / 2021
- Published online by Cambridge University Press: 29 January 2021, e3
- Article
- Open access

Thrombocytopenia as a prognostic marker in COVID-19 patients: diagnostic test accuracy meta-analysis

- Journal: Epidemiology & Infection / Volume 149 / 2021
- Published online by Cambridge University Press: 29 January 2021, e40
- Article
- Open access

The number of maximum primitive sets of integers

- Journal: Combinatorics, Probability and Computing / Volume 30 / Issue 5 / September 2021
- Published online by Cambridge University Press: 28 January 2021, pp. 781-795
- Article

Urgent need for evaluation of point-of-care tests as an RT-PCR-sparing strategy for the diagnosis of Covid-19 in symptomatic patients

- Journal: Epidemiology & Infection / Volume 149 / 2021
- Published online by Cambridge University Press: 28 January 2021, e35
- Article
- Open access

A scoping review of the detection, epidemiology and control of Cyclospora cayetanensis with an emphasis on produce, water and soil

- Journal: Epidemiology & Infection / Volume 149 / 2021
- Published online by Cambridge University Press: 28 January 2021, e49
- Article
- Open access

Immunogenicity and safety of rapid scheme vaccination against tick-borne encephalitis in HIV-1 infected persons

- Journal: Epidemiology & Infection / Volume 149 / 2021
- Published online by Cambridge University Press: 28 January 2021, e41
- Article
- Open access

Optimal group testing

- Journal: Combinatorics, Probability and Computing / Volume 30 / Issue 6 / November 2021
- Published online by Cambridge University Press: 28 January 2021, pp. 811-848
- Article

Response to Letter to Editor by Zavascki A.P.: Urgent need for evaluating point-of-care tests as a RT-PCR-sparing strategy for the diagnosis of Covid-19 in symptomatic patients

- Journal: Epidemiology & Infection / Volume 149 / 2021
- Published online by Cambridge University Press: 28 January 2021, e33
- Article
- Open access

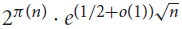

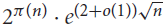

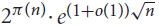

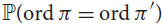

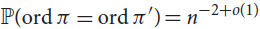

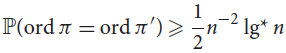

Permutations with equal orders

- Journal: Combinatorics, Probability and Computing / Volume 30 / Issue 5 / September 2021
- Published online by Cambridge University Press: 27 January 2021, pp. 800-810
- Article

A counterexample to the Bollobás–Riordan conjectures on sparse graph limits

- Journal: Combinatorics, Probability and Computing / Volume 30 / Issue 5 / September 2021
- Published online by Cambridge University Press: 27 January 2021, pp. 796-799
- Article

NONLINEAR COINTEGRATING POWER FUNCTION REGRESSION WITH ENDOGENEITY

- Journal: Econometric Theory / Volume 37 / Issue 6 / December 2021
- Published online by Cambridge University Press: 26 January 2021, pp. 1173-1213
- Article

Artificial Benchmark for Community Detection (ABCD)—Fast random graph model with community structure

- Journal: Network Science / Volume 9 / Issue 2 / June 2021
- Published online by Cambridge University Press: 26 January 2021, pp. 153-178
- Article
- Open access

ON THE UNIFORM CONVERGENCE OF DECONVOLUTION ESTIMATORS FROM REPEATED MEASUREMENTS

- Journal: Econometric Theory / Volume 38 / Issue 1 / February 2022
- Published online by Cambridge University Press: 25 January 2021, pp. 172-193
- Article