48284 results in Computer Science

Machine learning approaches for the prediction of serious fluid leakage from hydrocarbon wells

- Journal: Data-Centric Engineering / Volume 4 / 2023

- Published online by Cambridge University Press: 19 May 2023, e12

- Article

- Open access

Emerging trends: Risks 3.0 and proliferation of spyware to 50,000 cell phones

- Journal: Natural Language Engineering / Volume 29 / Issue 3 / May 2023

- Published online by Cambridge University Press: 19 May 2023, pp. 824-841

- Article

- Open access

Obituary: Yorick Wilks

- Journal: Natural Language Engineering / Volume 29 / Issue 3 / May 2023

- Published online by Cambridge University Press: 19 May 2023, pp. 846-847

- Article

- Open access

Raw driving data of passenger cars considering traffic conditions in Semnan city

- Journal: Experimental Results / Volume 4 / 2023

- Published online by Cambridge University Press: 19 May 2023, e14

- Article

- Open access

Handbook of Augmented Reality Training Design Principles

- Published online: 18 May 2023

- Print publication: 01 June 2023

A comparison of latent semantic analysis and correspondence analysis of document-term matrices

- Journal: Natural Language Engineering / Volume 30 / Issue 4 / July 2024

- Published online by Cambridge University Press: 18 May 2023, pp. 722-752

- Article

- Open access

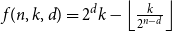

Subspace coverings with multiplicities

- Journal: Combinatorics, Probability and Computing / Volume 32 / Issue 5 / September 2023

- Published online by Cambridge University Press: 18 May 2023, pp. 782-795

- Article

- Open access

A pair degree condition for Hamiltonian cycles in 3-uniform hypergraphs

- Journal: Combinatorics, Probability and Computing / Volume 32 / Issue 5 / September 2023

- Published online by Cambridge University Press: 17 May 2023, pp. 762-781

- Article

- Open access

Recommending tasks based on search queries and missions

- Journal: Natural Language Engineering / Volume 30 / Issue 3 / May 2024

- Published online by Cambridge University Press: 17 May 2023, pp. 577-601

- Article

- Open access

RSL volume 16 issue 2 Cover and Back matter

- Journal: The Review of Symbolic Logic / Volume 16 / Issue 2 / June 2023

- Published online by Cambridge University Press: 16 May 2023, pp. b1-b2

- Print publication: June 2023

- Article

RSL volume 16 issue 2 Cover and Front matter

- Journal: The Review of Symbolic Logic / Volume 16 / Issue 2 / June 2023

- Published online by Cambridge University Press: 16 May 2023, pp. f1-f4

- Print publication: June 2023

- Article

Multistage approach for trajectory optimization for a wheeled inverted pendulum passing under an obstacle

- Article

- Open access

Universal geometric graphs

- Journal: Combinatorics, Probability and Computing / Volume 32 / Issue 5 / September 2023

- Published online by Cambridge University Press: 15 May 2023, pp. 742-761

- Article

- Open access

Determining sentiment views of verbal multiword expressions using linguistic features

- Journal: Natural Language Engineering / Volume 30 / Issue 2 / March 2024

- Published online by Cambridge University Press: 15 May 2023, pp. 256-293

- Article

- Open access

Joint learning of text alignment and abstractive summarization for long documents via unbalanced optimal transport

- Journal: Natural Language Engineering / Volume 30 / Issue 3 / May 2024

- Published online by Cambridge University Press: 15 May 2023, pp. 525-553

- Article

- Open access

Online and Matching-Based Market Design

- Published online: 13 May 2023

- Print publication: 22 June 2023

Adverse childhood experiences and craving: Results from an Italian population in outpatient addiction treatment

- Journal: Experimental Results / Volume 4 / 2023

- Published online by Cambridge University Press: 12 May 2023, e11

- Article

- Open access

Introduction to Choreographies

- Published online: 11 May 2023

- Print publication: 25 May 2023