1418034 results in Open Access

SSH volume 48 issue 1 Cover and Back matter

- Journal: Social Science History / Volume 48 / Issue 1 / Spring 2024
- Published online by Cambridge University Press: 06 March 2024, pp. b1-b2
- Print publication: Spring 2024
- Article

JFQ volume 59 issue 1 Cover and Front matter

- Journal: Journal of Financial and Quantitative Analysis / Volume 59 / Issue 1 / February 2024
- Published online by Cambridge University Press: 14 February 2024, pp. f1-f4
- Print publication: February 2024
- Article

Orders and Disorders of Marriage, Church, and Empire in Mid-Nineteenth-Century Ottoman Armenia

- Journal: International Journal of Middle East Studies / Volume 56 / Issue 1 / February 2024
- Published online by Cambridge University Press: 14 March 2024, pp. 75-90
- Print publication: February 2024
- Article
- Open access

David Lehmann, After the Decolonial: Ethnicity, Gender and Social Justice in Latin America, Polity, 2022, pp. xiv + 223

- Journal: Journal of Latin American Studies / Volume 56 / Issue 1 / February 2024
- Published online by Cambridge University Press: 12 March 2024, pp. 187-189
- Print publication: February 2024
- Article

Beckett’s Wasted Breath

- Journal: New Theatre Quarterly / Volume 40 / Issue 1 / February 2024
- Published online by Cambridge University Press: 13 February 2024, pp. 62-75
- Print publication: February 2024
- Article
- Open access

SITI Training: Viewpoints and the Suzuki Method for Cross-Cultural Collaboration

- Journal: New Theatre Quarterly / Volume 40 / Issue 1 / February 2024
- Published online by Cambridge University Press: 13 February 2024, pp. 48-61
- Print publication: February 2024
- Article

POLICIES FOR INCLUSIVE GROWTH

- Journal: National Institute Economic Review / Volume 267 / Spring 2024
- Published online by Cambridge University Press: 29 April 2024, pp. 26-34
- Print publication: Spring 2024
- Article
- Open access

NMITE AND THE POLITICAL ECONOMY OF HIGHER EDUCATION: 2023 PARLIAMENTARY LECTURE

- Journal: National Institute Economic Review / Volume 267 / Spring 2024
- Published online by Cambridge University Press: 08 April 2025, pp. 66-73
- Print publication: Spring 2024
- Article
- Open access

Effects of horizontal magnetic fields on turbulent Rayleigh–Bénard convection in a cuboid vessel with aspect ratio Γ = 5

- Journal: Journal of Fluid Mechanics / Volume 980 / 10 February 2024
- Published online by Cambridge University Press: 31 January 2024, A24
- Article

Mitigating Unemployment Stigma: Racialized Differences in Impression Management among Urban and Suburban Jobseekers

- Journal: Du Bois Review: Social Science Research on Race / Volume 21 / Issue 2 / Fall 2024
- Published online by Cambridge University Press: 31 January 2024, pp. 273-292
- Article

Effectiveness and predictors of group cognitive behaviour therapy outcome for generalised anxiety disorder in an out-patient hospital setting

- Journal: Behavioural and Cognitive Psychotherapy / Volume 52 / Issue 4 / July 2024
- Published online by Cambridge University Press: 31 January 2024, pp. 440-455
- Print publication: July 2024
- Article
- Open access

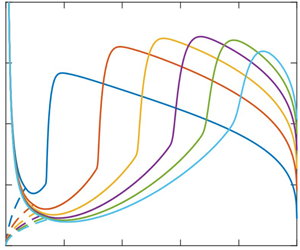

The characteristics of the circular hydraulic jump and vortex structure

- Journal: Journal of Fluid Mechanics / Volume 980 / 10 February 2024
- Published online by Cambridge University Press: 31 January 2024, A15
- Article

Travelling Barricades: Transnational Networks, Diffusion and the Dynamics of 1980s Squatter Conflicts in Western Europe – CORRIGENDUM

- Journal: Contemporary European History / Volume 33 / Issue 2 / May 2024
- Published online by Cambridge University Press: 31 January 2024, p. 809
- Print publication: May 2024
- Article
- Open access

Flows with free boundaries and hydrodynamic singularities

- Journal: Journal of Fluid Mechanics / Volume 980 / 10 February 2024
- Published online by Cambridge University Press: 31 January 2024, A23
- Article

Arazyme secreted by Serratia proteamaculans: current understanding in animal husbandry – CORRIGENDUM

- Journal: The Journal of Agricultural Science / Volume 161 / Issue 5 / October 2023
- Published online by Cambridge University Press: 31 January 2024, p. 703
- Article

Yielding to percolation: a universal scale

- Journal: Journal of Fluid Mechanics / Volume 980 / 10 February 2024
- Published online by Cambridge University Press: 31 January 2024, A14
- Article
- Open access

Path instability of deformable bubbles rising in Newtonian liquids: a linear study

- Journal: Journal of Fluid Mechanics / Volume 980 / 10 February 2024
- Published online by Cambridge University Press: 31 January 2024, A19
- Article
- Open access

Thanks to Reviewers

- Journal: The Cognitive Behaviour Therapist / Volume 17 / 2024
- Published online by Cambridge University Press: 31 January 2024, e2
- Article