1731 results in 60Jxx

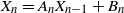

Analysing heavy-tail properties of stochastic gradient descent by means of stochastic recurrence equations

- Journal: Journal of Applied Probability, First View
- Published online by Cambridge University Press: 10 February 2026, pp. 1-25
- Article (open access)

Euler–Maruyama approximation for stable stochastic differential equations with Markovian switching and related asymptotics

- Journal: Journal of Applied Probability, First View
- Published online by Cambridge University Press: 05 February 2026, pp. 1-29
- Article

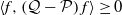

Quantitative convergence rates for stochastically monotone Markov chains

- Journal: Journal of Applied Probability, First View
- Published online by Cambridge University Press: 21 January 2026, pp. 1-13
- Article (open access)

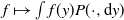

Comparing the Efficiency of General State Space Reversible MCMC Algorithms

- Journal: Advances in Applied Probability, First View
- Published online by Cambridge University Press: 15 January 2026, pp. 1-31
- Article (open access)

Degree-penalized contact processes

- Journal: Forum of Mathematics, Sigma / Volume 14 / 2026
- Published online by Cambridge University Press: 15 January 2026, e6
- Article (open access)

Competition of small targets in planar domains: from Dirichlet to Robin and Steklov boundary condition

- Journal: European Journal of Applied Mathematics, First View
- Published online by Cambridge University Press: 12 January 2026, pp. 1-48
- Article (open access)

Multitype branching processes with immigration generated by point processes

- Journal: Journal of Applied Probability, First View
- Published online by Cambridge University Press: 12 January 2026, pp. 1-21
- Article

Quasi-stationary distributions in reducible state spaces

- Journal: Advances in Applied Probability, First View
- Published online by Cambridge University Press: 26 December 2025, pp. 1-37
- Article

Numerical method for solving impulse control problems in partially observed piecewise deterministic Markov processes

- Journal: Advances in Applied Probability, First View
- Published online by Cambridge University Press: 15 December 2025, pp. 1-32
- Article

Intrinsic stochastic differential equations and the extended Itô formula on manifolds

- Journal: Proceedings of the Royal Society of Edinburgh. Section A: Mathematics, First View
- Published online by Cambridge University Press: 12 December 2025, pp. 1-33
- Article (open access)

Branching particle systems with mutually catalytic interactions

- Journal: Advances in Applied Probability, First View
- Published online by Cambridge University Press: 11 December 2025, pp. 1-38
- Article (open access)

Asymptotic analysis for stationary distributions of multiscaled reaction networks

- Journal: Advances in Applied Probability, First View
- Published online by Cambridge University Press: 11 December 2025, pp. 1-31
- Article (open access)

Distribution of integers with digit restrictions via Markov chains

- Journal: Ergodic Theory and Dynamical Systems / Volume 46 / Issue 3 / March 2026
- Published online by Cambridge University Press: 01 December 2025, pp. 757-804
- Print publication: March 2026
- Article (open access)

On ergodicity and transience for a class of level-dependent GI/M/1-type Markov chains

- Journal: Journal of Applied Probability / Volume 62 / Issue 4 / December 2025
- Published online by Cambridge University Press: 21 November 2025, pp. 1301-1317
- Print publication: December 2025
- Article

On approximating the Potts model with contracting Glauber dynamics

- Journal: Advances in Applied Probability, First View
- Published online by Cambridge University Press: 07 November 2025, pp. 1-38
- Article (open access)

A sequential stopping problem with costly reversibility

- Journal: Journal of Applied Probability, First View
- Published online by Cambridge University Press: 03 November 2025, pp. 1-33
- Article (open access)

Mixed-state branching evolution for cell division models

- Journal: Journal of Applied Probability, First View
- Published online by Cambridge University Press: 29 September 2025, pp. 1-22
- Article

Explicit pathwise expansion for multivariate diffusions with applications

- Journal: Advances in Applied Probability, First View
- Published online by Cambridge University Press: 29 September 2025, pp. 1-34
- Article

Two-type branching processes with immigration, and the structured coalescents

- Journal: Journal of Applied Probability, First View
- Published online by Cambridge University Press: 29 September 2025, pp. 1-29
- Article

Glauber dynamics for the hard-core model on bounded-degree $H$-free graphs

- Journal: Combinatorics, Probability and Computing / Volume 34 / Issue 6 / November 2025
- Published online by Cambridge University Press: 19 September 2025, pp. 803-814
- Article (open access)