225 results in 62Exx

Asymptotics for a multidimensional risk model with a random number of delayed claims and multivariate regularly varying distribution

- Journal: Advances in Applied Probability, First View

- Published online by Cambridge University Press: 10 December 2025, pp. 1-30

- Article

Uniform asymptotics for a time-dependent bidimensional delay-claim risk model with stochastic return and dependent subexponential claims

- Journal: Advances in Applied Probability, First View

- Published online by Cambridge University Press: 10 December 2025, pp. 1-37

- Article

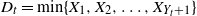

The distribution of the minimum observation until a stopping time, with an application to the minimal spacing in a Renewal process

- Journal: Journal of Applied Probability, First View

- Published online by Cambridge University Press: 21 November 2025, pp. 1-20

- Article

- Open access

Quantization dimensions of negative order

- Journal: Mathematical Proceedings of the Cambridge Philosophical Society / Volume 180 / Issue 2 / March 2026

- Published online by Cambridge University Press: 11 November 2025, pp. 433-457

- Print publication: March 2026

- Article

- Open access

Comparisons of variances through the probabilistic mean value theorem and applications

- Journal: Advances in Applied Probability, First View

- Published online by Cambridge University Press: 15 July 2025, pp. 1-32

- Article

- Open access

Extropy-based dynamic cumulative residual inaccuracy measure: properties and applications

- Journal: Probability in the Engineering and Informational Sciences / Volume 39 / Issue 4 / October 2025

- Published online by Cambridge University Press: 26 February 2025, pp. 461-485

- Article

- Open access

Asymptotics of the allele frequency spectrum and the number of alleles

- Journal: Journal of Applied Probability / Volume 62 / Issue 2 / June 2025

- Published online by Cambridge University Press: 22 November 2024, pp. 516-540

- Print publication: June 2025

- Article

Asymptotics for the sum-ruin probability of a bi-dimensional compound risk model with dependent numbers of claims

- Journal: Probability in the Engineering and Informational Sciences / Volume 38 / Issue 4 / October 2024

- Published online by Cambridge University Press: 20 September 2024, pp. 765-779

- Article

- Open access

A remark on exact simulation of tempered stable Ornstein–Uhlenbeck processes

- Journal: Journal of Applied Probability / Volume 61 / Issue 4 / December 2024

- Published online by Cambridge University Press: 02 May 2024, pp. 1196-1198

- Print publication: December 2024

- Article

ON THE CUMULATIVE DISTRIBUTION FUNCTION OF THE VARIANCE-GAMMA DISTRIBUTION

- Journal: Bulletin of the Australian Mathematical Society / Volume 110 / Issue 2 / October 2024

- Published online by Cambridge University Press: 29 January 2024, pp. 389-397

- Print publication: October 2024

- Article

Normal approximation in total variation for statistics in geometric probability

- Journal: Advances in Applied Probability / Volume 56 / Issue 1 / March 2024

- Published online by Cambridge University Press: 03 July 2023, pp. 106-155

- Print publication: March 2024

- Article

Distribution and moments of the error term in the lattice point counting problem for three-dimensional Cygan–Korányi balls

- Journal: Proceedings of the Royal Society of Edinburgh. Section A: Mathematics / Volume 154 / Issue 3 / June 2024

- Published online by Cambridge University Press: 19 May 2023, pp. 830-861

- Print publication: June 2024

- Article

- Open access

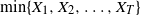

Distributions of random variables involved in discrete censored δ-shock models

- Journal: Advances in Applied Probability / Volume 55 / Issue 4 / December 2023

- Published online by Cambridge University Press: 19 May 2023, pp. 1144-1170

- Print publication: December 2023

- Article

On Bayesian credibility mean for finite mixture distributions

- Journal: Annals of Actuarial Science / Volume 18 / Issue 1 / March 2024

- Published online by Cambridge University Press: 29 March 2023, pp. 5-29

- Article

Exactly solvable urn models under random replacement schemes and their applications

- Journal: Journal of Applied Probability / Volume 60 / Issue 3 / September 2023

- Published online by Cambridge University Press: 14 February 2023, pp. 835-854

- Print publication: September 2023

- Article

A negative binomial approximation in group testing

- Journal: Probability in the Engineering and Informational Sciences / Volume 37 / Issue 4 / October 2023

- Published online by Cambridge University Press: 28 October 2022, pp. 973-996

- Article

Multivariate Poisson and Poisson process approximations with applications to Bernoulli sums and $U$-statistics

- Journal: Journal of Applied Probability / Volume 60 / Issue 1 / March 2023

- Published online by Cambridge University Press: 30 September 2022, pp. 223-240

- Print publication: March 2023

- Article

Bounds for the chi-square approximation of the power divergence family of statistics

- Journal: Journal of Applied Probability / Volume 59 / Issue 4 / December 2022

- Published online by Cambridge University Press: 09 August 2022, pp. 1059-1080

- Print publication: December 2022

- Article

Community detection and percolation of information in a geometric setting

- Journal: Combinatorics, Probability and Computing / Volume 31 / Issue 6 / November 2022

- Published online by Cambridge University Press: 31 May 2022, pp. 1048-1069

- Article

- Open access

ELECTRICAL IMPEDANCE TOMOGRAPHY USING NONCONFORMING MESH AND POSTERIOR APPROXIMATED REGRESSION

- Journal: Bulletin of the Australian Mathematical Society / Volume 105 / Issue 3 / June 2022

- Published online by Cambridge University Press: 03 March 2022, pp. 520-522

- Print publication: June 2022

- Article