52607 results in Statistics and Probability

Index

- Book: High-Dimensional Probability
- Published online: 30 January 2026
- Print publication: 19 February 2026, pp. 327-331
- Chapter

5 - Concentration without Independence

- Book: High-Dimensional Probability
- Published online: 30 January 2026
- Print publication: 19 February 2026, pp. 140-170
- Chapter

References

- Book: High-Dimensional Probability
- Published online: 30 January 2026
- Print publication: 19 February 2026, pp. 312-326
- Chapter

5 - Confirmatory Factor Analysis

- Book: Elements of Structural Equation Models (SEMs)
- Published online: 05 February 2026
- Print publication: 19 February 2026, pp. 297-410
- Chapter

Contents

- Book: High-Dimensional Probability
- Published online: 30 January 2026
- Print publication: 19 February 2026, pp. v-viii
- Chapter

3 - Multiple Regression as a Structural Equation Model

- Book: Elements of Structural Equation Models (SEMs)
- Published online: 05 February 2026
- Print publication: 19 February 2026, pp. 82-146
- Chapter

Afterword

- Book: Elements of Structural Equation Models (SEMs)
- Published online: 05 February 2026
- Print publication: 19 February 2026, pp. 590-591
- Chapter

Fluid limit of a distributed ledger model with random delay

- Journal: Advances in Applied Probability, First View
- Published online by Cambridge University Press: 13 February 2026, pp. 1-41
- Article
- Open access

Bivariate shock models driven by the geometric counting process

- Journal: Journal of Applied Probability, First View
- Published online by Cambridge University Press: 12 February 2026, pp. 1-15
- Article
- Open access

Optimal allocation policies for relevations in coherent systems

- Journal: Advances in Applied Probability, First View
- Published online by Cambridge University Press: 12 February 2026, pp. 1-39
- Article

Evaluation of diagnostic practices surrounding foodborne disease in a large university-based healthcare system

- Journal: Epidemiology & Infection / Accepted manuscript
- Published online by Cambridge University Press: 12 February 2026, pp. 1-27
- Article
- Open access

ROBUST ESTIMATION FOR THE SPATIAL AUTOREGRESSIVE MODEL

- Journal: Econometric Theory, First View
- Published online by Cambridge University Press: 12 February 2026, pp. 1-51
- Article
- Open access

NEW ASYMPTOTICS APPLIED TO FUNCTIONAL COEFFICIENT REGRESSION AND CLIMATE SENSITIVITY ANALYSIS

- Journal: Econometric Theory, First View
- Published online by Cambridge University Press: 11 February 2026, pp. 1-53
- Article

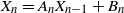

Analysing heavy-tail properties of stochastic gradient descent by means of stochastic recurrence equations

- Journal: Journal of Applied Probability, First View
- Published online by Cambridge University Press: 10 February 2026, pp. 1-25
- Article
- Open access

Cytomegalovirus Seroprevalence and Seroconversion in the Irish Blood Donor Population

- Journal: Epidemiology & Infection / Accepted manuscript
- Published online by Cambridge University Press: 09 February 2026, pp. 1-18
- Article
- Open access

Unravelling the dengue surge in South Asia during 2000–2023: pattern, trend, genomics, and key determinants

- Journal: Epidemiology & Infection / Accepted manuscript
- Published online by Cambridge University Press: 06 February 2026, pp. 1-19
- Article
- Open access

IFoA Presidential Address 2025 by Paul Sweeting

- Journal: British Actuarial Journal / Volume 31 / 2026
- Published online by Cambridge University Press: 06 February 2026, e4
- Article
- Open access

Excess Mortality in Mainland China after the End of the Zero COVID Policy: A Systematic Review

- Journal: Epidemiology & Infection / Accepted manuscript
- Published online by Cambridge University Press: 06 February 2026, pp. 1-22
- Article
- Open access

Antimicrobial resistance in Bordetella pertussis: a systematic review and meta-analysis

- Journal: Epidemiology & Infection / Accepted manuscript
- Published online by Cambridge University Press: 06 February 2026, pp. 1-28
- Article
- Open access

Leveraging the power of ChatGPT to analyze policy framing: policy agendas and issue positions of U.S. governors during the COVID-19 crisis

- Journal: Data & Policy / Volume 8 / 2026
- Published online by Cambridge University Press: 06 February 2026, e7
- Article
- Open access