1723 results in 60Jxx

The shape of a seed bank tree

- Journal: Journal of Applied Probability / Volume 59 / Issue 3 / September 2022

- Published online by Cambridge University Press: 02 June 2022, pp. 631-651

- Print publication: September 2022

- Article

On equal-input and monotone Markov matrices

- Journal: Advances in Applied Probability / Volume 54 / Issue 2 / June 2022

- Published online by Cambridge University Press: 06 June 2022, pp. 460-492

- Print publication: June 2022

- Article

Limit theorems for continuous-state branching processes with immigration

- Journal: Advances in Applied Probability / Volume 54 / Issue 2 / June 2022

- Published online by Cambridge University Press: 06 June 2022, pp. 599-624

- Print publication: June 2022

- Article

Moment-constrained optimal dividends: precommitment and consistent planning

- Journal: Advances in Applied Probability / Volume 54 / Issue 2 / June 2022

- Published online by Cambridge University Press: 06 June 2022, pp. 404-432

- Print publication: June 2022

- Article

Construction of age-structured branching processes by stochastic equations

- Journal: Journal of Applied Probability / Volume 59 / Issue 3 / September 2022

- Published online by Cambridge University Press: 31 May 2022, pp. 670-684

- Print publication: September 2022

- Article

Community detection and percolation of information in a geometric setting

- Journal: Combinatorics, Probability and Computing / Volume 31 / Issue 6 / November 2022

- Published online by Cambridge University Press: 31 May 2022, pp. 1048-1069

- Article

- Open access

The critical mean-field Chayes–Machta dynamics

- Journal: Combinatorics, Probability and Computing / Volume 31 / Issue 6 / November 2022

- Published online by Cambridge University Press: 11 May 2022, pp. 924-975

- Article

- Open access

Optimal discounted drawdowns in a diffusion approximation under proportional reinsurance

- Journal: Journal of Applied Probability / Volume 59 / Issue 2 / June 2022

- Published online by Cambridge University Press: 30 March 2022, pp. 527-540

- Print publication: June 2022

- Article

An averaging process on hypergraphs

- Journal: Journal of Applied Probability / Volume 59 / Issue 2 / June 2022

- Published online by Cambridge University Press: 29 March 2022, pp. 495-504

- Print publication: June 2022

- Article

On large-deviation probabilities for the empirical distribution of branching random walks with heavy tails

- Journal: Journal of Applied Probability / Volume 59 / Issue 2 / June 2022

- Published online by Cambridge University Press: 24 March 2022, pp. 471-494

- Print publication: June 2022

- Article

An ephemerally self-exciting point process

- Journal: Advances in Applied Probability / Volume 54 / Issue 2 / June 2022

- Published online by Cambridge University Press: 14 March 2022, pp. 340-403

- Print publication: June 2022

- Article

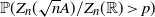

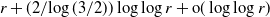

Refined universality for critical KCM: lower bounds

- Journal: Combinatorics, Probability and Computing / Volume 31 / Issue 5 / September 2022

- Published online by Cambridge University Press: 03 March 2022, pp. 879-906

- Article

Limit theorems for critical branching processes in a finite-state-space Markovian environment

- Journal: Advances in Applied Probability / Volume 54 / Issue 1 / March 2022

- Published online by Cambridge University Press: 01 March 2022, pp. 111-140

- Print publication: March 2022

- Article

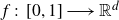

Images of fractional Brownian motion with deterministic drift: Positive Lebesgue measure and non-empty interior

- Journal: Mathematical Proceedings of the Cambridge Philosophical Society / Volume 173 / Issue 3 / November 2022

- Published online by Cambridge University Press: 28 February 2022, pp. 693-713

- Print publication: November 2022

- Article

Limit theorems and structural properties of the cat-and-mouse Markov chain and its generalisations

- Journal: Advances in Applied Probability / Volume 54 / Issue 1 / March 2022

- Published online by Cambridge University Press: 28 February 2022, pp. 141-166

- Print publication: March 2022

- Article

STATIONARY MARKOVIAN ARRIVAL PROCESSES: RESULTS AND OPEN PROBLEMS

- Journal: The ANZIAM Journal / Volume 64 / Issue 1 / January 2022

- Published online by Cambridge University Press: 22 February 2022, pp. 54-68

- Article

- Open access

Renewal theory for iterated perturbed random walks on a general branching process tree: intermediate generations

- Journal: Journal of Applied Probability / Volume 59 / Issue 2 / June 2022

- Published online by Cambridge University Press: 18 February 2022, pp. 421-446

- Print publication: June 2022

- Article

On Foster–Lyapunov criteria for exponential ergodicity of regime-switching jump diffusion processes with countable regimes

- Journal: Journal of Applied Probability / Volume 59 / Issue 1 / March 2022

- Published online by Cambridge University Press: 10 February 2022, pp. 167-186

- Print publication: March 2022

- Article

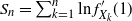

Cover time for branching random walks on regular trees

- Journal: Journal of Applied Probability / Volume 59 / Issue 1 / March 2022

- Published online by Cambridge University Press: 09 February 2022, pp. 256-277

- Print publication: March 2022

- Article

Two-type linear-fractional branching processes in varying environments with asymptotically constant mean matrices

- Journal: Journal of Applied Probability / Volume 59 / Issue 1 / March 2022

- Published online by Cambridge University Press: 07 February 2022, pp. 224-255

- Print publication: March 2022

- Article