84 results in 68Wxx

Rejection- and importance-sampling-based perfect simulation for Gibbs hard-sphere models

- Journal: Advances in Applied Probability / Volume 53 / Issue 3 / September 2021
- Published online by Cambridge University Press: 08 October 2021, pp. 839-885
- Print publication: September 2021
- Article

Average-case complexity of the Euclidean algorithm with a fixed polynomial over a finite field

- Journal: Combinatorics, Probability and Computing / Volume 31 / Issue 1 / January 2022
- Published online by Cambridge University Press: 06 July 2021, pp. 166-183
- Article

More on zeros and approximation of the Ising partition function

- Journal: Forum of Mathematics, Sigma / Volume 9 / 2021
- Published online by Cambridge University Press: 07 June 2021, e46
- Article
- Open access

AN ALGORITHM OF COMPUTING COHOMOLOGY INTERSECTION NUMBER OF HYPERGEOMETRIC INTEGRALS

- Journal: Nagoya Mathematical Journal / Volume 246 / June 2022
- Published online by Cambridge University Press: 07 May 2021, pp. 256-272
- Print publication: June 2022
- Article

Polynomial-time approximation algorithms for the antiferromagnetic Ising model on line graphs

- Journal: Combinatorics, Probability and Computing / Volume 30 / Issue 6 / November 2021
- Published online by Cambridge University Press: 12 April 2021, pp. 905-921
- Article

Tail bounds on hitting times of randomized search heuristics using variable drift analysis

- Journal: Combinatorics, Probability and Computing / Volume 30 / Issue 4 / July 2021
- Published online by Cambridge University Press: 05 November 2020, pp. 550-569
- Article

Approximately counting bases of bicircular matroids

- Journal: Combinatorics, Probability and Computing / Volume 30 / Issue 1 / January 2021
- Published online by Cambridge University Press: 06 August 2020, pp. 124-135
- Article

Deterministic counting of graph colourings using sequences of subgraphs

- Journal: Combinatorics, Probability and Computing / Volume 29 / Issue 4 / July 2020
- Published online by Cambridge University Press: 22 June 2020, pp. 555-586
- Article

Estimating parameters associated with monotone properties

- Journal: Combinatorics, Probability and Computing / Volume 29 / Issue 4 / July 2020
- Published online by Cambridge University Press: 24 March 2020, pp. 616-632
- Article

The string of diamonds is nearly tight for rumour spreading

- Journal: Combinatorics, Probability and Computing / Volume 29 / Issue 2 / March 2020
- Published online by Cambridge University Press: 04 November 2019, pp. 190-199
- Article

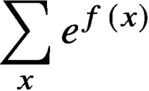

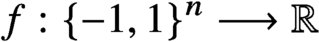

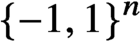

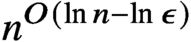

Weighted counting of solutions to sparse systems of equations

- Journal: Combinatorics, Probability and Computing / Volume 28 / Issue 5 / September 2019
- Published online by Cambridge University Press: 15 April 2019, pp. 696-719
- Article

Perturbation Analysis of Orthogonal Least Squares

- Journal: Canadian Mathematical Bulletin / Volume 62 / Issue 4 / December 2019
- Published online by Cambridge University Press: 22 March 2019, pp. 780-797
- Print publication: December 2019
- Article

Analysis of Robin Hood and Other Hashing Algorithms Under the Random Probing Model, With and Without Deletions

- Journal: Combinatorics, Probability and Computing / Volume 28 / Issue 4 / July 2019
- Published online by Cambridge University Press: 14 August 2018, pp. 600-617
- Article

Dual-Pivot Quicksort: Optimality, Analysis and Zeros of Associated Lattice Paths

- Journal: Combinatorics, Probability and Computing / Volume 28 / Issue 4 / July 2019
- Published online by Cambridge University Press: 14 August 2018, pp. 485-518
- Article

Asymmetric Rényi Problem

- Journal: Combinatorics, Probability and Computing / Volume 28 / Issue 4 / July 2019
- Published online by Cambridge University Press: 27 June 2018, pp. 542-573
- Article

GLOBAL A PRIORI IDENTIFIABILITY OF MODELS OF FLOW-CELL OPTICAL BIOSENSOR EXPERIMENTS

- Journal: Bulletin of the Australian Mathematical Society / Volume 98 / Issue 2 / October 2018
- Published online by Cambridge University Press: 14 June 2018, pp. 350-352
- Print publication: October 2018
- Article

Fast Property Testing and Metrics for Permutations

- Journal: Combinatorics, Probability and Computing / Volume 27 / Issue 4 / July 2018
- Published online by Cambridge University Press: 24 May 2018, pp. 539-579
- Article

Dependence between path-length and size in random digital trees

- Journal: Journal of Applied Probability / Volume 54 / Issue 4 / December 2017
- Published online by Cambridge University Press: 30 November 2017, pp. 1125-1143
- Print publication: December 2017
- Article

A dichotomy for sampling barrier-crossing events of random walks with regularly varying tails

- Journal: Journal of Applied Probability / Volume 54 / Issue 4 / December 2017
- Published online by Cambridge University Press: 30 November 2017, pp. 1213-1232
- Print publication: December 2017
- Article

A Simple SVD Algorithm for Finding Hidden Partitions

- Journal: Combinatorics, Probability and Computing / Volume 27 / Issue 1 / January 2018
- Published online by Cambridge University Press: 09 October 2017, pp. 124-140
- Article