124 results in 68Qxx

Functional norms, condition numbers and numerical algorithms in algebraic geometry

- Journal: Forum of Mathematics, Sigma / Volume 10 / 2022
- Published online by Cambridge University Press: 22 November 2022, e103
- Article
- Open access

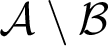

On images of subshifts under embeddings of symbolic varieties

- Journal: Ergodic Theory and Dynamical Systems / Volume 43 / Issue 9 / September 2023
- Published online by Cambridge University Press: 01 August 2022, pp. 3131-3149
- Print publication: September 2023
- Article
- Open access

IS CAUSAL REASONING HARDER THAN PROBABILISTIC REASONING?

- Journal: The Review of Symbolic Logic / Volume 17 / Issue 1 / March 2024
- Published online by Cambridge University Press: 18 May 2022, pp. 106-131
- Print publication: March 2024
- Article

SOLENOIDAL MAPS, AUTOMATIC SEQUENCES, VAN DER PUT SERIES, AND MEALY AUTOMATA

- Journal: Journal of the Australian Mathematical Society / Volume 114 / Issue 1 / February 2023
- Published online by Cambridge University Press: 06 April 2022, pp. 78-109
- Print publication: February 2023
- Article

GAPS IN THE THUE–MORSE WORD

- Journal: Journal of the Australian Mathematical Society / Volume 114 / Issue 1 / February 2023
- Published online by Cambridge University Press: 25 January 2022, pp. 110-144
- Print publication: February 2023
- Article

A MINIMAL SET LOW FOR SPEED

- Journal: The Journal of Symbolic Logic / Volume 87 / Issue 4 / December 2022
- Published online by Cambridge University Press: 03 January 2022, pp. 1693-1728
- Print publication: December 2022
- Article

Tuning as convex optimisation: a polynomial tuner for multi-parametric combinatorial samplers

- Journal: Combinatorics, Probability and Computing / Volume 31 / Issue 5 / September 2022
- Published online by Cambridge University Press: 15 December 2021, pp. 765-811
- Article

Topology of random $2$-dimensional cubical complexes

- Journal: Forum of Mathematics, Sigma / Volume 9 / 2021
- Published online by Cambridge University Press: 29 November 2021, e76
- Article
- Open access

NEW RELATIONS AND SEPARATIONS OF CONJECTURES ABOUT INCOMPLETENESS IN THE FINITE DOMAIN

- Journal: The Journal of Symbolic Logic / Volume 87 / Issue 3 / September 2022
- Published online by Cambridge University Press: 22 November 2021, pp. 912-937
- Print publication: September 2022
- Article

DEGREES OF RANDOMIZED COMPUTABILITY

- Journal: Bulletin of Symbolic Logic / Volume 28 / Issue 1 / March 2022
- Published online by Cambridge University Press: 27 September 2021, pp. 27-70
- Print publication: March 2022
- Article

INFORMATION IN PROPOSITIONAL PROOFS AND ALGORITHMIC PROOF SEARCH

- Journal: The Journal of Symbolic Logic / Volume 87 / Issue 2 / June 2022
- Published online by Cambridge University Press: 27 September 2021, pp. 852-869
- Print publication: June 2022
- Article

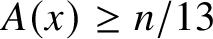

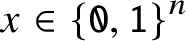

An incompressibility theorem for automatic complexity

- Journal: Forum of Mathematics, Sigma / Volume 9 / 2021
- Published online by Cambridge University Press: 10 September 2021, e62
- Article
- Open access

Yaglom limit for stochastic fluid models

- Journal: Advances in Applied Probability / Volume 53 / Issue 3 / September 2021
- Published online by Cambridge University Press: 08 October 2021, pp. 649-686
- Print publication: September 2021
- Article

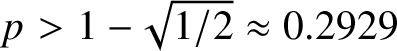

On the mixing time of coordinate Hit-and-Run

- Journal: Combinatorics, Probability and Computing / Volume 31 / Issue 2 / March 2022
- Published online by Cambridge University Press: 25 August 2021, pp. 320-332
- Article

CONTRIBUTIONS TO THE THEORY OF F-AUTOMATIC SETS

- Journal: The Journal of Symbolic Logic / Volume 87 / Issue 1 / March 2022
- Published online by Cambridge University Press: 08 June 2021, pp. 127-158
- Print publication: March 2022
- Article

The power of two choices for random walks

- Journal: Combinatorics, Probability and Computing / Volume 31 / Issue 1 / January 2022
- Published online by Cambridge University Press: 28 May 2021, pp. 73-100
- Article
- Open access

Most permutations power to a cycle of small prime length

- Journal: Proceedings of the Edinburgh Mathematical Society / Volume 64 / Issue 2 / May 2021
- Published online by Cambridge University Press: 24 May 2021, pp. 234-246
- Article

BEING CAYLEY AUTOMATIC IS CLOSED UNDER TAKING WREATH PRODUCT WITH VIRTUALLY CYCLIC GROUPS

- Journal: Bulletin of the Australian Mathematical Society / Volume 104 / Issue 3 / December 2021
- Published online by Cambridge University Press: 13 April 2021, pp. 464-474
- Print publication: December 2021
- Article

Polynomial-time approximation algorithms for the antiferromagnetic Ising model on line graphs

- Journal: Combinatorics, Probability and Computing / Volume 30 / Issue 6 / November 2021
- Published online by Cambridge University Press: 12 April 2021, pp. 905-921
- Article

BISIMULATIONS FOR KNOWING HOW LOGICS

- Journal: The Review of Symbolic Logic / Volume 15 / Issue 2 / June 2022
- Published online by Cambridge University Press: 22 March 2021, pp. 450-486
- Print publication: June 2022
- Article